Update #40: AI's Year in Review and In-Context Learning as Gradient Descent

Selected trends from a speedy year in AI, and the relationship between in-context learning and gradient descent

Happy New Year, and welcome to the 40th update from the Gradient! If you were referred by a friend, subscribe and follow us on Twitter!

The 2023 Gradient Prize is open! Send us your pitches here :)

News Highlight: AI’s Year In Review

Instead of making predictions for 2023, as ML researchers, we thought it might be better to look at data from 2022 to analyze trends from this past year. Let’s dive in!

What we searched for in 2022

The “AI” keyword search boomed globally in 2022, peaking at an all-time high during the week of December 11-17 (ChatGPT launched on November 30). Related phrases that surged include “midjourney”, “Stable Diffusion”, “ChatGPT”, “craiyon”, “Dall E Mini”, and of course “Google AI Sentient”.

Can you guess the top 5 countries in which “Machine Learning” was the most popular keyword search as a fraction of all searches on Google from that country? Prepare to be bamboozled—here’s the list in decreasing order of popularity:

Iran

India

Pakistan

Kenya

Nigeria

It’s exciting to note that the list includes no Western countries, and is perhaps indicative of the emerging role that AI will play in developing countries in this decade. Play with the data yourself on Google Trends here.

Where we invested in 2022

CB Insights’ Q3 2022 report on the State of AI lays out various trends and statistics in investments in AI. Here are our key takeaways from the 137-page report:

Funding in AI is projected to drop roughly 30% YoY (down to $47.3B in 2022, from $68.8B in 2021)

Deals and funding dropped to 8-quarter lows in Q3 2022 globally

The US holds the largest share of AI funding (38-41%), closely followed by Asia (33-37%)

Venture capital makes up the largest share of AI funding (29%)

Of all rounds, early-stage deals lead the pack at 65% of all investments

Healthcare funding dropped to a 9-quarter low. 62% of all deals in healthcare were in the US

Fintech AI deals hit a record low. While Europe captured more than half of the total funding amount, more deals were done in the US

Retail Tech AI deals dropped to a 7-quarter low, with the US capturing the majority of funding

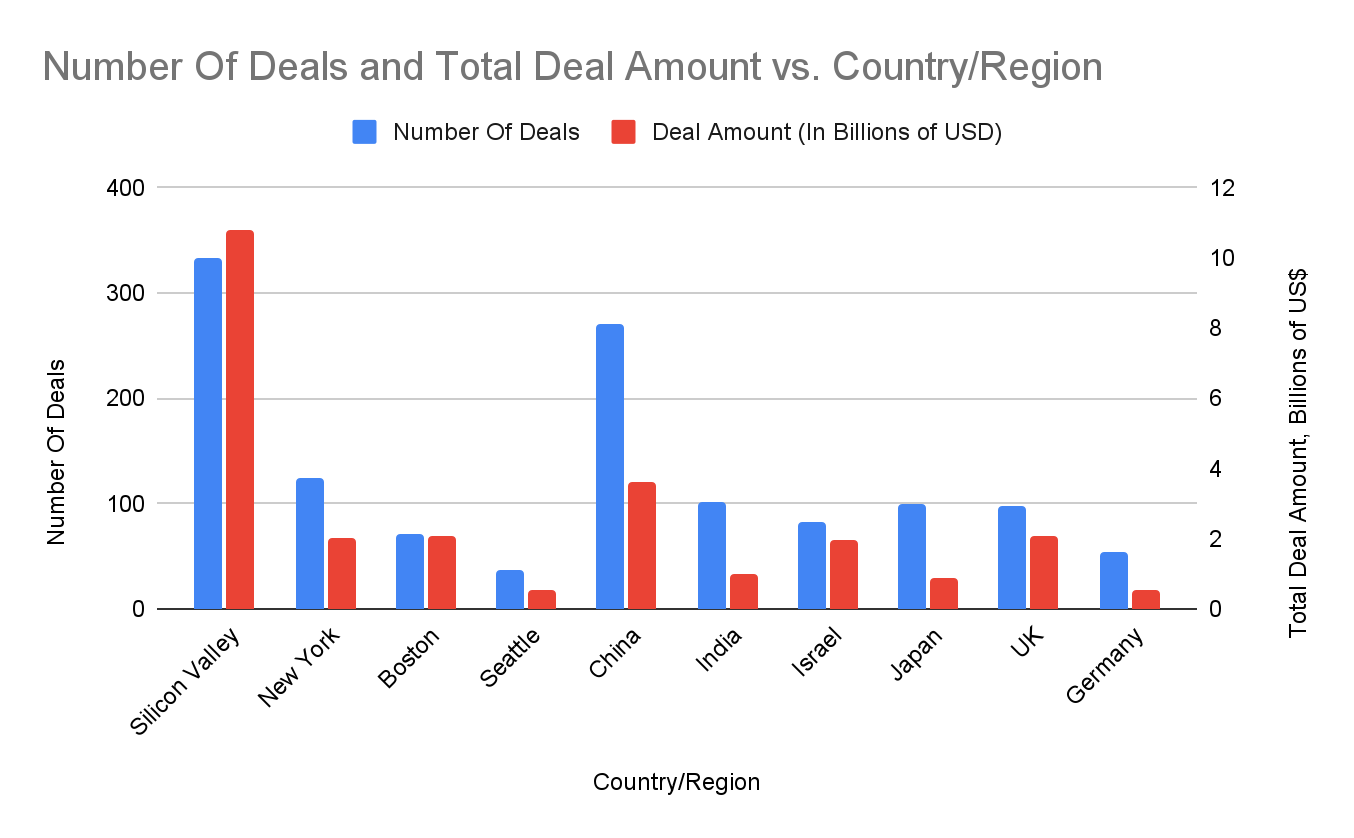

We also broke down the number of deals and total deal amount up to the end of Q3 2022 by geographic region/country. In the United States, Silicon Valley still dominates in both numbers, while China placed second in the global market. See the chart below for a more detailed analysis.

How organizations used AI in 2022

McKinsey also published its State of AI in 2022 Report, which instead covers trends in the industry’s adoption and use of AI. Let’s get right to it:

Trends in AI Adoption:

AI adoption by organizations has leveled off in the past few years at roughly 50-60%, with companies integrating 3-4 different kinds of ML algorithms in their operations. Of all ML sub-domains, which do you guess is the most widely adopted? If you guessed NLP, you’d be following the trend but off the mark. The table is led by robotics, with a 39% adoption rate, followed by computer vision (34%). NLP/conversational interfaces came in third at 33%.

Hiring and Talent in AI:

Companies that use AI mostly deploy engineers in the roles of Software Engineers, Data Engineers, and AI Data Scientists. Most survey respondents say that hiring people in these roles has been hard, and has not become easier over time, with Data Scientists being the hardest to find. Interestingly, companies that are “AI High Performers” (defined by McKinsey as companies for which 20% or more of their EBIT (Earnings Before Interest and Taxes) came from AI use) employ AI Data Scientists and Machine Learning Engineers at a much higher rate than other companies that use AI (~60% of high performers employ individuals in these roles compared to ~30% of other companies). These AI high performers also seem to recruit from a wider range of sources than other companies. Specifically, high performers recruit employees in ML roles from universities at roughly twice the rate of other companies, while the primary recruiting pathway for other companies was upskilling employees internally into AI-related roles (47% of all respondents). Lastly, while only about a quarter of all employees at companies using AI identified as a racial or ethnic minority or as women, fewer than half of these companies have programs in place to increase diversity in the workplace.

How we published in 2022

arXiv continued to be dominated by publications in computer science, receiving 69,848 submissions in 2022 (a 5% increase over 2021, see arXiv statistics for CS). 2022 also saw a return to in-person conferences, and submissions to major conferences increased compared to 2021: CVPR (+16.3%), NeurIPS (+14.13%), AAAI (+14.01%), ICLR (+11.04%), ICML (+2.12%) (numbers from GitHub).

Another State of AI in 2022 report by Nathan Benaich and Ian Hogarth laid out various trends in AI, including those in AI research. 2022 was the year of generative models (DALL-E, Parti, Imagen, Make-a-video, Phenaki, to name a few), breakthroughs in AI applied to the physical sciences (advances in Nuclear Fusion, applications of AlphaFold 2 from basic science to drug discovery, protein localization understanding), LLMs applied to Robotics (SayCan), and the rise of decentralized AI research labs (Adept, Cohere, Stability.ai, Midjourney). Want a deeper dive into the report? Check out our podcast with Nathan Benaich, one of the authors of the State of AI report, here.

Research Highlight: Language Model In-context Learning as Gradient Descent

Sources:

[1] What learning algorithm is in-context learning? Investigations with linear models

[2] Transformers learn in-context by gradient descent

[3] Why Can GPT Learn In-Context? Language Models Secretly Perform Gradient Descent as Meta-Optimizers

Summary

Transformer-based large language models (LLMs) demonstrate an ability called in-context learning, whereby a model, given several examples to illustrate a task in a prompt, is capable of performing the task without any parameter updates. Several recent works give evidence that in-context learning may work like classical gradient-based optimization algorithms in certain situations. They show that small Transformer layers can theoretically implement gradient descent on the in-context data, and provide empirical evidence showing that in-context learning mimics gradient descent in certain senses.

Overview

In-context learning is a very useful and surprising capability of LLMs, so researchers have worked to understand possible mechanisms behind it. The explanations hypothesized by [1][2][3] relate in-context learning to gradient-based optimization algorithms performed on the in-context examples. In particular, let PROMPT denote the in-context examples; PROMPT consists of pairs of one input (e.g. a French phrase) and an associated output (e.g. the English translation). In-context learning takes in the in-context examples along with a new input x and seeks to predict the associated output y, so we want Transformer(PROMPT, x) ≈ y.

On the other hand, an optimization algorithm like gradient descent can be used to fine tune the Transformer on the training examples in PROMPT before giving this Transformer with updated weights the new input. [1][2][3] suggest that oftentimes the Transformer’s prediction will be similar for both the in-context-learning and fine tuning paradigms.

The three recent works each give some theoretical evidence suggesting that Transformer-based LLMs can implement gradient descent. [1] provides particular weight instantiations of small Transformers such that the forward passes implement gradient descent on linear regression tasks; similarly, [2] provides this implementation for a single linear self-attention layer, a simplified model of the Transformer architecture. This does not imply that trained Transformers necessarily converge to in-context learners that implement gradient descent, but it shows that Transformer architectures of modest size technically can implement gradient descent. Moreover, [3] provides an interpretation of the forward pass of a linearized Transformer as predicting based on a weight matrix updated by a so-called meta-gradient computed from in-context examples.
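
To make the correspondence concrete, here is a minimal NumPy sketch of the simplest case we can think of. It is our own illustration, not code from [1] or [2], and the function names, learning rate, and data sizes are assumptions. For linear regression with squared loss and weights initialized at zero, the prediction after one gradient step equals a softmax-free attention readout in which the in-context inputs act as keys, the in-context outputs as values, and the new input as the query.

```python
import numpy as np

def one_step_gd_prediction(X, y, x_query, lr):
    """Predict after one gradient step on
    L(w) = 1/(2N) * sum_i (w . x_i - y_i)^2, starting from w = 0."""
    n = X.shape[0]
    w0 = np.zeros(X.shape[1])
    grad = X.T @ (X @ w0 - y) / n   # gradient of the squared loss at w0
    w1 = w0 - lr * grad             # one plain gradient-descent update
    return w1 @ x_query

def linear_attention_prediction(X, y, x_query, lr):
    """Attention without softmax: keys = x_i, values = y_i, query = x_query."""
    n = X.shape[0]
    scores = X @ x_query            # key-query dot products, one per example
    return lr / n * (scores @ y)    # score-weighted sum of the values

# Toy in-context examples (hypothetical sizes, noiseless linear targets)
rng = np.random.default_rng(0)
X = rng.normal(size=(16, 4))
w_true = rng.normal(size=4)
y = X @ w_true
x_query = rng.normal(size=4)

gd_pred = one_step_gd_prediction(X, y, x_query, lr=0.1)
attn_pred = linear_attention_prediction(X, y, x_query, lr=0.1)
print(np.isclose(gd_pred, attn_pred))  # prints True
```

The two functions agree because the gradient at zero is just the negated, averaged sum of y_i times x_i, so the updated weights applied to the query reduce to a weighted sum of the y_i. The constructions in the papers handle more general settings; this sketch only covers the zero-initialization, single-step case.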

These works also have experiments demonstrating various degrees of alignment between in-context learners and optimization algorithms for fine tuning. [1] shows on linear regression tasks that 1-layer in-context learning Transformers match one step of gradient descent more closely than they match other learning algorithms, while deeper and wider Transformers match Bayes-optimal algorithms (ordinary least squares or ridge regression solutions) very well. [2] shows that Transformers with linear self-attention performing in-context learning often match gradient descent or an improved variant of gradient descent remarkably well in terms of loss achieved. [3] compares SGD 1-epoch fine-tuning with in-context learning on several downstream tasks, and finds that in-context learning tends to perform significantly better. They also find that attention maps in later layers tend to be similar for fine-tuned and in-context learning Transformers.
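
For readers who want to see the reference algorithms side by side, the sketch below fits the three linear-regression baselines named above on toy data: ordinary least squares, ridge regression, and one step of gradient descent from zero. It is an illustration under our own assumptions (data sizes, noise level, learning rate, and function names are all hypothetical), not the experimental setup of [1]. The ridge penalty is set to the noise variance divided by the prior variance, which is the Bayes-optimal choice when weights and noise are both Gaussian.

```python
import numpy as np

def ols_fit(X, y):
    """Ordinary least squares via a least-squares solver."""
    return np.linalg.lstsq(X, y, rcond=None)[0]

def ridge_fit(X, y, lam):
    """Ridge regression: solve (X^T X + lam * I) w = X^T y."""
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(d), X.T @ y)

def gd_one_step_fit(X, y, lr):
    """Weights after one gradient step on the mean squared loss from w = 0."""
    return lr * X.T @ y / X.shape[0]

# Toy task: Gaussian weights, Gaussian inputs, Gaussian label noise (assumed)
rng = np.random.default_rng(0)
n, d, noise_std, prior_std = 32, 4, 0.5, 1.0
w_true = rng.normal(scale=prior_std, size=d)
X = rng.normal(size=(n, d))
y = X @ w_true + rng.normal(scale=noise_std, size=n)

lam = noise_std**2 / prior_std**2   # Bayes-optimal penalty under these assumptions
fits = {
    "ols": ols_fit(X, y),
    "ridge": ridge_fit(X, y, lam),
    "gd_1_step": gd_one_step_fit(X, y, lr=0.1),
}
errors = {name: float(np.linalg.norm(w - w_true)) for name, w in fits.items()}
for name, err in errors.items():
    print(f"{name}: parameter error {err:.3f}")
```

Comparing a trained Transformer's in-context predictions against each of these fits, for varying numbers of in-context examples, is one way to ask which algorithm it most resembles.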

Why does it matter?

In-context learning is a key property of LLMs, which allows them great flexibility and power for performing numerous tasks. Much work has focused on investigating the capabilities of in-context learning in Transformers, or uncovering possible mechanisms behind in-context learning. The works [1][2][3] show that in certain settings, in-context learning may behave like the better-understood optimization algorithms for fine tuning. Optimization algorithms have been thoroughly studied, and this prior literature may be useful for understanding and improving in-context learning; for instance, [3] leverages the connection between gradient descent and attention to derive a form of attention with “momentum” that is analogous to accelerated gradient descent algorithms that use momentum updates.

Author Q&A

To find out more, we asked Ekin Akyürek, PhD student at MIT CSAIL and first author of [1], a few questions:

Q1: If language models do indeed implement in-context-learning as optimization algorithms on in-context-examples across a wide variety of settings, what would this imply for the field?

A1: “We hypothesize that in-context learning can be implemented by LMs running *learning* algorithms internally.

We first need to understand the triviality of this. There are very simple learning algorithms such as k-nearest neighbor that are very easy to implement for a Transformer model — answering a query based on similarity to the examples presented in the context. This is similar to the ‘induction head’ view proposed before by Anthropic. But I think the interesting part is whether they can come up with more nuanced algorithms like GD or ridge regression or an algorithm that we don’t know much about. What kind of pre-training could elicit this behavior? If they can come up with better algorithms, can we read them off?”

Q2: What are important future directions for understanding in-context-learning?

A2: “With sufficient data, Transformers can converge to Bayes optimal solutions as shown in our work. This means that they pick up the prior distribution of data presented during pre-training. Hence, Transformers trained on finite data will not learn generic learning algorithms such as GD and have an additional prior penalty. An important direction is understanding how this prior changes with the pre-training data: how can we analyze and control it? There is an interesting result with real LMs by Min et al. showing that reshuffling labels doesn’t change the in-context learning ability of the model in, for example, a sentiment analysis task. Our finding gives an explanation for this: sometimes the LM can have a strong prior so that you cannot change its behavior via in-context exemplars. So, another important direction is how can we bypass this prior when we need to?”

Q3: Are you excited by any promising directions for improving in-context-learning capabilities of sequence models?

A3: “We show that Transformer layers are very expressive in terms of algebra (matrix multiplication, dot products, addition etc.). Learning algorithms can essentially be expressed as sets of algebraic instructions. So, I believe the architecture here is not the bottleneck, and improving in-context learning efficiency could come from data-work on pre-training these models. But there is another issue with in-context learning: computational complexity. Each example in prompt makes inference slower and expensive. If you have enough data and only care about a specific task, overall it is probably cheaper to fine-tune a model and serve it without prompts. So, improving computational complexity of inference is important to make in-context learning/prompting practical.”

Editor Comments

Derek: I was excited to see these works come out, as the hypothesis that in-context learning may work similarly to learning algorithms for fine tuning on in-context examples is really aesthetically pleasing and may provide some (hopefully useful) intuition about in-context learning. Also, these works provide interesting and natural extensions to the observations of Garg et al., who showed that Transformers could nearly optimally learn linear regressions in-context, and could also learn other function classes quite well (see our coverage of that paper in Gradient Update #31).

New from the Gradient

Happy Holidays! Podcast Roundup

Other Things That Caught Our Eyes

News

A 72-year-old congressman goes back to school, pursuing a degree in AI “Normally Don Beyer doesn't bring his multivariable calculus textbook to work, but his final exam was coming up that weekend.”

Copyright Office Sets Sights on Artificial Intelligence in 2023 “The US Copyright Office over the next year will focus on addressing legal gray areas that surround copyright protections and artificial intelligence, amid increasing concerns that IP policy is lagging behind technology.”

AI or No, It’s Always Too Soon to Sound the Death Knell of Art “There’s a hilarious illustration from Paris in late 1839, mere months after an early type of photograph called a daguerreotype was announced to the world, that warned what this tiny picture portended.”

New YouChat Chatbot Offers ChatGPT-Style Generative AI Search Engine “Search engine developer You.com has debuted a new conversational AI tool combining search with a ChatGPT-style generative AI engine.”

Europe Is Lagging Behind In Developing Large AI Models “Europe is lagging behind, as unfortunately is doing in other fields… they generally lack the amount of money, data, and computational resources required to develop these systems.”

ChatGPT Banned on Chinese Social Media App WeChat “OpenAI’s ChatGPT has been removed from WeChat, the popular Chinese social media app developed by TikTok owner Tencent. The conversational AI based on the GPT-3.5 large language model had been popping up as mini-programs on the social network, but are no longer accessible.”

Papers

Daniel: I’m pretty sure that Cramming is going to get a lot of buzz if it hasn’t already, so I’ll go with something different: “Emergent Analogical Reasoning in Language Models” is a paper claiming that GPT-3 matches and sometimes surpasses human capabilities for abstract pattern induction, including a novel reasoning task modeled on Raven’s Progressive Matrices. This is very interesting because a number of notable minds in AI, including François Chollet and Melanie Mitchell, have poked at LLMs’ abilities to perform just such analogical reasoning–indeed, if the claims in this paper hold in a strong form then that would be very interesting for our understanding of intelligence and the ability of LLMs to be more than “manifold manipulators,” as Chollet might put it. However, it’s worth noting that Raven’s Progressive Matrices are here morphed into a text-based matrix reasoning task–I agree with this response that the text-based problem feels more difficult than the original visual problem, and I would expect this to have implications for the human-machine comparison conducted in the paper. That said, I would be excited to see more balanced discussion and further investigation into the work from this paper. Because I already can’t seem to pick one paper, I’ll use a last sentence to shill this work by Jimmy Lin on building a culture of reproducibility in research.

Derek: I liked “Recycling diverse models for out-of-distribution generalization”. First, it contains a great summary and comparison of different linear mode connectivity hypotheses and related weight averaging or ensembling schemes; included are quotes from seminal papers in this area, which I do not see often but I quite enjoyed in this paper. It also proposes two new linear mode connectivity hypotheses (one of which is implicit in several previous works). Based on the new hypotheses, the authors propose the so-called Recycling method for OOD generalization, which takes one pretrained model, finetunes several copies of it on auxiliary tasks, puts a shared linear probe on each copy to finetune on a target task, and finally averages across the weights of these copies to get a final model. This is a simple methodology that achieves SOTA performance on DomainBed. Beyond achieving this SOTA result, Recycling gives a good way to make use of (i.e. “recycle”) separate finetunings of a single pretrained model that may already exist.
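
As a rough illustration of the last step only, the sketch below uniformly averages the parameters of several copies of a model. Everything in it is a stand-in of our own: the parameter dictionaries, the shapes, and the random perturbations that substitute for actual finetuning. The auxiliary-task finetuning and the shared linear probe from the paper are not reproduced, and the paper's exact averaging scheme may differ.

```python
import numpy as np

def average_weights(models):
    """Uniformly average parameter dicts that share the same keys and shapes."""
    keys = models[0].keys()
    return {k: np.mean([m[k] for m in models], axis=0) for k in keys}

rng = np.random.default_rng(0)

# Hypothetical "pretrained" parameters for a tiny linear model
pretrained = {"W": rng.normal(size=(3, 2)), "b": np.zeros(3)}

# Stand-ins for finetuned copies: pretrained weights plus small perturbations
copies = [
    {k: v + 0.01 * rng.normal(size=v.shape) for k, v in pretrained.items()}
    for _ in range(4)
]

recycled = average_weights(copies)
print({k: v.shape for k, v in recycled.items()})
```

Averaging in weight space like this only makes sense when the copies stay close enough to be linearly connected, which is what the linear mode connectivity hypotheses discussed in the paper are about.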

Tanmay: The paper “Planning Paths through Occlusions in Urban Environments” from CoRL 2022 caught my eye. The method uses image inpainting to fill in occluded portions of a lidar map, without explicitly telling the model which pixels to paint in. Furthermore, this work is unique because it takes the dynamics of the car itself into account, and also integrates path-planning so that a reliable trajectory can be computed in the inpainted map without occlusions. The paper also provides a novel dataset of similar occluded urban environments to enable improvements upon this work. While the paper uses a hybrid A* planner in its implementations, the pipeline is modular enough to use any other full-stack planning method too. The method outperforms existing generative models and path planners while maintaining a similar inference time to ensure that the model can be used in real-time. The paper however suffers from some limitations, including the assumptions that localization information is available and accurate, and that semantic segmentations of the lidar map can be generated in real-time.

Tweets

Closing Thoughts

Have something to say about this edition’s topics? Shoot us an email at editor@thegradient.pub and we will consider sharing the most interesting thoughts from readers in the next newsletter! For feedback, you can also reach Daniel directly at dbashir@hmc.edu or on Twitter. If you enjoyed this newsletter, consider donating to The Gradient via a Substack subscription, which helps keep this grad-student / volunteer-run project afloat. Thanks for reading the latest Update from the Gradient!

While I fell short in understanding all of the technical details in the Emergent Analogical Reasoning paper, seems like a big deal.

Good to see the authors’ substantial caveats at the end of the Discussion section. Human-like reasoning abilities, not a demonstration of machine analogical capability through anything resembling human evolutionary processes.

Tiktok is not owned by Tencent.. but ByteDance.