Gradient Update #23: DALL-E 2 and Grounding Language in Robotic Affordances

In which we discuss how DALL-E 2's potential uses are influenced by OpenAI's business model and an exciting method that allows language model outputs to be utilized by robots.

Welcome to the 23rd update from the Gradient! If you were referred by a friend, subscribe and follow us on Twitter!

News Highlight: DALL-E 2, the future of AI research, and OpenAI’s business model

Summary

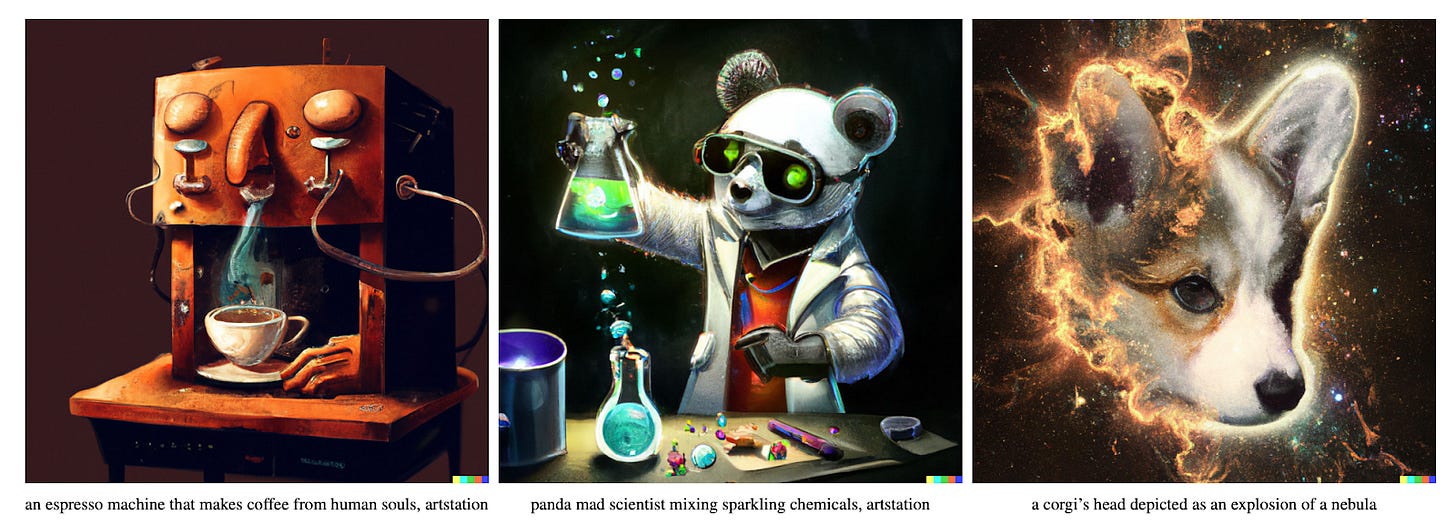

OpenAI’s DALL-E 2 is a new AI model that can generate images from text inputs. This work continues OpenAI’s research on advanced generative models and multimodal AI. When compared with the first DALL-E model’s, the images generated by DALL-E 2 are of higher resolution, significantly more realistic, and better match their input captions. Also, DALL-E 2 is capable of editing images based on natural language captions, and of generating variations of input images. DALL-E 2 makes use of the recent advances of CLIP and diffusion models in deep learning. Currently, DALL-E 2 is not openly accessible: the code and trained models have not been released, and very few people have API access to call the model.

Background

Generated images from DALL-E 2

DALL-E 2 leverages two recent advances in deep learning: CLIP and diffusion models. CLIP is an OpenAI innovation of 2021 that jointly embeds images and associated text. Diffusion models are generative models that sample images by gradually removing noise from an initial signal. Last year’s DALL-E model used CLIP to sample high quality images from input text, by choosing images that CLIP ranks as closest to the input text. CLIP is more deeply integrated into DALL-E 2, as we explain in the next paragraph. Also, DALL-E uses autoregressive modeling with a Transformer (much like in language modeling), while DALL-E 2 uses diffusion models.

(Top row) A Salvador Dali painting and the OpenAI logo. (Bottom row) Variations generated by encoding with CLIP then decoding with the diffusion decoder of DALL-E 2.

DALL-E 2 contains two components that build on CLIP: a decoder and a prior (see details in the research paper). Given an image embedding generated by CLIP, the decoder is a diffusion model that generates images that are likely to have produced this embedding. The prior, which may be an autoregressive or diffusion model, samples CLIP image embeddings given input text. Thus, DALL-E 2 generates an image from a text input by first using the prior to generate a CLIP embedding from the text input, and then using the decoder to generate an image from the CLIP embedding.

A photo of a Victorian house is edited by DALL-E 2 with the target caption “a photo of a modern house”.

Beyond generating images from prompts, DALL-E 2 is also capable of image manipulation. The decoder in DALL-E 2 allows for generating variations of input images, by first computing the CLIP embedding of the input image and then using the decoder to generate an image that is similar to the input image. Interpolation between two input images can be done by decoding interpolations of the CLIP embeddings of the two images. So-called “text diffs,” in which an input image is manipulated by a natural language prompt, can be computed by taking an input image and an associated caption (e.g. “a photo of a victorian house”), and decoding a CLIP text embedding that is an interpolation between the original caption and the new caption (e.g. “a photo of a modern house”).

Why does it matter?

DALL-E 2 significantly improves over DALL-E, both qualitatively (in terms of image quality) and quantitatively (in terms of FID score). Thus, people have considered the transformative effects that these models may have on society, such as potentially replacing creative labor for certain tasks like creating accompanying images for written articles. On the other hand, researchers like Gary Marcus and Melanie Mitchell have critiqued the limitations of DALL-E 2 on basic reasoning tasks that humans are easily able to do.

As with any significant AI engineering effort, DALL-E 2 raises questions about ethics. OpenAI has taken some steps to reduce potential harms, such as “removing the most explicit content from the training data”, and developing a content policy that prohibits generation of certain types of “violent, adult, or political content”. Also, OpenAI claims that it is partly limiting access to DALL-E 2 in order to give time for a small group of experts to study its “capabilities and limitations”.

While restricting access does prevent direct application of the model to cause harms, there are other trade-offs involved. At the moment, only the small subset of people that have access can study the properties of DALL-E 2 and influence its further development or deployment. Furthermore, OpenAI and its corporate partner Microsoft control future releases and applications of DALL-E 2, even though the interests of these companies may not align with everyone who could be affected by such transformative technology.

Editor Comments

Daniel: Just as with DALL-E when it was released, DALL-E 2 is an exciting step forward. A system that can generate photorealistic images and modify existing images based on text promises a number of applications and directions. I do think OpenAI’s choice not to release it publicly makes some sense, but is a tradeoff, especially given the fact that OpenAI is now a more commercialized entity. While limited access mitigates the risk of misuse, it also limits who can study and understand what biases and limitations a model may have. It’s unclear precisely what balance is best with these things, but I think a slightly more liberal policy of allowing many researchers (perhaps limited) access to study the model would be a good direction.

Derek: I was surprised at how much better DALL-E 2 is over previous models, and I wonder when open-source models will be able to catch up. The architectural choices are definitely interesting; instead of feeding everything as a sequence into a Transformer as in DALL-E, they use diffusion models. I am quite curious as to how models with the strength of DALL-E 2 will affect the creative economy.

Paper Highlight: Do As I Can, Not As I Say: Grounding Language in Robotic Affordances

Figure: An overview of SayCan’s methodology that uses Large Language Models and learned value functions to determine which language prompts are feasible in a given real world environment.

Summary

Google’s Robotics Lab and the Everyday Robot Project have developed a novel methodology — “SayCan” — to ground a large language model’s output in a real world environment, and enable a robot to utilize that output to process complex requests from a user. While language models contain a wealth of semantic knowledge, their outputs are not necessarily constrained to what may be feasible in a particular setting. In order to allow robots to effectively leverage large language models (LLMs), the authors propose a model to generate a sequence of instructions from an LLM based on a finite set of skills that the robot can then easily perform step-by-step. Their methodology also uses a novel affordance-based value function that scores the likelihood that a proposed instruction will be successfully completed in the robot’s observed environment, thereby grounding the language model in the real world. The methodology is evaluated in two kitchen spaces and shows promise in planning and executing both simple and abstract task instructions.

Overview

Natural Language Processing (NLP) has seen major advances recently, leading to the creation of LLMs which can respond to text queries, find images based on a prompt, and even generate new images based on a text input. Such models have cross-domain uses, especially in the field of robotics, where researchers have used LLM’s ‘zero-shot’ capabilities for multi-task robot learning, and learning tasks that the robot has not seen before.

However, an inherent problem that arises with using LLMs “out-of-the-box” is that their responses are not grounded in the real world. For instance, given a text query, “I spilled my drink, can you help?”, GPT-3 responds with “You should try using a vacuum cleaner”, FLAN responds with “I’m sorry, I didn’t mean to spill it” and LaMDA responds with “I’m sorry, do you need me to find a cleaner?”. All are valid responses, but might not help a robot decide what to do next. What if the environment does not contain a vacuum cleaner, or the robot does not know how to operate a vacuum cleaner?

The proposed model, SayCan, attempts to solve this problem through a two-step architecture. Firstly, the space of the robot’s actions are constrained to picking and placing 15 specific objects in 5 possible locations. This creates a finite set of “skills” that the robot can execute, such as “Pick up an apple”, or “Bring tea to the kitchen”. The LLM is then used to score how likely each skill is to make progress towards the input query. For instance, with a text input of “How would you bring water to the bedroom?”, of all possible skills involving picking, placing or carrying the 15 objects, the skill that would lead to the most success would be “1. Find a water bottle”, followed by “2. Bring the bottle to the bedroom”, and lastly “3. Place the bottle on the bed.” The LLM’s internal distribution is used to rank each skill at each step, and choose the skill that has the highest probability of completing the task. This is the “Say” part of the “SayCan” architecture.

Secondly, at each step, the robot must also decide if it is feasible to execute a skill in its current environment. For instance, given the prompt “How would you help me if I’m hungry?”, two valid first steps could be “1. Find an apple”, or “1. Find a banana”. However, if the robot can only see an apple in its environment and no banana, then finding a banana should be impossible. This real-world grounding of output sequences is done by learning a value function that estimates the likelihood of the skill being executed, given the robot’s current state. This constitutes the “Can” part of the architecture.

Combining these two modules, SayCan is highly effective at executing simple and complex human instructions in a real world environment. The authors tested the methodology in a real world setting in two kitchen environments and evaluated using two metrics: “Planning-success rate” (% of tasks that were correctly planned by the LLM module), and the “Execution-success rate” (% of tasks that were successfully executed by the robot using the LLM-generated plan). Over a set of 101 tasks given to a fleet of mobile robots, the authors saw a planning-success-rate of 70%, and an execution-success-rate of 61% . While the robots performed the best when given structured language inputs, they had high success rates given crowd-sourced inputs too.

Why does it matter?

Enabling robots to work with humans in real world environments is an ongoing challenge, owing to those environments’ highly unstructured and dynamic nature. Using language as an ontology to bridge the gap between complex human instructions and specific skills a robot can execute is a truly novel idea. This work not only allows semantic knowledge from LLMs to be applied to robotics, but also inherently improves LLMs by enabling them to generate more meaningful outputs. The proposed architecture is also highly effective at creating a structured plan even for complex queries. For instance, when asked, “I just worked out, can you bring me a drink and a snack to recover?”, the model chooses to bring the user an apple and a bottle of water, instead of soda or chips, which are also present in the environment. This shows that the LLM scored healthy options higher when asked for a post-workout meal, showing LLM’s promising ability to produce meaningful outputs that can be used by a robot for downstream tasks. The open-source project is indubitably the first of its kind, and opens up the door for scalable robot learning in a real-world setting.

Editor Comments

Tanmay: The intersection of robotics and NLP is rapidly evolving and it's exciting to see this research. I am interested to see how such methodologies can generalize though. Since this approach essentially iterates over all possible skills to choose the “best” skill, I want to see how future iterations will scale this method as the set of skills becomes larger and the time complexity increases. Additionally, something I found to be unique in this study was to not use the language model to generate outputs, but to use the semantic knowledge it learned to find relationships between two text inputs. I am excited to see how researchers continue to use language models in similarly novel applications.

Daniel: The thing that really excites me about this work is the grounding aspect. Language models trained on a stupid amount of text on the internet can come up with anything to say–their utterances might make sense or might be totally detached from reality. For many of their potential applications, LMs will likely need to have a sense of what is possible in the real world. To that end, finding promising ways to constrain their outputs and make those outputs “actionable” will enable progress. I’m excited to see what steps forward we can take with work like SayCan, and, like Tanmay, I’m curious to see how larger action spaces will manifest in restraining LM outputs.

New from the Gradient

AI Startups and the Hunt for Tech Talent in Vietnam

Other Things That Caught Our Eyes

News

GM Cruise autonomous taxi pulled over by police in San Francisco without humans, ‘bolts’ off (U: Cruise responds) “Over the weekend, a GM Cruise converted Chevy Bolt without a driver was pulled over by San Francisco Police. In an unexpected turn, the car “bolted” to a safe spot. Cruise responded. The original Instagram poster noted that this occurred in the Richmond District of San Francisco last week.”

The First Learning on Graphs Conference (LoG) “Graph Machine Learning has become large enough of a field to deserve its own standalone event: the Learning on Graphs Conference (LoG). The inaugural event will take place in December 2022 and will be fully virtual and free to attend.”

Now You Can Search On Google With Text And Images At The Same Time Using Google’s New AI-Powered MultiSearch Feature “Google began rolling out a new Search tool on Thursday that will allow users to search for information using both text and photos at the same time.”

Actors launch campaign against AI 'show stealers' “Actors' livelihoods are at risk from artificial intelligence (AI) unless the law changes, a union warns. Equity, the performing arts workers union, has launched a new campaign, ‘Stop AI Stealing the Show’.”

Startups Join AI Acquisition Rush “Venture-backed companies spent $8 billion acquiring an estimated 72 artificial intelligence developers last year”

Papers

Exhaustive Survey of Rickrolling in Academic Literature Rickrolling is an Internet cultural phenomenon born in the mid 2000s. Originally confined to Internet fora, it has spread to other channels and media. In this paper, we hypothesize that rickrolling has reached the formal academic world. We design and conduct a systematic experiment to survey rickrolling in the academic literature. As of March 2022, there are \nbrealrickcoll academic documents intentionally rickrolling the reader. Rickrolling happens in footnotes, code listings, references. We believe that rickrolling in academia proves inspiration and facetiousness, which is healthy for good science. This original research suggests areas of improvement for academic search engines and calls for more investigations about academic pranks and humor.

A Modern Self-Referential Weight Matrix That Learns to Modify Itself The [weight matrices]... of many traditional NNs are learned through gradient descent in some error function, then remain fixed. The WM of a self-referential NN, however, can keep rapidly modifying all of itself during runtime. In principle, such NNs can meta-learn to learn… and so on, in the sense of recursive self-improvement… We propose a scalable self-referential WM (SRWM) that uses outer products and the delta update rule to modify itself. We evaluate our SRWM in supervised few-shot learning and in multi-task reinforcement learning with procedurally generated game environments. Our experiments demonstrate both practical applicability and competitive performance of the proposed SRWM.

Semantic Exploration from Language Abstractions and Pretrained Representations Continuous first-person 3D environments pose unique exploration challenges to reinforcement learning (RL) agents because of their high-dimensional state and action spaces. These challenges can be ameliorated by using semantically meaningful state abstractions to define novelty for exploration… we show that vision-language representations, when pretrained on image captioning datasets sampled from the internet, can drive meaningful, task-relevant exploration and improve performance on 3D simulated environments. We also characterize why and how language provides useful abstractions for exploration… We demonstrate the benefits of our approach in two very different task domains… as well as two popular deep RL algorithms: Impala and R2D2.

Closing Thoughts

Have something to say about this edition’s topics? Shoot us an email at gradientpub@gmail.com and we will consider sharing the most interesting thoughts from readers to share in the next newsletter! If you enjoyed this piece, consider donating to The Gradient via a Substack subscription, which helps keep this grad-student / volunteer-run project afloat. Thanks for reading the latest Update from the Gradient!