Gradient Update #3: New in Reinforcement Learning - Chip Design and Transformers

as well as other news, papers, and tweets in the AI world!

Welcome to the second monthly Update from the Gradient! If you were referred by a friend, we’d love to see you subscribe and follow us on Twitter!

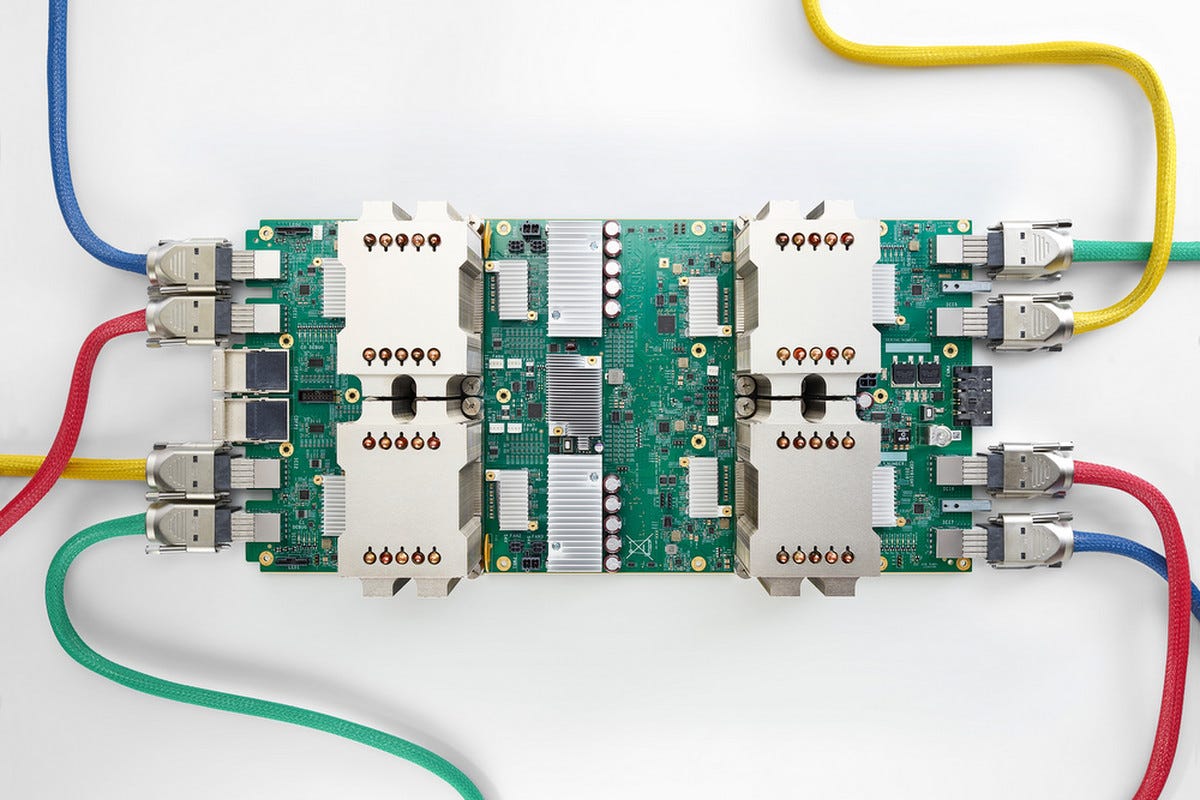

News Highlight: AI for Chip Design

This edition’s news article is “Google is using AI to design its next generation of AI chips more quickly than humans can.”

Summary In their recent paper in Nature, A graph placement methodology for fast chip design (which is a follow up to a prior paper, Chip Placement with Deep Reinforcement Learning), Google researchers have shown that reinforcement learning can for the first time outperform humans at the task of chip floorplanning. In under six hours, their method generates chip floorplans that are superior or comparable (in terms of timing, total area, total power, and others) to ones produced by humans, even though humans took months to design the same type of chip. Both the human design and the design by their method outperformed a baseline approach for automated floorplanning in terms of timing by a wide margin. Beyond experimental results, the paper also notes that the method has already been used to generate the design of Google’s next generation of Tensor Processing Units (TPUs).

Background As summarized in the paper, “Chip floorplanning involves placing [modules such as a memory subsystem or compute unit] onto chip canvases (two-dimensional grids) so that performance metrics (for example, power consumption, timing, area and wirelength) are optimized, while adhering to hard constraints on density and routing congestion.” This problem can have an enormous state space, far larger than Go and other problems that have been addressed with reinforcement learning in recent years.
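To make the shape of this optimization problem concrete, here is a minimal toy sketch in Python. It is not the paper's method or reward function: it assumes a simplified setting in which each module occupies a single grid cell, wirelength is approximated by half-perimeter wirelength (a common proxy in the placement literature), and the only hard constraint is a cap on modules per cell. The module names, nets, and the `max_per_cell` parameter are made up for illustration.

```python
from collections import Counter


def hpwl(placement, nets):
    """Half-perimeter wirelength: for each net, add the width plus the
    height of the bounding box around the modules it connects."""
    total = 0
    for net in nets:
        xs = [placement[module][0] for module in net]
        ys = [placement[module][1] for module in net]
        total += (max(xs) - min(xs)) + (max(ys) - min(ys))
    return total


def violates_density(placement, max_per_cell=1):
    """Toy hard constraint (an assumption for this sketch): no grid cell
    may hold more than max_per_cell modules."""
    counts = Counter(placement.values())
    return any(count > max_per_cell for count in counts.values())


# Hypothetical modules placed at (x, y) cells on a small grid.
placement = {"cpu": (0, 0), "mem": (3, 1), "io": (1, 4)}
# Hypothetical nets: each tuple lists the modules one wire group connects.
nets = [("cpu", "mem"), ("cpu", "mem", "io")]

print("wirelength proxy:", hpwl(placement, nets))
print("density violated:", violates_density(placement))
```

A placement method, whether a human, a classical optimizer, or a reinforcement learning agent, searches over `placement` to drive a cost like this down while keeping the constraints satisfied. Real floorplanning adds module sizes, timing, power, and routing congestion on top of this.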

Why does it matter? This problem has been studied for decades, and this result heralds a major advance that could revolutionize the process. While other automated approaches to chip design exist, they are generally far outmatched by human designers, so Google’s demonstration that this is not the case on their TPU chip is an enormous achievement. Further, they note that the approach taken for training is specifically designed to enable the trained agent to generalize to different chips, meaning that it should be possible to apply this technique in other contexts. Given that computers are already enormously complex and only getting more so, the potential impact of specialists using powerful automated design tools is enormous.

What do our editors think?

Hugh: There is a hypothesis that is gaining steam in the AI world that the first tasks that will be automated away by AI will not necessarily be the “lower skill” jobs that we traditionally associate with automation but instead very high skill jobs (radiology, chip design, accounting, etc.) that are either neatly constrained in some environment and/or routine. This is further evidence in support of that. I also think there is a very nice aesthetic appeal to having AI algorithms improve the very hardware they are run on.

Andrey: I have long wondered when deep reinforcement learning will have a real world impact comparable to computer vision or NLP. While there are examples such as DeepMind’s datacenter cooling optimization or warehouse automation, these seem relatively rare. So this advance seems huge to me, given the importance and complexity of the task, and I hope it means we’ll see more similar results leveraging reinforcement learning going forward.

Paper Highlight: Decision and Trajectory Transformers

This edition’s papers are Decision Transformer: Reinforcement Learning via Sequence Modeling and Reinforcement Learning as One Big Sequence Modeling Problem.

Summary Two separate groups from Berkeley’s AI Lab proposed similar approaches to revise the way we think about reinforcement learning, and announced their work just a day apart from each other.

What is the new proposal? Instead of using standard reinforcement learning algorithms such as TD learning, Decision Transformer trains a language model to directly predict the sequence of experiences, conditioned on the return to be achieved. Then, to generate the actual policy, they query the “language model” for a sequence with high rewards and play according to that. According to the authors of Reinforcement Learning as One Big Sequence Modeling Problem, who graciously acknowledged the concurrent work in their tweets and on their website, the main difference between them is that “at a high-level, ours is more model-based in spirit and theirs is more model-free, which allows us to evaluate Transformers as long-horizon dynamics models (e.g., in the humanoid predictions above) and allows them to evaluate their policies in image-based environments (e.g., Atari)” (quote from website).

These ideas have been tried before in the literature, but not with nearly as much success. Prior works have also sought to change the problem of RL into one of supervised learning, most notably Training Agents using Upside-Down Reinforcement Learning, Acting without Rewards, and Reward-Conditioned Policies. According to Decision Transformer: Reinforcement Learning via Sequence Modeling, “A key difference in our work is the shift of motivation to sequence modeling rather than supervised learning: while the practical methods differ primarily in the context length and architecture, sequence modeling enables behavior modeling even without access to the reward, in a similar style to language or images, and is known to scale well.”
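As a rough illustration of what "reinforcement learning as sequence modeling" means in practice, the sketch below shows one way to turn a recorded trajectory into a token sequence of the kind Decision Transformer models: each timestep contributes a return-to-go (the sum of rewards from that step onward), a state, and an action. This is a simplified sketch, not the authors' code; the function names and the tuple-based token format are assumptions made for readability, and the actual model embeds these values and feeds them to a transformer.

```python
def returns_to_go(rewards):
    """Return-to-go at step t is the sum of rewards from t to the end."""
    rtg = []
    running = 0.0
    for reward in reversed(rewards):
        running += reward
        rtg.append(running)
    rtg.reverse()
    return rtg


def to_token_sequence(states, actions, rewards):
    """Interleave (return-to-go, state, action) for every timestep.
    The labeled tuples are a hypothetical format for this sketch only."""
    sequence = []
    for g, s, a in zip(returns_to_go(rewards), states, actions):
        sequence.extend([("return_to_go", g), ("state", s), ("action", a)])
    return sequence


# A made-up three-step trajectory.
states = ["s0", "s1", "s2"]
actions = ["left", "right", "left"]
rewards = [1.0, 0.0, 2.0]

for token in to_token_sequence(states, actions, rewards):
    print(token)
```

At test time, the idea is to start the sequence with a high target return-to-go and the current state, ask the sequence model for the next action, and then subtract the reward actually received from the target before the next step.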

Why does it matter? This is a novel and powerful way of approaching reinforcement learning that brings advances from language modelling into a completely different domain. As described in Gradient Update #1, transformers have shattered not only NLP benchmarks, but also “shown improvements in other domains like music or vision.” Soon they may add shifting the dominant approach to reinforcement learning to their list of accomplishments.

What do our editors think?

Hugh: I’m actually really impressed that a method like this works and doesn’t just find some adversarial example case in the language model that doesn’t actually produce high rewards. Some caveats include that they only analyzed some of the simplest games from the Atari and OpenAI Gym benchmarks and that the approach might face potential scaling issues for very long horizon / complex games where representing the entire trajectory might be intractable even for deep nets. Nevertheless, I think that this is an extremely promising new direction and if it ends up scaling to more complex games, it has the potential to totally shift the way we think about RL.

Andrey: Although we’ve seen impressive progress in terms of deep RL performance on games and robotic tasks, the problem of exploring to find reward and then doing temporal difference learning on an ever-shifting dataset makes it inherently hard for deep RL algorithms to work consistently and without a huge amount of data. These works are not only very cool as they show a possible way around these issues, but also because it is refreshing to see such novel approaches that question the basic approach to RL most published work is based on. Hopefully, much future work exploring this paradigm will come about.

Guest thoughts

Kevin Lu (joint first author of Decision Transformer): Transformers have been extremely successful in language and vision, helping to achieve state-of-the-art in both fields (and others, such as protein modeling). However, past works investigating transformers for RL have run up into significant barriers, namely high variance from nonstationary objectives due to iterative TD backups/value function errors, and a scaling issue where RL algorithms degrade, rather than improve, with bigger architectures. A big reason why this work is exciting is because Decision Transformer proposes a path for RL that is stable and scales well, promising the ability to use the same successful techniques such as large-scale pretraining, long-horizon reasoning/credit assignment, and scaling model sizes.

Furthermore, our work supports the notion that transformers and self-attention can serve as a universal approach towards deep learning. An extra side effect of this is that those who were previously familiar with transformers, but not familiar with RL, now have a much lower barrier to entry to explore RL regimes due to similarity between the frameworks. We hope this can additionally help to mature the field and others familiar with sequence modeling can use previously distant techniques to improve performance in RL settings.

Michael Janner (first author of Trajectory Transformer): When we began working on the Trajectory Transformer, we were focused simply on building a more reliable long-horizon predictive model. It took us some time to realize that the Transformer could replace not only the usual single-step dynamics model, but also many other parts of the decision-making algorithm, such as action constraints and terminal value prediction. The result is a “model-based reinforcement learning algorithm” in name only; it is essentially all language modeling! It is also rather exciting to see the Decision and Trajectory Transformer papers come to similar conclusions despite their different starting points. This general recipe seems to be surprisingly effective, and is promising in that it might provide for a more scalable class of decision-making algorithms.

Other News That Caught Our Eyes

Preferred Networks Becoming Japan's Industrial AI Powerhouse "There's an AI unicorn startup you might not have even heard of. That is, if you live in the Western hemisphere."

Drones, robots, and AI are changing the face of solar and wind farm inspections, literally "Robots and drones with deep learning computer vision and advanced imaging cameras are changing the landscape of inspections for renewable energy sites and associated equipment."

Tech Companies Are Training AI to Read Your Lips "First came facial recognition. Now, an early form of lip-reading AI is being deployed in hospitals, power plants, public transportation, and more. A patient sits in a hospital bed, a bandage covering his neck with a small opening for the tracheostomy tube that supplies him with oxygen."

Study shows physicians' reluctance to use machine-learning for prostate cancer treatment planning "A study shows that a machine-learning generated treatment plan for patients with prostate cancer, while accurate, was less likely to be used by physicians in practice. Advancements in machine-learning (ML) algorithms in medicine have demonstrated that such systems can be as accurate as humans."

The Efforts to Make Text-Based AI Less Racist and Terrible "Language models like GPT-3 can write poetry, but they often amplify negative stereotypes. Researchers are trying different approaches to address the problem."

Another Day on Arxiv

Multi-head or Single-head? An Empirical Comparison for Transformer Training “Multi-head attention plays a crucial role in the recent success of Transformer models, which leads to consistent performance improvements over conventional attention in various applications. The popular belief is that this effectiveness stems from the ability of jointly attending multiple positions. In this paper, we first demonstrate that jointly attending multiple positions is not a unique feature of multi-head attention, as multi-layer single-head attention also attends multiple positions and is more effective. Then, we suggest the main advantage of the multi-head attention is the training stability, since it has less number of layers than the single-head attention, when attending the same number of positions.”

Partial success in closing the gap between human and machine vision “A few years ago, the first CNN surpassed human performance on ImageNet. However, it soon became clear that machines lack robustness on more challenging test cases, a major obstacle towards deploying machines "in the wild" and towards obtaining better computational models of human visual perception. Here we ask: Are we making progress in closing the gap between human and machine vision? .... Our results give reason for cautious optimism: While there is still much room for improvement, the behavioural difference between human and machine vision is narrowing.”

Pre-Trained Models: Past, Present and Future “Large-scale pre-trained models (PTMs) such as BERT and GPT have recently achieved great success and become a milestone in the field of artificial intelligence (AI). … It is now the consensus of the AI community to adopt PTMs as backbone for downstream tasks rather than learning models from scratch. In this paper, we take a deep look into the history of pre-training, especially its special relation with transfer learning and self-supervised learning, to reveal the crucial position of PTMs in the AI development spectrum. Further, we comprehensively review the latest breakthroughs of PTMs.”

VOLO: Vision Outlooker for Visual Recognition “In this work, we aim to close the performance gap and demonstrate that attention-based models are able to outperform CNNs… we introduce a novel outlook attention and present a simple and general architecture, termed Vision Outlooker(VOLO)... the outlook attention aims to efficiently encode finer-level features and contexts into tokens, which are shown to be critical for recognition performance but largely ignored by the self-attention. Experiments show that our VOLO achieves 87.1% top-1 accuracy on ImageNet-1K classification, being the first model exceeding 87% accuracy on this competitive benchmark, without using any extra training data.”

Distributed Deep Learning in Open Collaborations “Modern deep learning applications require increasingly more compute to train state-of-the-art models. To address this demand, large corporations and institutions use dedicated High-Performance Computing clusters, whose construction and maintenance are both environmentally costly and well beyond the budget of most organizations. As a result, some research directions become the exclusive domain of a few large industrial and even fewer academic actors. To alleviate this disparity, smaller groups may pool their computational resources and run collaborative experiments that benefit all participants. This paradigm, known as grid- or volunteer computing, has seen successful applications in numerous scientific areas... In this work, we carefully analyze [the constraints of this paradigm] and propose a novel algorithmic framework designed specifically for collaborative training. We demonstrate the effectiveness of our approach for SwAV and ALBERT pretraining in realistic conditions and achieve performance comparable to traditional setups at a fraction of the cost.”

Closing Thoughts

If you enjoyed this piece, give us a shoutout on Twitter. Have something to say about AI for AI chip design or sequence modeling for RL? Shoot us an email at gradientpub@gmail.com and we’ll select the most interesting thoughts from readers to share in the next newsletter! Finally, the Gradient is an entirely volunteer-run nonprofit, so we would appreciate any way you can support us!

For the next few months, the Update will be free for everyone. Afterwards, we will consider posting these updates as a perk for paid supporters of the Gradient! Of course, guest articles published on the Gradient will always be available for all readers, regardless of subscription status.
