Update #53: Autonomous Robotaxi Issues and Scaling MLPs

SF city leaders warn of the dangers of autonomous vehicles, and experiments in scaling multi-layer perceptrons reveal insights into inductive bias.

Welcome to the 53rd update from the Gradient! If you’re new and like what you see, subscribe and follow us on Twitter :) You’ll need to view this post on Substack to see the full newsletter!

Want to write with us? Send a pitch using this form.

News Highlight: As autonomous robotaxis look to expand through California, San Francisco's Fire Chief insists autonomous vehicles are not ready for prime time

Summary

In California, two government agencies are tasked with regulating autonomous vehicles. The Department of Motor Vehicles (DMV) issues permits and collects data on collisions, and the California Public Utilities Commission regulates commercial passenger services such as buses, taxis, and limos. On June 29th, the Utilities Commission voted on a resolution which clarifies that “issues such as traffic flow and interference with emergency workers can’t be used to deny expansion permits” and approves Waymo’s phase 1 autonomous vehicle passenger service throughout the state. The vote came on the heels of a recent Los Angeles Times interview with San Francisco's Fire Chief Jeanine Nicholson, in which she, alongside other city leaders throughout the state, warned of the dangers that autonomous vehicles pose to their communities and concluded that “they’re not ready for prime time.”

Background

California’s Department of Motor Vehicles (DMV) has issued autonomous vehicle testing permits to 41 entities, 7 of which have engaged in driverless tests throughout the state over the past 4 years. As the number of vehicles on the road increases, so too does the amount of data collected by regulatory agencies. These agencies track and report statistics on robotaxi collisions, “but they don’t track data on traffic flow issues, such as street blockages or interference with firetrucks.” According to the San Francisco Fire Department, there have been 39 incidents of robotaxis interfering with department duties since the start of the year. Those incidents include parking on top of a fire hose during an active fire scene and blocking drivers from dispatching to emergencies, including two different medical emergencies, one of them a mass shooting. The incidents tracked by the Fire Department represent only a fraction of the true impact on safety services, because other government agencies heavily involved in public safety, such as the Department of Emergency Medical Services, the San Francisco Police Department, and the Municipal Transportation Agency, did not track and report incidents.

Why does it matter?

In the words of Waymo, Google’s former self-driving car project, autonomous vehicles are designed to “make it safe and easy for people & things to get around.” With that lofty goal in mind, it is confusing that both the state and Waymo would disregard such relevant data when making safety decisions.

Choosing to ignore relevant safety data compounds existing concerns about current reporting, which include examples of deceptive reporting practices, industry-sponsored lawsuits aimed at restricting publicly available crash data, and the sparsity of the data that is currently reported. This is particularly troubling given the safety problems that can already be parsed from the data that is reported.

According to the California DMV, there have been 28 accidents with Cruise vehicles, 24 accidents with Waymo vehicles, and 11 accidents with Zoox vehicles in 2023. While these counts are small in absolute terms, a different and more dangerous picture emerges once we take into account the small number of vehicles estimated to be on the road (388 Cruise, 688 Waymo, 142 Zoox). By this estimate, 7% of Cruise vehicles, 3% of Waymo vehicles, and 17% of Zoox vehicles have been in one or more incidents this year. Non-autonomous vehicles, by comparison, have an accident rate of 1.8% (5 million accidents per year / 278 million vehicles). Using this set of metrics, the “best” autonomous vehicles are involved in incidents at a rate of almost 2x that of non-autonomous vehicles, while the worst have accidents at a rate 8.5x higher.
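The per-vehicle comparison above is simple enough to check directly. The sketch below recomputes the rates from the figures quoted in this section, taken at face value; the function and variable names are illustrative and not from any official dataset. With the counts as printed, Zoox comes out near 8% rather than 17%, so the inputs behind the published Zoox percentage may differ from the ones quoted here.

```python
# Figures quoted in the text: 2023 accident counts reported to the California DMV
# and the estimated number of vehicles each company has on the road.
accidents = {"Cruise": 28, "Waymo": 24, "Zoox": 11}
fleet_size = {"Cruise": 388, "Waymo": 688, "Zoox": 142}

# Baseline quoted in the text: 5 million accidents per year / 278 million vehicles.
baseline_rate = 5_000_000 / 278_000_000


def accidents_per_vehicle(company: str) -> float:
    """Reported accidents divided by estimated fleet size."""
    return accidents[company] / fleet_size[company]


def ratio_to_baseline(company: str) -> float:
    """How many times higher the per-vehicle rate is than the baseline."""
    return accidents_per_vehicle(company) / baseline_rate


if __name__ == "__main__":
    print(f"baseline: {baseline_rate:.1%} accidents per vehicle")
    for company in accidents:
        rate = accidents_per_vehicle(company)
        print(f"{company}: {rate:.1%} per vehicle, {ratio_to_baseline(company):.1f}x baseline")
```

Accidents per vehicle is an upper bound on the share of vehicles involved in one or more incidents, since a single vehicle can account for several reports. The metric is also per vehicle, not per mile driven.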

Additionally, these numbers do not take into account the knock-on safety effects that go uncounted by the DMV, such as autonomous vehicles blocking first responders, running over dogs, engaging in hit-and-runs, and spontaneously combusting (to their credit, self-driving cars are not unique in their ability to spontaneously combust).

Editor Comments

Justin: As someone who drives less than 10 miles a month in one of the most car-worshiping parts of the world (Los Angeles), I would love to live in a world with easier access to safe and reliable transportation. And while the vision these companies present for autonomous driving is an improvement over today, the reality is that they have quite a large gap to close before their vehicles can be widely and safely deployed. It is extremely worrying to me that we are moving toward collecting fewer data points with which to fully evaluate the safety of autonomous vehicles.

Research Highlight: Scaling MLPs: A Tale of Inductive Bias

Summary

Multi-layer Perceptrons (MLPs) are the most fundamental type of neural network, so they play an important role in many machine learning systems and are the most theoretically studied type of neural network. A new work from researchers at ETH Zurich pushes the limits of pure MLPs, and shows that scaling them up allows much better performance than expected from MLPs in the past. These findings may have important implications for the study of inductive biases, the theory of deep learning, and neural scaling laws.

Overview

Many neural network architectures have been developed for different tasks, but the simplest form is the MLP, which consists of dense linear layers composed with elementwise nonlinearities. MLPs are important for several reasons: they are used in certain settings such as implicit neural representations and processing tabular data, they are used as subcomponents within state-of-the-art models such as convolutional neural networks, graph neural networks, and Transformers, and they are widely studied in theoretical works that aim to understand deep learning more generally.

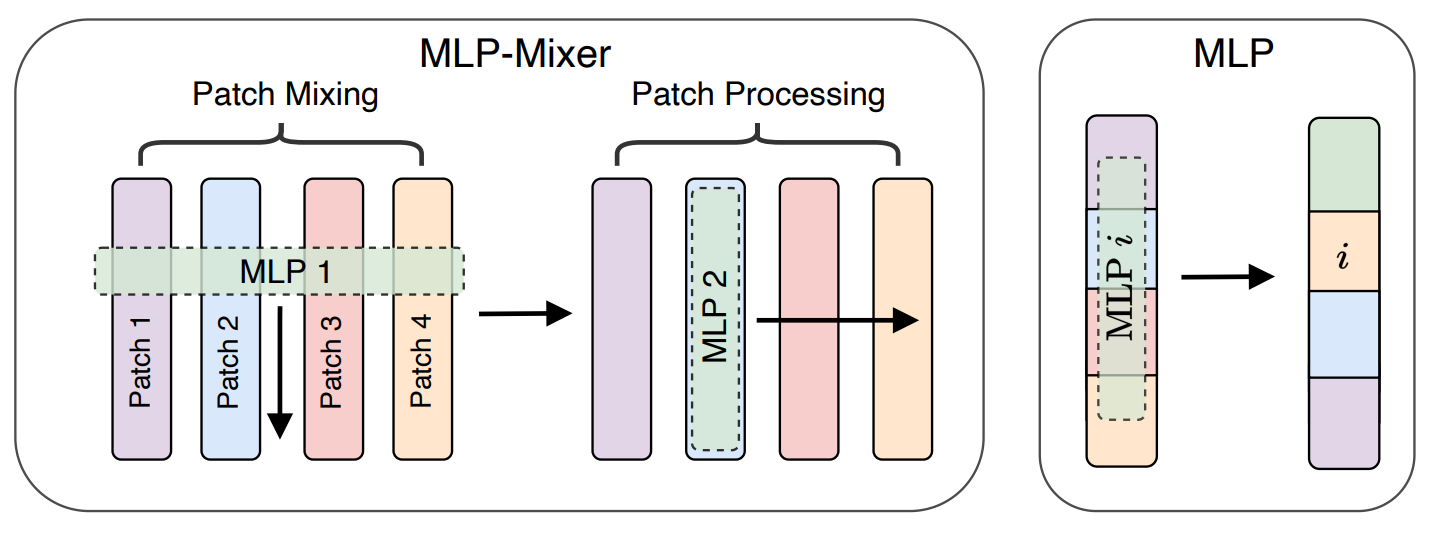

The current work scales MLPs for widely studied image classification tasks. The pure MLPs considered in this work differ significantly from MLP-based models for vision such as MLP-Mixer and gMLP (see figure above). The latter two models use MLPs in a specific way that encodes visual inductive biases by decomposing linear maps into channel mixing maps and patch mixing maps. In contrast, pure MLPs flatten entire images into numerical vectors, which are then processed by general dense linear layers.
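To make the "flatten, then dense layers" idea concrete, here is a minimal sketch of a pure MLP forward pass in plain Python. It is an illustration, not the authors' implementation: the ReLU nonlinearity, the layer sizes, and all function names are assumptions chosen for readability.

```python
import random


def flatten(image):
    """Flatten an H x W x C nested list into one vector, discarding spatial structure."""
    return [value for row in image for pixel in row for value in pixel]


def dense(x, weights, bias):
    """Dense linear layer y = W x + b, with W stored as a list of rows."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(x):
    """Elementwise nonlinearity (ReLU is an assumption; any elementwise function works)."""
    return [max(0.0, v) for v in x]


def init_dense(n_in, n_out, rng):
    """Random Gaussian weights scaled by 1/sqrt(n_in), zero biases."""
    scale = (1.0 / n_in) ** 0.5
    weights = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def mlp_forward(image, layers):
    """Flatten the image, apply dense + ReLU for hidden layers, then a final dense layer."""
    x = flatten(image)
    for weights, bias in layers[:-1]:
        x = relu(dense(x, weights, bias))
    weights, bias = layers[-1]
    return dense(x, weights, bias)  # unnormalized class scores


# Hypothetical toy sizes: a 4x4 RGB image, two hidden layers of width 16, 10 classes.
rng = random.Random(0)
height, width, channels, hidden, num_classes = 4, 4, 3, 16, 10
layers = [
    init_dense(height * width * channels, hidden, rng),
    init_dense(hidden, hidden, rng),
    init_dense(hidden, num_classes, rng),
]
image = [[[rng.random() for _ in range(channels)] for _ in range(width)] for _ in range(height)]
logits = mlp_forward(image, layers)
```

Because `flatten` runs before any learned layer, the first dense layer sees every pixel as an unrelated input. Nothing in the model tells it which pixels are neighbors, and that is the sense in which a pure MLP lacks visual inductive bias.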

The authors consider isotropic MLPs in which every hidden layer has the same dimension and layernorm is added after each layer of activations. They also experiment with inverted bottleneck MLPs, which expand and contract the dimension of each layer and include residual connections. The inverted bottleneck MLPs generally perform much better than the isotropic MLPs.
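The two layer types can be sketched as follows. This is a rough illustration under stated assumptions rather than the paper's exact architecture: the ReLU activation, the expansion factor of 4, the placement of layer normalization before the expansion inside the bottleneck block, and all names are hypothetical choices.

```python
import math
import random


def dense(x, weights, bias):
    """Dense linear layer y = W x + b, with W stored as a list of rows."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(x):
    return [max(0.0, v) for v in x]


def layer_norm(x, eps=1e-5):
    """Normalize a vector to zero mean and (approximately) unit variance."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def init_dense(n_in, n_out, rng):
    scale = (1.0 / n_in) ** 0.5
    weights = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def isotropic_layer(x, params):
    """Same width in and out; layer norm applied after the activation."""
    weights, bias = params
    return layer_norm(relu(dense(x, weights, bias)))


def inverted_bottleneck_block(x, expand, contract):
    """Expand the dimension, apply the nonlinearity, contract back, add the residual."""
    h = relu(dense(layer_norm(x), *expand))   # width -> 4 * width (assumed factor)
    h = dense(h, *contract)                   # 4 * width -> width
    return [a + b for a, b in zip(x, h)]      # residual connection


rng = random.Random(0)
width = 8
x = [rng.gauss(0.0, 1.0) for _ in range(width)]
iso_out = isotropic_layer(x, init_dense(width, width, rng))
expand = init_dense(width, 4 * width, rng)
contract = init_dense(4 * width, width, rng)
block_out = inverted_bottleneck_block(x, expand, contract)
```

The residual connection means the block only has to learn a correction to its input. If the contracting layer outputs zeros, the block reduces to the identity.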

Experiments on standard image classification datasets show that MLPs can perform quite well, despite their lack of inductive biases. In particular, MLPs perform very well at transfer learning: when pretrained on ImageNet21k, large inverted bottleneck MLPs can match or exceed the performance of ResNet18s (except on ImageNet itself). Moreover, as with other modern deep learning models, the performance of inverted bottleneck MLPs scales predictably with model size and dataset size. Interestingly, these scaling laws show that MLP performance is more limited by dataset size than by model size, which may be because MLPs have fewer inductive biases and hence require more data to learn well.

Why does it matter?

Scaling laws and gains from scaling model and dataset sizes are important to study, as larger versions of today’s models may have sufficient power to do many useful tasks. This work shows that MLP performance also follows scaling laws, though MLPs are more data-hungry than other deep learning models. Importantly, MLPs are extremely runtime efficient to train: their forward and backward passes are quick and, as shown in this work, they improve when they are trained with very large batch sizes. Thus, MLPs can be used to efficiently study pretraining and large dataset training.

The authors’ observation that MLPs perform well with very large batch sizes is particularly interesting. Convolutional neural networks generally perform better with smaller batch sizes. Using MLPs as a proxy to study CNNs (for instance, in theoretical works) may therefore be faulty in this sense, as the implicit biases or other properties of the optimization process may differ significantly between the two architectures.

That large-scale MLPs can do well is even more evidence that inductive biases may be significantly less important than model and data scale in many settings. This finding aligns with the finding that at a large enough scale, Vision Transformers outperform CNNs in many tasks, even though CNNs have more visual inductive biases built in.

Editor Comments

Daniel: This work is really interesting to consider in light of older results like double descent—if you’re interested in the study of inductive biases in models, you should absolutely be familiar with that line of work (here is where I also throw in the insufferable plug for my conversation w/ Preetum Nakkiran who has authored important work on these questions). The interpretive lens of thinking about stochastic gradient descent’s inductive biases—that (in the double descent regime) it selects the most simple model with perfect training accuracy past an interpolation threshold—might offer some interesting insight in thinking about MLPs as well.

Derek: I was excited by this work as soon as I saw the title. Even though I work on developing highly structured neural network architectures, I often reach for MLPs to use as crucial subcomponents in larger models. Also, I have worked on reducing inductive biases in several settings — especially through replacing or augmenting architectural structure with structure in positional encodings, which have been very useful for MLPs in implicit neural representations. I quite like the authors’ insights on batch size and implications for deep learning theory, and I would love to see more empirical deep-dives on crucial parts of modern deep learning.

New from the Gradient

Jeremie Harris: Realistic AI Alignment and Policy

Antoine Blondeau: Alpha Intelligence Capital and Investing in AI

Other Things That Caught Our Eyes

News

Chuck Schumer Joins Crowd Clamoring for AI Regulations “Senate Majority Leader Chuck Schumer (D., N.Y.) launched an effort Wednesday to write new rules for the emerging realm of artificial intelligence, aiming to accelerate U.S. innovation while staving off a dystopian future.”

Wimbledon to introduce AI-powered commentary to coverage this year “Game, set and chatbot: Wimbledon is introducing artificial intelligence-powered commentary to its coverage this year. The All England Club has teamed up with tech group IBM to offer AI-generated audio commentary and captions in its online highlights videos.”

17 fatalities, 736 crashes: The shocking toll of Tesla’s Autopilot “Crashes have surged in the past four years, reflecting the hazards associated with increasingly widespread use of Tesla’s futuristic driver-assistance tech.”

A.I. May Someday Work Medical Miracles. For Now, It Helps Do Paperwork. “Dr. Matthew Hitchcock, a family physician in Chattanooga, Tenn., has an A.I. helper. It records patient visits on his smartphone and summarizes them for treatment plans and billing. He does some light editing of what the A.I.”

China's Baidu claims its Ernie Bot beats ChatGPT on key tests as A.I. race heats up “Baidu said Tuesday the artificial intelligence model underpinning its chatbot outperformed OpenAI's ChatGPT in several key areas. The Chinese search engine has been publicly testing the Ernie Bot in China since it was revealed in March.”

U.S. Considers New Curbs on AI Chip Exports to China “The Biden administration is considering new restrictions on exports of artificial-intelligence chips to China, as concerns rise over the power of the technology in the hands of U.S. rivals, according to people familiar with the situation.”

Mark Zuckerberg Was Early in AI. Now Meta Is Trying to Catch Up. “The CEO considers artificial intelligence critical to long-term growth and is taking more control over efforts. Many Meta AI researchers have departed in the last year. Meta is doing something Mark Zuckerberg doesn’t like: playing catch up.”

Google DeepMind’s CEO Says Its Next Algorithm Will Eclipse ChatGPT “In 2016, an artificial intelligence program called AlphaGo from Google’s DeepMind AI lab made history by defeating a champion player of the board game Go.”

Inflection lands $1.3B investment to build more ‘personal’ AI “There’s still plenty of cash to go around in the generative AI space, apparently. As first reported by Forbes, Inflection AI, an AI startup aiming to create ‘personal AI for everyone,’ has closed a $1.”

Meta’s new AI lets people make chatbots. They’re using it for sex. “From sex chats to cancer research, ‘open-source’ models are challenging tech giants’ control over the AI revolution — to the promise or peril of society”

Amazon isn’t launching a Google-style ‘Code Red’ to catch up with Big Tech rivals on A.I.: ‘We’re 3 steps into a 10K race’ “Despite Bill Gates saying that models like ChatGPT will spell the end for Amazon and Google as we know them, Amazon seems determined to win the generative artificial intelligence race slowly but surely.”

A.I.’s Use in Elections Sets Off a Scramble for Guardrails “In Toronto, a candidate in this week’s mayoral election who vows to clear homeless encampments released a set of campaign promises illustrated by artificial intelligence, including fake dystopian images of people camped out on a downtown street and a fabricated image of tents set up in a park.”

Papers

Daniel: I found Michael C. Frank’s recent commentary “Baby steps in evaluating the capacities of large language models” a great read. Abstract representations are a key feature of human cognition, and the presence of these representations in LLMs is a proof of concept that they can be learned from data—but creating behavioral tests that conclusively show the presence of a particular representation is difficult. This is true when such tests are applied to LLMs… or to children. Developmental psychologists have been asking similar questions—do the objects of study understand other minds? Do they have the same concepts we have?—of children. The commentary isn’t too long so I’ll avoid spoiling the specific aspects of developmentalists’ toolkits that Frank points out, but I’ll echo his call for synchronizing progress in AI with what we know about human development for the benefit of both domains of study. I’ll also call out a recent paper (a bit related to my comments on stochastic gradient descent above) on SGD dynamics.

Derek: “Log-linear Guardedness and its Implications” by Ravfogel, Goldberg, and Cotterell is a nice paper that theoretically formalizes some intuitions and breaks others. They study linear concept erasure, which linearly modifies learned representations to remove undesired information (such as gender information, when we do not want downstream classifiers to be sensitive to gender). Past works often assume some notion of the form: if a linear classifier cannot predict gender from a representation, then a downstream classifier based on that representation cannot use gender information to discriminate in the downstream task. The authors prove that this is true under certain conditions if the downstream task is binary, but is false in some sense if the downstream task has more than two classes. I really liked how this work aimed to formalize intuitions that many past works have relied on, and showed some theoretical limitations that suggest further work may be needed.
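As a rough illustration of what "linearly modifying a representation to remove information" can mean, the sketch below projects a vector onto the subspace orthogonal to a single concept direction. This is the simplest possible form of linear erasure, shown only for intuition. It is not the procedure analyzed in the paper, and the vectors and function names are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]


def erase_direction(representation, concept_direction):
    """Remove the component of the representation along the concept direction."""
    u = normalize(concept_direction)
    coeff = dot(representation, u)
    return [x - coeff * ui for x, ui in zip(representation, u)]


# Hypothetical 3-dimensional representation and concept direction.
representation = [2.0, -1.0, 0.5]
concept_direction = [1.0, 1.0, 0.0]
erased = erase_direction(representation, concept_direction)

# After erasure, a linear probe along the concept direction reads (numerically) zero.
probe_score = dot(erased, concept_direction)
```

A linear probe along that direction now reads zero. The paper's question is what this kind of guarantee does and does not imply for downstream classifiers built on the erased representation.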

Closing Thoughts

Have something to say about this edition’s topics? Shoot us an email at editor@thegradient.pub and we will consider sharing the most interesting thoughts from readers in the next newsletter! For feedback, you can also reach Daniel directly at dbashir@hmc.edu or on Twitter. If you enjoyed this newsletter, consider donating to The Gradient via a Substack subscription, which helps keep this grad-student / volunteer-run project afloat. Thanks for reading the latest Update from the Gradient!

But wouldn't a Waymo car drive much more than 2x the amount of hours/miles per day as a human-operated vehicle?