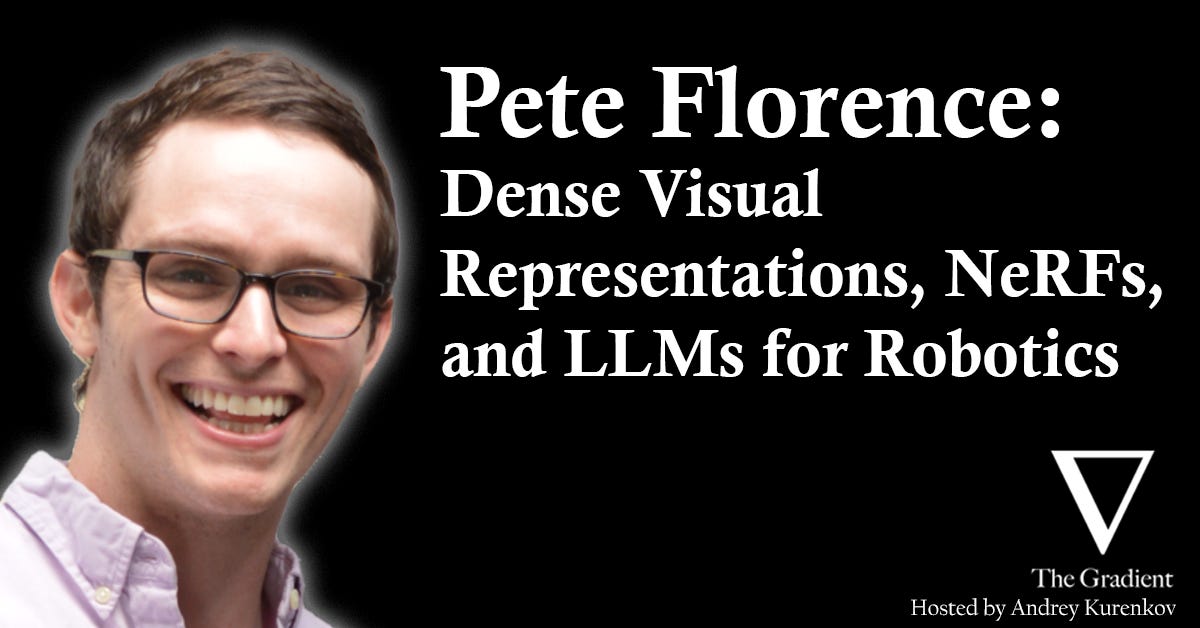

In episode 54 of The Gradient Podcast, Andrey Kurenkov speaks with Pete Florence.

Note: this episode was recorded two months ago. Andrey plans to get back to releasing episodes next year.

Pete Florence is a Research Scientist on the Robotics at Google team, part of the Brain Team in Google Research. His research spans robotics, computer vision, and natural language, including 3D learning, self-supervised learning, and policy learning for robotics. Before joining Google, he completed his PhD in Computer Science at MIT with Russ Tedrake.

Subscribe to The Gradient Podcast: Apple Podcasts | Spotify | Pocket Casts | RSS

Follow The Gradient on Twitter

Outline:

(00:00:00) Intro

(00:01:16) Start in AI

(00:04:15) PhD Work with Quadcopters

(00:08:40) Dense Visual Representations

(00:22:00) NeRFs for Robotics

(00:39:00) Language Models for Robotics

(00:57:00) Talking to Robots in Real Time

(01:07:00) Limitations

(01:14:00) Outro

Papers discussed:

Aggressive quadrotor flight through cluttered environments using mixed integer programming

High-speed autonomous obstacle avoidance with pushbroom stereo

Dense Object Nets: Learning Dense Visual Object Descriptors By and For Robotic Manipulation (Best Paper Award, CoRL 2018)

Self-Supervised Correspondence in Visuomotor Policy Learning (Best Paper Award, RA-L 2020)

iNeRF: Inverting Neural Radiance Fields for Pose Estimation

NeRF-Supervision: Learning Dense Object Descriptors from Neural Radiance Fields

Socratic Models: Composing Zero-Shot Multimodal Reasoning with Language

Inner Monologue: Embodied Reasoning through Planning with Language Models

Code as Policies: Language Model Programs for Embodied Control