Update #83: AI Music Fraud and PlanSearch

We look at the mechanics of AI-assisted music streaming fraud; researchers develop a new algorithm for LLM code generation that leverages inference-time search over high-level plans

Welcome to the 83rd update from the Gradient! If you’re new and like what you see, subscribe and follow us on Twitter. Our newsletters run long, so you’ll need to view this post on Substack to see everything!

Recently there has been a lot of discourse about whether AI art is real art. While we had neither the nerve nor the subject-matter expertise to resolve this question in this week’s update, the following pieces are worth checking out:

Ted Chiang’s New Yorker essay “Why A.I. Isn’t Going to Make Art”, which kicked off this round of discourse

The Read Max dispatch responding to Chiang’s essay and the National Novel Writing Month (NaNoWriMo) LLM controversy

Celine Nguyen’s wonderful exploration of where AI art stands in relation to the rest of art

As always, if you want to write with us, send a pitch using this form.

News Highlight: First criminal charges for AI-abetted music streaming fraud

Summary

Federal prosecutors unveiled the first-ever criminal charges for a scheme involving “Artificially Inflated Music Streaming”. The indictment alleges that Michael Smith, a musician living in North Carolina, purchased AI-generated tracks, uploaded them to various streaming platforms, then used thousands of “bots” to repeatedly stream the tracks. The scheme allegedly netted him more than $10 million in royalty payments over the course of seven years. He is charged with wire fraud, wire fraud conspiracy, and money laundering conspiracy. Each charge carries a maximum sentence of 20 years in prison.

Overview

The scheme is pretty simple:

Purchase thousands of fake email addresses

Use these email addresses to create and register thousands of fake accounts on music platforms like Spotify, Apple Music, and YouTube Music.

The royalty rate per stream is higher for paid accounts. So, find a “Manhattan-based service” whose business is to “provide large numbers of debit cards, typically corporate debit cards for employees of a company” to make it appear like each fake account used a different source of payment. Setting up thousands of paid accounts costs money, but it will be worth it.

Start streaming the music you own, a lot.

Profit
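
For a rough sense of scale, here is a back-of-the-envelope sketch in Python. Only the $10 million total and the seven-year span come from the summary above. The flat per-stream royalty of half a cent is our assumption for illustration, not a figure from this article, and real payouts vary by platform and account type.

```python
# Figures taken from the summary above.
TOTAL_ROYALTIES_USD = 10_000_000  # "more than $10 million"
SCHEME_YEARS = 7                  # "over the course of seven years"

# ASSUMPTION (hypothetical, for illustration only): a flat payout per stream.
ASSUMED_ROYALTY_PER_STREAM_USD = 0.005


def implied_streams(total_usd: float, royalty_per_stream_usd: float) -> float:
    """Streams needed to earn total_usd at a flat per-stream royalty."""
    return total_usd / royalty_per_stream_usd


royalties_per_year = TOTAL_ROYALTIES_USD / SCHEME_YEARS
total_streams = implied_streams(TOTAL_ROYALTIES_USD, ASSUMED_ROYALTY_PER_STREAM_USD)
streams_per_day = total_streams / (SCHEME_YEARS * 365)

print(f"Royalties per year: ${royalties_per_year:,.0f}")
print(f"Implied total streams: {total_streams:,.0f}")
print(f"Implied streams per day: {streams_per_day:,.0f}")
```

Under that assumed rate, the payout implies streams in the billions, which is why a handful of tracks would never have been enough.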

In the beginning there was no AI. Instead, Smith unleashed his streaming bots on a music publicist’s large catalog of existing music. Later he offered his streaming army as a service to other musicians to boost their streams. But you can only stream a single song so many times before someone notices. To evade detection, Smith needed a bigger catalog. So in 2018 he partnered with an as-yet-unnamed “AI music company” that provided him with thousands of songs each week to upload to the platforms and then stream with his bots.

The unnamed music company would provide Smith with the tracks, and he’d generate random but oddly plausible track titles and artist names.

Despite his best efforts, Smith had his account flagged or removed from platforms numerous times over the course of the scheme. Really, he could have been a lot more subtle about the whole thing.

The indictment does not provide details on how the AI-generated music was actually generated. The only indication that the company was using bona fide deep learning genAI and not some simpler procedural method is an email excerpt in the indictment from one of the AI music company’s employees to Smith: “Song quality is 10x-20x better now, and we also have vocal generation capabilities. . . . Have a listen to the attached for an idea of what I'm talking about.” And in some sense AI is incidental to this story; there is nothing illegal about uploading AI-generated music to Spotify. AI just scaled the pre-existing fraud.

Our Take

The federal prosecutors describe Smith’s crime as stealing from “musicians, songwriters, and other rights holders whose songs were legitimately streamed.” Mechanically that’s true: Smith’s actions resulted in smaller royalty payments to other musicians. But I’m somewhat sympathetic to Mr. Smith (as are NYT commenters): he took advantage of a broken system in a way that many others do.

Matt Levine has a characteristically good and relevant take:

“Basically much of modern economics, and life, has the following characteristics:

Everything is intermediated through some depersonalized automated electronic exchange.

The automated electronic exchange has a mechanism — how it actually works, what the exchange’s software allows you to do — and also rules, the terms of service regulating how you can use the mechanism, which are fuzzier than the mechanism and written in small print, things like “don’t do fraud” or “you have to be a human” or whatever.

The mechanism is much more legible and salient than the rules, and in a depersonalized electronic world people treat the mechanism as the rules: They don’t believe that the rules exist, because the rules seem to contradict how the service works. The basic description of Spotify’s mechanics suggests Smith’s alleged arbitrage; if he didn’t do it surely someone else would.”

– Cole

Research Highlight: Planning In Natural Language Improves LLM Search For Code Generation

Summary

Researchers from Scale AI (including Gradient co-founder Hugh Zhang 😀 ) published PlanSearch, a novel search algorithm for LLM code generation tasks. Unlike traditional methods that scale inference compute by searching over similar code solutions, PlanSearch explores the space of problem-solving plans in natural language. This approach leads to a more diverse exploration of potential solutions. The algorithm shows promising results on several coding benchmarks, including HumanEval+, MBPP+, and LiveCodeBench. This work addresses the challenge of effectively scaling inference compute in LLMs for code generation and offers a new direction for search over the “concept” space rather than over code.

Overview

The authors observe that a lack of diversity in SoTA LLM outputs can hinder search algorithms (defined as any method that leverages additional compute at inference time to improve overall performance), because search benefits from exploring a diverse set of possibilities. There is evidence that post-training methods like DPO and RLHF reduce output diversity, and in fact the authors show that some models’ base versions outperform their instruct versions when they are allowed to generate multiple possible solutions:

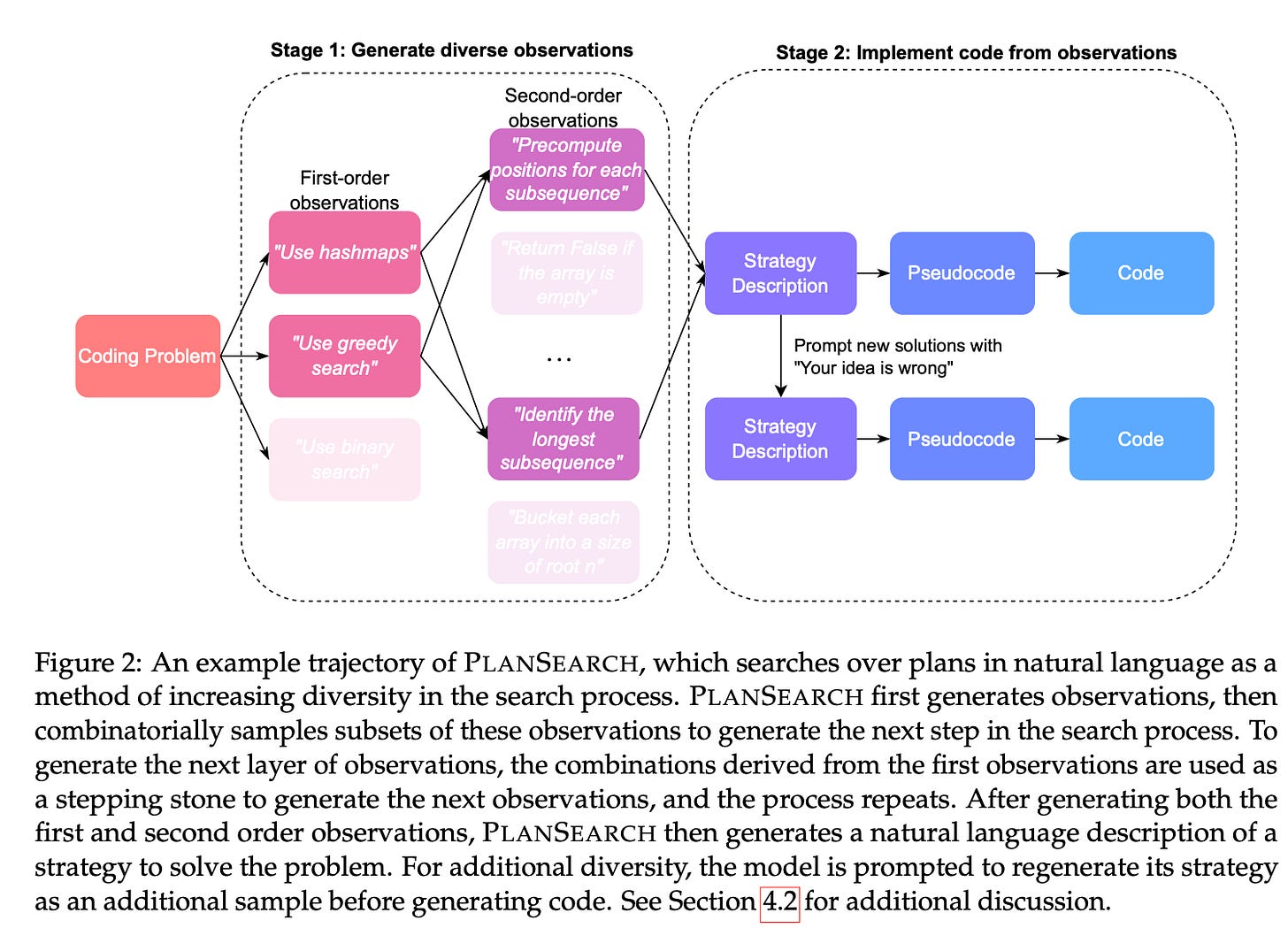
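
To see why diversity can matter more than single-shot accuracy once you sample many times, consider a toy model (ours, not the paper's). The two "models" below and their per-problem success probabilities are hypothetical: one solves the same 4 of 10 problems on every sample, the other has an independent 10% chance on every problem.

```python
from typing import Sequence


def pass_at_k_independent(p: float, k: int) -> float:
    """Chance that at least one of k independent samples is correct."""
    return 1.0 - (1.0 - p) ** k


def mean_pass_at_k(per_problem_p: Sequence[float], k: int) -> float:
    """Average pass@k over problems, given each problem's per-sample success rate."""
    return sum(pass_at_k_independent(p, k) for p in per_problem_p) / len(per_problem_p)


# Hypothetical low-diversity model: always solves 4 problems, never solves the other 6.
low_diversity = [1.0] * 4 + [0.0] * 6
# Hypothetical high-diversity model: a 10% chance per sample on every problem.
high_diversity = [0.1] * 10

for k in (1, 10, 200):
    low = mean_pass_at_k(low_diversity, k)
    high = mean_pass_at_k(high_diversity, k)
    print(f"k={k:>3}  low-diversity={low:.3f}  high-diversity={high:.3f}")
```

In this toy setup the low-diversity model wins at k=1 but gains nothing from extra samples, while the high-diversity model keeps improving as k grows.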

The key idea of PlanSearch is to search over higher-level, conceptual natural language descriptions of solutions rather than the solution code itself. The authors first test this idea by checking whether prompting an LLM with a correct natural language sketch of a solution improves code generation performance. They generate “backtranslated” sketches by feeding an LLM both a problem and a correct code solution and asking the LLM for a natural language description of the solution. They find that the sketches significantly improve performance, with longer sketches providing even more benefit.

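Next, they demonstrate the importance of having a good sketch, and not just any sketch, by showing that the accuracy of an LLM conditioned on a particular sketch trends towards either 0% or 100%. In other words, a given sketch tends to lead to code that is either almost always right or almost always wrong.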

Having established that sketches improve performance, and that a good sketch can make or break it, the authors present a search algorithm that capitalizes on this. For a given LLM and coding problem, their algorithm, PlanSearch, involves:

Generating many first-order observations about the problem

Combinatorially sampling combinations of the first-order observations with which to generate second-order observations by prompting the LLM to use/merge the selected first-order observations

Generating a natural language description of a strategy (i.e. a sketch) to solve the problem based on the first- and second-order observations

Generating more solution sketches with the prompt “Your idea is wrong”

Generating a code solution based on the solution sketch

PlanSearch is evaluated on three coding benchmarks (LiveCodeBench, HumanEval+, and MBPP+) on top of four models (GPT-4o, GPT-4o-mini, DeepSeek-Coder-V2, and Claude-Sonnet-3.5). The authors compare PlanSearch with 200 generated solutions (“PlanSearch@200”) to basic repeated sampling 200 times (“Pass@200”), single generation with no search (“Pass@1”), and IdeaSearch, which simply asks for a sketch and then separately prompts the LLM to generate code that follows the proposed sketch (“IdeaSearch@200”).
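
For readers unfamiliar with the “@k” notation: pass@k is the probability that at least one of k sampled solutions passes the tests. This summary does not say exactly how the authors compute it, so the sketch below is an assumption on our part. It shows the combinatorial estimator commonly used for code benchmarks, which estimates pass@k from n samples of which c are correct as 1 - C(n-c, k) / C(n, k).

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k from n generated samples, c of which are correct."""
    if not (0 <= c <= n) or not (1 <= k <= n):
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Fewer than k incorrect samples: every size-k draw contains a correct one.
        return 1.0
    # Probability that a random size-k subset contains no correct sample.
    all_wrong = comb(n - c, k) / comb(n, k)
    return 1.0 - all_wrong


# Example: 10 samples with 3 correct.
print(pass_at_k(10, 3, 1))
print(pass_at_k(10, 3, 5))
```

With k equal to 1 this reduces to the plain fraction of correct samples, c / n.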

PlanSearch performs extremely well, consistently outperforming the non-search baseline by 25-35 percentage points and “Pass@200” by 10-20 percentage points on LiveCodeBench.

Our Take

Exploring concept space rather than code solution space makes a lot of intuitive sense. 9 out of 10 computer science professors recommend sitting down with pen and paper to sketch out a plan before beginning to code. It doesn’t matter how good you are at writing code if your approach is wrong. Computer science legend Donald Knuth famously said, “Premature optimization is the root of all evil.” Likewise, just last week AI legend Noam Brown tweeted: “1 engineer working in the right direction beats 100 geniuses working in the wrong direction.” The principle is the same. The fact that this sort of bedrock comp sci wisdom ports to LLM performance is somehow comforting.

– Cole

New from the Gradient

What's Missing From LLM Chatbots: A Sense of Purpose

Davidad Dalrymple: Towards Provably Safe AI

Clive Thompson: Tales of Technology

Other Things That Caught Our Eyes

News

Apple Unveils New iPhones With Built-In Artificial Intelligence

Apple has unveiled its new iPhone 16 lineup, which comes with built-in artificial intelligence (AI). The iPhone 16 comes in four different models and is designed to run Apple's generative AI system, called Apple Intelligence. The phones will have features such as sorting messages, offering writing suggestions, and an improved Siri virtual assistant. This marks a departure from the predictable design of previous iPhones and introduces AI capabilities to enhance user experience.

US, EU, UK, and others sign legally enforceable AI treaty

The US, UK, and European Union, along with several other countries, have signed the first "legally binding" treaty on AI called the Framework Convention on Artificial Intelligence. The treaty aims to ensure that the use of AI aligns with human rights, democracy, and the rule of law. It lays out key principles that AI systems must follow, including protecting user data, respecting the law, and maintaining transparency. Each country that signs the treaty must adopt appropriate measures reflecting the framework. While the treaty is legally binding, enforcement primarily relies on monitoring, which is considered a relatively weak form of enforcement.

OpenAI Hits 1 Million Paid Users For Business Versions of ChatGPT

OpenAI has reached a milestone of over 1 million paid users for its corporate versions of ChatGPT, indicating a growing demand for its chatbot among businesses. This number includes users of ChatGPT Team and Enterprise services, as well as those using ChatGPT Edu at universities. OpenAI introduced ChatGPT Enterprise a year ago with enhanced features and privacy measures to generate revenue and offset the high costs of AI development. While the increase in paid corporate users is significant, it is unclear how many new businesses have signed up. OpenAI has not disclosed the average number of paid users per corporate customer. The majority of OpenAI's corporate users are based in the US, with Germany, Japan, and the United Kingdom being the most popular countries outside the US.

From ChatGPT to Gemini: how AI is rewriting the internet

The article discusses how big players like Microsoft, Google, and OpenAI are making AI chatbot technology more accessible to the general public. These companies are developing large language model (LLM) programs such as Copilot, Gemini, and GPT-4o. These AI tools work by using autocomplete-like programs to learn language and analyze the statistical properties of the language to make educated guesses based on previously typed words. However, it is important to note that these AI tools do not have a hard-coded database of facts and may present false information as truth since their focus is on generating plausible-sounding statements rather than guaranteeing factuality.

Big Tech ‘Clients’ of Jacob Wohl’s Secret AI Lobbying Firm Say They've Never Heard of It

Jacob Wohl and Jack Burkman, convicted fraudsters and right-wing activists, have been operating a company called LobbyMatic that claims to offer AI-powered lobbying services. However, it has been revealed that many of the major companies listed as clients of LobbyMatic have never heard of the company. LobbyMatic purports to use AI to help companies and lobbyists create lobbying strategies, analyze hearings and bills, and track legislative progress. The company was run under the pseudonyms "Jay Klein" and "Bill Sanders" by Wohl and Burkman. Despite claiming to have signed up Toyota, Boundary Stone Partners, and Lantheus as clients, these companies have denied any association with LobbyMatic. The company has since removed screenshots from its website that suggested major companies were using its software. Boundary Stone Partners, one of the few companies that did use the platform, terminated its contract due to the tool's ineffectiveness. Wohl and Burkman were convicted of felony telecom fraud in 2022 and were fined $5 million by the FCC.

How Self-Driving Cars Get Help From Humans Hundreds of Miles Away

Self-driving cars are not completely autonomous and often require human assistance to navigate challenging situations. Companies like Zoox, owned by Amazon, have command centers where technicians remotely guide self-driving cars when they encounter obstacles or unfamiliar scenarios. Technicians receive alerts and can send new routes to the cars using a computer mouse. They can also view video feeds from the car's cameras and make real-time adjustments to the car's path. While companies like Waymo and Cruise have started to acknowledge the need for human assistance, they have not disclosed the number of technicians employed or the associated costs. Remote assistance is one reason why robot taxis may struggle to replace traditional ride-hailing fleets operated by Uber and Lyft. Despite advancements in self-driving technology, human intervention is still necessary for safe and efficient operation.

OpenAI, Still Haunted by Its Chaotic Past, Is Trying to Grow Up

OpenAI, a prominent player in the field of artificial intelligence, is undergoing significant changes in its management team and organizational structure as it seeks investments from major companies. The company has hired notable tech executives, disinformation experts, and AI safety researchers, and has added seven board members, including a former four-star Army general. OpenAI is also in discussions with potential investors such as Microsoft, Apple, Nvidia, and Thrive, with a potential valuation of $100 billion. Additionally, the company is considering altering its corporate structure to attract more investors. These moves reflect OpenAI's efforts to present itself as a serious and responsible leader in the AI industry, while resolving past conflicts and focusing on its future goals.

Closing Thoughts

Have something to say about this edition’s topics? Shoot us an email at editor@thegradient.pub and we will consider sharing the most interesting thoughts from readers in the next newsletter! For feedback, you can also reach Daniel directly at dbashir@hmc.edu or on Twitter. If you enjoyed this newsletter, consider donating to The Gradient via a Substack subscription, which helps keep this volunteer-run project afloat. Thanks for reading the latest Update from the Gradient!