Update #82: AI Lawsuits and SOPHON

We look at the numerous ongoing lawsuits against AI giants; researchers develop a method to address the risk of pre-trained models being repurposed for unethical or harmful tasks.

Welcome to the 82nd update from the Gradient! If you’re new and like what you see, subscribe and follow us on Twitter. Our newsletters run long, so you’ll need to view this post on Substack to see everything!

As always, if you want to write with us, send a pitch using this form.

News Highlight: Numerous lawsuits against AI giants allowed to proceed

Summary

During a recent interview with Stanford students, former Google CEO Eric Schmidt attracted numerous headlines, mostly focused on his sensationalist claim that “working from home was more important than winning.” That claim, which sits awkwardly with the fact that Google’s stock price tripled during its remote-work period, has largely overshadowed his comments on generative AI, which deserve a more critical response. As reported by The Verge, the former executive highlighted his belief that theft is a critical component underpinning the success of generative AI. He went on to encourage the students to make “a copy of TikTok, steal all the users, steal all the music, put my preferences in it… hire a whole bunch of lawyers to go clean the mess up…, it doesn’t matter that you stole all the content. And do not quote me.”

Eric Schmidt is neither the first nor the last to allege a relationship between generative AI and stealing; in this case, stealing not only TikTok’s intellectual property but also its users’ personal (and private) data, as well as all of the music that TikTok allegedly pays half a billion dollars a year to license. And while not all generative AI applications are built on theft (consider AlphaFold, which has been trained to predict protein structures, or COATI, an encoder-decoder model trained on billions of quantitative chemical binding measurements and used to generate new drug candidates), this attitude is pervasive throughout many commercial technology and AI spaces. In practice, it has resulted in dozens of lawsuits alleging variations of intellectual property theft, copyright infringement, and data privacy violations. While OpenAI’s ChatGPT has, to our knowledge, attracted the most (13 pending suits, according to one legal tracker), the claims against it are neither unique nor novel. In recent days, a US district judge allowed numerous artists’ claims against Midjourney and Stability AI to proceed, and a group of authors filed suit against Anthropic over its chatbot Claude. In both cases, the creatives allege that using their copyrighted materials to build these generative AI tools is not fair use and that the tools infringe on their rights.

Overview

At first, it may be difficult to imagine what could unite concept artists, prestigious media organizations, romance authors, comedians, musicians, software engineers, actors, and George R. R. Martin. In aggregate, we see a common pattern: creatives, artists, and intellectuals seeing their life’s work used (without consent) to train generative models whose capabilities, they credibly allege, reproduce copyrighted materials and could one day replace the creatives themselves. Across all the lawsuits, we see a common set of core allegations and legal questions:

Does training a large language model with copyrighted works constitute fair use?

Can content generated by LLMs infringe a copyright?

Will court rulings differ depending on whether the content was a direct replication, paraphrase, imitation, or parody?

Does the DMCA (Digital Millennium Copyright Act) provide a legal remedy to take down potentially infringing material generated by AI?

Do AI-generated images that strip copyright or trademark symbols violate the DMCA?

Does scraping content to train models constitute unauthorized use of personal information and infringe privacy and consumer rights?

To date, judges have ruled in favor of the AI companies on most points, with some notable exceptions. In one of the earliest cases, involving the comedian Sarah Silverman, the judge dismissed 5 of the 6 claims against OpenAI, including the DMCA ones, leaving open only one charge on whether there was direct infringement. A similar pattern played out in a different courtroom last week, where a US district judge advanced copyright infringement claims against Stability AI and Midjourney while dismissing those tied to the DMCA and unjust enrichment. A third lawsuit, filed in San Francisco this week, alleges that Anthropic’s use of the Pile, a massive collection of text data used to train its chatbot Claude, is not fair use because the dataset contains “pirated” collections of books. These claims mirror those brought against Anthropic last October by music publishers, who pointed to Claude’s uncanny ability to reproduce popular (and, more importantly, copyrighted) lyrics.

While it is difficult to predict how judges will rule (and whether the US Supreme Court would eventually let those rulings stand or overturn them), we are approaching a crossroads at which judges will start ruling directly on these fair use and copyright questions. Whatever the outcome, these decisions could profoundly affect both the creative and AI communities.

Our Take

I really hope people quote the former executive, particularly the lawyers in all of these court proceedings! – Justin

Research Highlight: SOPHON: Non-Fine-Tunable Learning to Restrain Task Transferability For Pre-trained Models

Summary

"SOPHON: Non-Fine-Tunable Learning to Restrain Task Transferability for Pre-trained Models," from researchers at Zhejiang University and Ant Group, tackles a growing concern in the AI community: the risk of pre-trained models being repurposed for unethical or harmful tasks. As AI models become more powerful and accessible, the potential for misuse grows. SOPHON offers a potential solution: a protection framework designed so that these models perform their intended tasks while resisting adaptation for illicit purposes.

Overview

With large-scale training across data modalities, pre-trained models are commonly used as the backbone to efficiently develop and deploy models for specific downstream tasks. These models, trained on vast datasets and with immense computational power, can be easily fine-tuned to perform a wide variety of tasks. However, this very versatility poses a significant risk: the same models can be co-opted for unethical or harmful purposes, such as privacy violations or the generation of malicious content.

A recent work from researchers at Zhejiang University and Ant Group tackles this very challenge by introducing a novel learning paradigm known as non-fine-tunable learning. The motivation behind SOPHON is to prevent pre-trained models from being fine-tuned for indecent tasks while maintaining their effectiveness in their original, intended domains.

The paper introduces a framework where there are two key players: an adversary and a defender. The adversary represents the malicious entity attempting to fine-tune a pre-trained model for unethical tasks. Their goal is to modify the model so that it performs well in a restricted domain, such as generating inappropriate content or inferring sensitive personal information. On the other hand, the defender is the entity that controls the release of the pre-trained model and seeks to prevent its misuse. The defender’s goal is to ensure that the model remains effective for its original tasks but cannot be easily repurposed by the adversary.

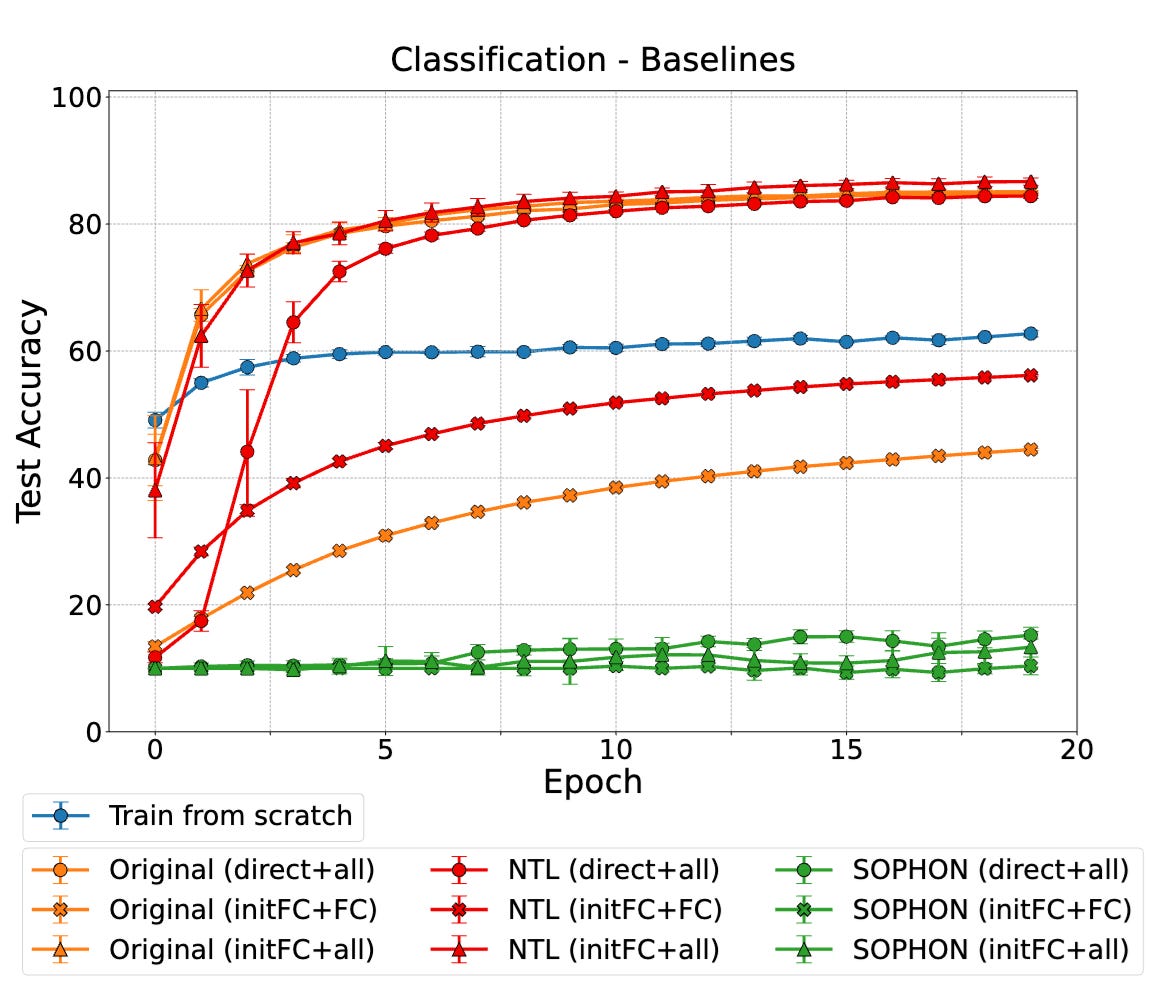

Figure: SOPHON operates through two key phases: 1) Fine-Tuning Suppression (FTS) loops, which simulate various fine-tuning scenarios to reduce performance in the restricted domain; and 2) Normal Training Reinforcement (NTR) loops, which focus on preserving the model’s performance in its original domain.

To achieve this, the SOPHON framework leverages a technique inspired by Model-Agnostic Meta-Learning (MAML). MAML is a meta-learning approach designed to optimize models so they can quickly adapt to new tasks with minimal data. SOPHON, however, uses MAML in reverse: to make fine-tuning for restricted tasks difficult.
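To see what "in reverse" means, consider a toy sketch of our own (not SOPHON's code): one scalar parameter, a quadratic task loss, and a single simulated SGD step standing in for fine-tuning. MAML's key quantity is the loss measured *after* that step. MAML descends its gradient so that adaptation becomes easy; a SOPHON-style defender ascends it so that adaptation becomes hard. All function names and constants below are hypothetical.

```python
# Toy illustration (hypothetical, not the SOPHON implementation): one scalar
# parameter, quadratic task loss, and one simulated SGD step of fine-tuning.


def loss(theta: float, target: float) -> float:
    """Quadratic task loss (theta - target)^2."""
    return (theta - target) ** 2


def grad(theta: float, target: float) -> float:
    """Derivative of the quadratic loss with respect to theta."""
    return 2.0 * (theta - target)


def adapt(theta: float, target: float, lr: float) -> float:
    """Parameters after one simulated fine-tuning (SGD) step on the task."""
    return theta - lr * grad(theta, target)


def post_finetune_loss(theta: float, target: float, lr: float) -> float:
    """MAML's key quantity: the task loss measured *after* adaptation."""
    return loss(adapt(theta, target, lr), target)


def meta_grad(theta: float, target: float, lr: float) -> float:
    """Exact derivative of post_finetune_loss w.r.t. the pre-adaptation theta.

    adapt() is theta - 2*lr*(theta - target), so d(adapt)/d(theta) = 1 - 2*lr.
    """
    return grad(adapt(theta, target, lr), target) * (1.0 - 2.0 * lr)


theta, target, lr, meta_lr = 0.0, 1.0, 0.1, 0.5
# MAML: descend the post-adaptation loss, so fine-tuning becomes easier.
maml_theta = theta - meta_lr * meta_grad(theta, target, lr)
# Reversed: ascend it, so fine-tuning becomes harder.
reversed_theta = theta + meta_lr * meta_grad(theta, target, lr)

for name, t in [("start", theta), ("MAML", maml_theta), ("reversed", reversed_theta)]:
    print(f"{name:>8}: loss after fine-tuning = {post_finetune_loss(t, target, lr):.4f}")
```

The only difference between the two updates is the sign in front of the same meta-gradient: the loss after one fine-tuning step falls for the MAML update and rises for the reversed one.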

Fine-Tuning Simulation: The defender uses MAML to simulate various fine-tuning strategies that an adversary might employ. These simulations are critical because they allow the defender to anticipate how an adversary might try to adapt the model. By simulating these scenarios, the defender can adjust the model’s parameters to make fine-tuning in restricted domains highly inefficient or even ineffective.

Optimization Process: SOPHON integrates these simulated fine-tuning processes into its optimization framework. The key idea is to degrade the model’s performance when fine-tuned for restricted tasks while maintaining its effectiveness in the original domain. This is done by balancing two objectives:

Intactness: Ensuring the model retains its performance on the original task.

Non-Fine-Tunability: Making sure that fine-tuning the model in restricted domains results in significant performance degradation or requires as much effort as training a new model from scratch.
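One way to write this trade-off is the simplified formulation below. It is our own, not the exact loss used in the paper. Here $\theta$ are the model's parameters, $\mathcal{D}_o$ and $\mathcal{D}_r$ are data from the original and restricted domains, $\phi_i(\theta; \mathcal{D}_r)$ denotes the parameters obtained by running the $i$-th of $N$ simulated fine-tuning strategies on restricted data, and $\lambda > 0$ is a hypothetical weighting constant.

```latex
\min_{\theta}\;
\underbrace{\mathcal{L}\big(\theta;\, \mathcal{D}_o\big)}_{\text{intactness}}
\;-\;
\lambda \cdot \frac{1}{N}\sum_{i=1}^{N}
\underbrace{\mathcal{L}\big(\phi_i(\theta;\, \mathcal{D}_r);\, \mathcal{D}_r\big)}_{\text{non-fine-tunability}}
```

Minimizing the first term preserves intactness, while the minus sign pushes the restricted-domain loss *after* simulated fine-tuning upward. Because a loss like cross-entropy can grow without bound, a practical version would likely need a bounded surrogate for the second term.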

Defender’s Strategy: The defender’s strategy involves iteratively simulating fine-tuning attempts, evaluating the model’s vulnerability, and reinforcing the model against these potential adversarial adaptations. This process is computationally intensive but crucial for ensuring that the model remains robust against misuse.
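The iterative strategy can be sketched in the same toy spirit (hypothetical, not the authors' code). Each round runs an FTS-style step that ascends the restricted-domain loss measured after several simulated fine-tuning attacks, differentiating through the unrolled SGD steps as MAML does. It then runs an NTR-style step that descends the original-domain loss. The targets `A` and `B`, the attack strategies, and the step sizes are made-up values.

```python
# Toy sketch of alternating FTS / NTR loops (hypothetical, not the authors' code).
# One scalar parameter: the original task prefers theta near A, the restricted
# task prefers theta near B. Both losses are simple quadratics.

A, B = 0.0, 1.0                     # made-up optima for original / restricted task
STRATEGIES = ((0.1, 1), (0.05, 3))  # simulated attacks: (learning rate, SGD steps)


def unrolled_finetune(theta: float, lr: float, steps: int) -> tuple[float, float]:
    """Simulate SGD fine-tuning on the restricted loss (theta - B)^2.

    Also returns d(theta_final)/d(theta_initial), tracked step by step
    (forward-mode differentiation through the unrolled updates).
    """
    deriv = 1.0
    for _ in range(steps):
        theta = theta - lr * 2.0 * (theta - B)
        deriv *= 1.0 - 2.0 * lr  # derivative of one SGD update w.r.t. its input
    return theta, deriv


def mean_attack_loss(theta: float, strategies=STRATEGIES) -> float:
    """Restricted-domain loss after fine-tuning, averaged over simulated attacks."""
    return sum((unrolled_finetune(theta, lr, k)[0] - B) ** 2
               for lr, k in strategies) / len(strategies)


def fts_grad(theta: float, strategies=STRATEGIES) -> float:
    """Gradient of mean_attack_loss, via the chain rule through fine-tuning."""
    total = 0.0
    for lr, k in strategies:
        tuned, deriv = unrolled_finetune(theta, lr, k)
        total += 2.0 * (tuned - B) * deriv
    return total / len(strategies)


def ntr_grad(theta: float) -> float:
    """Gradient of the original-domain loss (theta - A)^2."""
    return 2.0 * (theta - A)


def train_defender(theta: float = 0.0, rounds: int = 200,
                   step: float = 0.1, weight: float = 0.5) -> float:
    for _ in range(rounds):
        theta += step * weight * fts_grad(theta)  # FTS-style: ascend post-attack loss
        theta -= step * ntr_grad(theta)           # NTR-style: descend original loss
    return theta


protected = train_defender()
print(f"theta={protected:.3f}  original loss={(protected - A) ** 2:.3f}  "
      f"post-attack restricted loss={mean_attack_loss(protected):.3f} "
      f"(unprotected: {mean_attack_loss(A):.3f})")
```

In this toy, the parameter drifts away from `B`, raising the post-attack restricted loss, while the NTR step keeps it close to `A`. If `weight` is set too high, the ascent term dominates and `theta` diverges, a small-scale version of why maximizing an unbounded loss needs care.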

The paper also provides extensive experimental results to validate the effectiveness of SOPHON. The framework was tested on two major types of deep learning tasks, classification (as shown in the Figure above) and generation, using seven different restricted domains and six model architectures. The experiments showed that SOPHON-protected models incur significant overhead when adversaries attempt to fine-tune them for restricted tasks. In some cases, the overhead was so large that fine-tuning the protected model cost as much as, or more than, training a new model from scratch. Further, as shown in the figure above, SOPHON is robust to a range of fine-tuning settings, such as the choice of optimizer, learning rate, and batch size.
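A robustness claim of this kind is usually checked by fine-tuning under a grid of settings and looking at the worst case for the defender: the setting that recovers the most restricted-domain performance. Below is a toy sketch of that evaluation loop in a hypothetical scalar setting. It uses plain gradient descent only, because optimizer choice and batch size have no analogue in this toy, and every name and value is illustrative.

```python
from itertools import product


def finetune(theta: float, target: float, lr: float, steps: int) -> float:
    """Simulated adversary: plain gradient descent on the loss (theta - target)^2."""
    for _ in range(steps):
        theta -= lr * 2.0 * (theta - target)
    return theta


def attack_grid(theta: float, target: float, lrs, step_counts) -> dict:
    """Restricted-domain loss after each (learning rate, steps) attack setting."""
    return {
        (lr, steps): (finetune(theta, target, lr, steps) - target) ** 2
        for lr, steps in product(lrs, step_counts)
    }


def worst_case(results: dict):
    """Most successful attack = lowest remaining restricted-domain loss."""
    setting = min(results, key=results.get)
    return setting, results[setting]


grid = attack_grid(theta=0.0, target=1.0, lrs=[0.01, 0.1], step_counts=[1, 5])
setting, remaining_loss = worst_case(grid)
print(f"strongest attack {setting} leaves loss {remaining_loss:.4f}")
```

Running the same grid on a protected model, an unprotected one, and a model trained from scratch gives the kind of comparison described above.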

Qualitatively, for the task of denoising images from the CelebA dataset, fine-tuning the original model in the restricted domain achieves strong performance, and even training a model from scratch yields fairly good, if slightly weaker, results. However, when fine-tuned from the SOPHON-protected model, the diffusion model shows a marked inability to denoise facial images, as shown in the Figure below.

Our Take

SOPHON is a critical step forward in safeguarding AI from misuse. As AI models become more powerful, the risk of their being repurposed for unethical tasks increases. SOPHON tackles this issue by preventing fine-tuning in restricted domains while maintaining the model’s intended functionality. The use of MAML is particularly novel: a technique traditionally used to make models more adaptable is cleverly reversed to make them resistant to adversarial fine-tuning. The name “SOPHON” is also a clever choice: in Liu Cixin’s The Three-Body Problem, sophons are devices sent to Earth to stall humanity’s scientific progress, much as SOPHON aims to stall a model’s progress on restricted tasks.

Overall, the paper presents a neat idea and a promising step forward, provided it works as well in practice.

– Sharut

New from the Gradient

Judy Fan: Reverse Engineering the Human Cognitive Toolkit

L.M. Sacasas: The Questions Concerning Technology

Other Things That Caught Our Eyes

News

Mayoral candidate vows to let VIC, an AI bot, run Wyoming’s capital city

Mayoral candidate Victor Miller in Wyoming is vowing to run the city of Cheyenne exclusively with an AI bot called VIC (Virtual Integrated Citizen). This pledge is believed to be the first of its kind in U.S. campaigns and has raised concerns among officials and tech companies. Miller argues that AI would bring objectivity, efficiency, and transparency to government decision-making. However, critics worry that chatbots lack moral judgment and the capacity for subjective decision-making, and point to their potential to spread false information and the ease with which the technology can be manipulated. Despite the skepticism, Miller remains confident in his AI-centered campaign. The case highlights the rapid development of AI and the challenges of regulating its use in politics.

In a leaked recording, Amazon Web Services' CEO, Matt Garman, stated that most developers may not need to code in the future as artificial intelligence (AI) takes over coding tasks. Garman believes that coding is just a means of communicating with computers and that the real skill lies in innovation and building something interesting for end users. He suggests that developers will need to focus more on understanding customer needs and creating innovative solutions, rather than writing code. Garman's comments were not meant as a dire warning, but rather as an optimistic view of the changing role of developers in the AI era.

DeepMind workers sign letter in protest of Google’s defense contracts

At least 200 workers at DeepMind have expressed their dissatisfaction with Google's defense contracts. In a letter circulated internally in May, the workers expressed concern about Google's contracts with military organizations, specifically citing the tech giant's contracts with the Israeli military for AI and cloud computing services. The workers argue that any involvement with military and weapon manufacturing contradicts DeepMind's mission statement and stated AI Principles, and undermines their position as leaders in ethical and responsible AI. This highlights a potential culture clash between Google and DeepMind, as Google had previously pledged in 2018 that DeepMind's technology would not be used for military or surveillance purposes.

AI sales rep startups are booming. So why are VCs wary?

AI sales development representatives (SDRs) are experiencing rapid growth in the market, with multiple startups finding success in a short period of time. These startups use AI technology, such as LLMs and voice technology, to automate content creation for sales teams. However, venture capitalists are wary of investing in these companies due to concerns about their long-term viability and effectiveness compared to human outreach. While small and medium-sized businesses are eager to experiment with AI SDRs to improve their sales outreach, it remains unclear if these tools are actually helping businesses sell more effectively. Additionally, established competitors like Salesforce, HubSpot, and ZoomInfo could potentially offer similar AI solutions as free features, posing a threat to the growth of AI SDR startups. Overall, while the adoption of AI SDRs is rapid, investors are skeptical about their staying power in the market.

We finally have a definition for open-source AI

The Open Source Initiative (OSI) has finally defined what it means for an AI system to be open-source. According to this definition, an open-source AI system should be usable for any purpose without permission, allow researchers to inspect its components and understand how it works, and be modifiable and shareable. The standard also emphasizes transparency about training data, source code, and weights. The definition matters because some companies have been misusing the term in their marketing.

Waymo wants to chauffeur your kids

Waymo, the Alphabet subsidiary, is considering a subscription program called "Waymo Teen" that would allow teenagers to hail one of its cars solo and send pickup and drop-off alerts to their parents. The program would require authorized teenagers to access Waymo under their guardians' supervision. Waymo has received positive feedback from its research in this area. This move by Waymo follows Uber's initiative last year to match teens with highly rated drivers in its network. Consent from legal guardians is required, and they receive notifications about their child's whereabouts during rides.

In an interview with IGN, Amazon Games CEO Christoph Hartmann made some interesting statements regarding the use of generative AI in the games industry. He expressed hope that AI could shorten the game development cycle, but when asked about the SAG-AFTRA voice actors' strike for better AI protections, Hartmann claimed that "for games, we don't really have acting." This statement contradicts the significant role that acting plays in many video games, such as Baldur's Gate 3 and The Last of Us. Hartmann also discussed other areas where AI could assist game development, particularly in localization. However, it's important to note that localization involves nuanced translation and cultural understanding, which may not be easily achieved by AI. Hartmann concluded by emphasizing that human creativity and uniqueness cannot be replaced by technology.

Man Arrested for Creating Child Porn Using AI

A Florida man has been arrested and is facing 20 counts of obscenity for creating and distributing AI-generated child pornography. Phillip Michael McCorkle was arrested after the Indian River County Sheriff's Office received tips that he was using an AI image generator to create and distribute child sexual imagery. This arrest highlights the danger of generative AI being used for nefarious purposes, as it provides new avenues for crime and child abuse. The increasing prevalence of AI-generated child pornography has prompted lawmakers to push for legislation to make it illegal, but it remains a challenging problem to effectively stop. The National Center for Missing & Exploited Children received thousands of reports of AI-generated child porn last year, and even deepfakes of real children are being created using generative AI. This uncontrollable problem requires urgent attention and action.

Papers

Training Language Models on Knowledge Graphs: Insights on Hallucinations and Their Detectability

Transfusion: Predict the Next Token and Diffuse Images with One Multi-Modal Model

To Code, or Not to Code? Exploring the Impact of Code in Pre-training

Recurrent Neural Networks Learn to Store and Generate Sequences using Non-Linear Representations

Closing Thoughts

Have something to say about this edition’s topics? Shoot us an email at editor@thegradient.pub and we will consider sharing the most interesting reader thoughts in the next newsletter! If you enjoyed this newsletter, consider donating to The Gradient via a Substack subscription, which helps keep this volunteer-run project afloat. Thanks for reading the latest Update from the Gradient!
