Update #81: The EU AI Act's Enforcement and Self-Taught Evaluators

The EU AI Act is now in force; Meta researchers present a method for improving language models’ abilities to judge without needing human annotation.

Welcome to the 81st update from the Gradient! If you’re new and like what you see, subscribe and follow us on Twitter. Our newsletters run long, so you’ll need to view this post on Substack to see everything!

As always, if you want to write with us, send a pitch using this form.

News Highlight: The EU AI Act is now in force

Summary

The EU AI Act has long been in development and, after receiving a final green light earlier this year, has now entered into force. The legislation follows a risk-based approach that imposes stricter rules on higher-risk systems. Because the Act has been in the works for a while, commentators and analysts have had plenty of time to evaluate it and its implications.

Overview

Based on the Act’s website, there are four key takeaways for the AI Act:

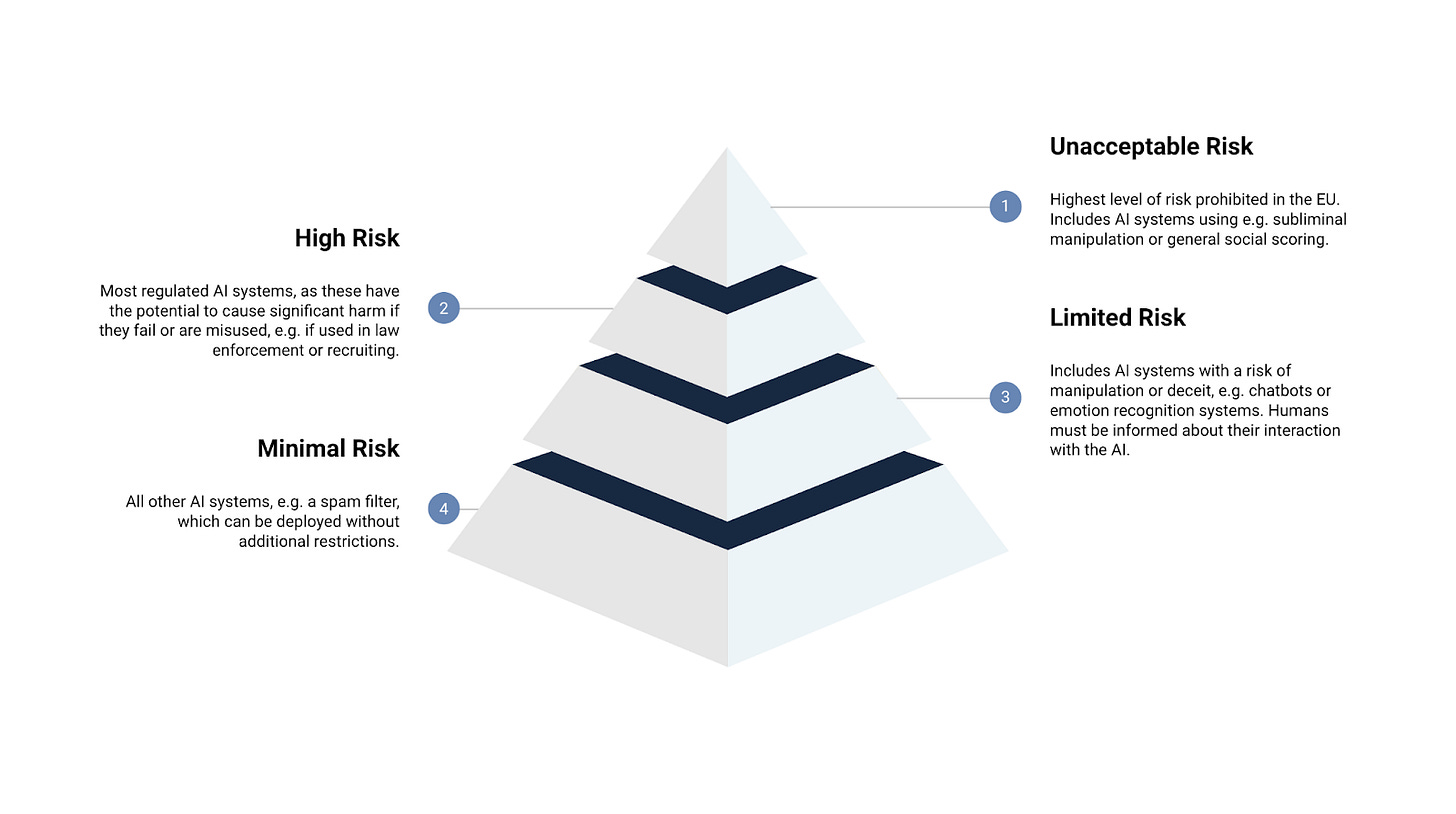

The Act classifies AI according to its risk: unacceptable risk (e.g. social scoring systems) is prohibited; high-risk AI systems are regulated; limited-risk AI systems are subject to lighter transparency obligations; minimal risk systems (the majority of AI applications available in Europe, like AI-enabled video games) are unregulated.

The bulk of obligations fall on providers of high-risk AI systems: that is, those who intend to put high-risk AI systems into service in the EU, regardless of whether they are based in the EU.

Users are natural or legal persons that deploy an AI system in a professional capacity: these users, or deployers, of AI systems have some obligations, though fewer than developers.

General purpose AI (GPAI) models have specific guidelines: all providers must supply technical documentation and instructions for use, comply with the Copyright Directive, and publish a summary of the content used for training. Providers of free and open license GPAI models have fewer requirements, and providers whose models present a systemic risk are subject to further guidelines.

You can read the rest of the page’s High-Level Summary for more information; I’ll focus the rest of this overview on responses and analysis. In early 2023, Lawfare raised the concern that, given our current understanding of AI, the Act’s standards may not be technically feasible. In particular, their article raised the salient point that it’s nearly impossible to anticipate most of a neural network’s potential failure modes, such as producing dangerous content or identifying and learning irrelevant patterns in data. This unpredictability makes it difficult to test whether systems would adhere to principles like the EU’s. There is work in this direction: Anthropic and OpenAI both do red-teaming, and the recent Guaranteed Safe AI paper is part of a larger effort to develop systems whose safety and reliability can be formally verified. But it remains unclear whether we could construct standards around these approaches, and whether approaches like complete formal verification for safety could work even in principle.

Unsurprisingly, the Act has myriad implications. An article from the Fordham Journal of Corporate and Financial Law covers criticisms and backlash against the Act: an open letter raised the concern that heavy-handed regulation of AI systems in the EU could make compliance costs high and impede competitiveness, driving companies to leave the EU. Indeed, the regulations have already kept some companies from engaging with the EU: Meta has said it won’t launch its upcoming multimodal Llama model there due to unpredictability in the European regulatory environment.

While we know the general shape and thrust of the regulations, much remains unclear. Those few applications classified as high-risk will need to meet certain obligations such as a pre-market conformity assessment, while GPAI developers will face light transparency requirements. But full compliance measures for GPAI developers are still under discussion: the EU’s AI Office has called for help with shaping rules for these systems, and companies like OpenAI will be working closely with the Office over the coming months.

Our Take

The AI Act is, understandably, imperfect. In fact, it’s imperfect in ways that could be fixed before applying it. But we once again have a classic story: the EU is regulating too much, and therefore will hamper its competitiveness. There’s truth to this, or it wouldn’t bear repeating. But let’s remember that only “high-risk” systems will be carefully regulated. This category includes components and products already subject to existing safety standards (such as medical devices), or systems used for a sensitive purpose such as biometrics, education, or law enforcement.

The question, then, is whether rolling out regulations for systems with these use cases is jumping the gun. By nature, any regulation placed on an evolving technology like AI will fail to capture all possible use cases and will impose unwanted restrictions. Laura Caroli, lead technical negotiator and policy advisor to AI Act co-rapporteur Brando Benifei, wrote about some of these challenges and argued that critics underestimate the Act’s flexibility. She also argues that comparisons to the GDPR don’t hold up, since under the AI Act, market surveillance authorities intervene where infringements of the law take place, as opposed to where a provider is established. This addresses the risk that a single authority is overwhelmed by enforcement cases.

She does concede that the Act’s emphasis on the intended purpose of a narrow high-risk AI system is a major flaw that could render most of the regulation impossible to implement. But, as she says, it will only be possible to verify the Act’s feasibility in a few years, and the current version of the Act serves as a basis that can be built on in the future. I tend to agree that the Act is very much an experiment in governance that we will and should learn from. Regardless of its intentions, though, the perception of the Act and the responses to that perception matter. The EU has to play a game of not only developing good policy, but also convincing the world and would-be EU-based founders that it supports the innovation it is so often accused of stymieing.

—Daniel

Research Highlight: Self-Taught Evaluators

Summary

This paper seeks to further reduce the pesky bottleneck that is the need for human-labeled data to improve language models. It presents a method for improving language models’ abilities to judge (i.e. label) prompt-response pairs without needing any of the human annotations that RLHF typically requires. Using this Self-Taught Evaluator method, the authors are able to improve a Llama3-70B-Instruct model from 75.4 to 88.3 on RewardBench, a recently published benchmark specifically for LLM reward models. The Self-Taught Evaluator model outperforms commonly used LLM judges like GPT-4 and matches top reward models trained with human-labeled examples. The method demonstrates the potential for creating strong LLM evaluators which can then be used as part of a larger RLHF or RLAIF pipeline.

Overview

The crux of RLHF is training a reward model that emulates human judgment. Traditionally (by which I mean since 2022), reward models for evaluating LLMs have required human preference judgments, which can be costly and time-consuming to collect and can become outdated as models improve. The authors of this paper propose an iterative self-improvement scheme that instead only requires human-generated prompts.

Their method starts with these human-generated prompts and a seed LLM. For each prompt, it generates a contrasting pair of model outputs, designed such that one response is likely inferior to the other. Rather than just asking for a good response and a worse response, the authors first generate a modified version of the prompt that is “highly relevant but not semantically identical”. The model’s response to this relevant but not-quite-the-same prompt is the “bad response”, while the response to the real prompt is the “good response”.

Next, using the model as an LLM-as-a-Judge, they generate binary judgments and reasoning traces about these judgments for each synthetic pair of responses. The judgments can be labeled as correct or incorrect based on the synthetic preference pair design. The reasoning traces and judgments are filtered to keep only the cases where the judgment is correct (i.e. the model prefers the response to the real prompt rather than the response to the slightly off prompt). Then this dataset, consisting of tuples of the form (prompt, good response, bad response, judgment, reasoning for judgment), is used to do supervised finetuning of the LLM-as-a-Judge. The process can then be iterated for further self-improvement.
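To make the data-construction loop concrete, here is a minimal Python sketch of one iteration as described above. It is not the authors’ code: `generate`, `perturb_prompt`, and `judge` are hypothetical callables standing in for calls to the current model, and alternating which response is shown first is an assumption of this sketch (so that the correct verdict is not always the same label), not a detail taken from the paper.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class JudgeExample:
    prompt: str
    good_response: str
    bad_response: str
    judgment: str   # "A" or "B": the position the judge preferred
    reasoning: str  # the judge's reasoning trace


def build_training_set(
    prompts: List[str],
    generate: Callable[[str], str],                       # hypothetical: model response to a prompt
    perturb_prompt: Callable[[str], str],                 # hypothetical: related-but-different prompt
    judge: Callable[[str, str, str], Tuple[str, str]],    # hypothetical: -> (verdict, reasoning)
) -> List[JudgeExample]:
    """Build one round of synthetic judge-training data, keeping only correct judgments."""
    examples = []
    for i, prompt in enumerate(prompts):
        good = generate(prompt)                  # response to the real prompt
        bad = generate(perturb_prompt(prompt))   # response to the slightly-off prompt

        # Assumption of this sketch: alternate presentation order between examples.
        good_first = i % 2 == 0
        response_a, response_b = (good, bad) if good_first else (bad, good)

        verdict, reasoning = judge(prompt, response_a, response_b)
        correct = "A" if good_first else "B"

        # Keep the example only when the judge preferred the "good" response.
        if verdict == correct:
            examples.append(JudgeExample(prompt, good, bad, verdict, reasoning))
    return examples
```

The returned examples would then be used for supervised finetuning of the judge, and the finetuned model would supply `generate` and `judge` in the next round.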

In their experiments, the authors used Llama3-70B-Instruct as the initial seed model. Through their iterative training process, they improved its performance on RewardBench from 75.4 to 88.3 (or 88.7 with majority voting). This result outperforms commonly used LLM judges such as GPT-4 and matches the performance of top-performing reward models trained with labeled examples. The paper also presents various ablation studies and analyses, including comparisons with models trained on human-annotated data, experiments with different data sources, and investigations into the impact of instruction complexity and data mixing strategies.
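The majority-voting variant mentioned above can be sketched in a few lines of Python: sample several judgments for the same pair and return the most common verdict. The `judge_once` callable and the default of five samples are assumptions for illustration; this summary does not state how many samples the authors used.

```python
from collections import Counter
from typing import Callable


def majority_vote(judge_once: Callable[[], str], n_samples: int = 5) -> str:
    """Sample n_samples verdicts (e.g. "A" or "B") and return the most frequent one.

    judge_once is a hypothetical zero-argument callable that runs the judge once,
    with sampling enabled, on a fixed (prompt, response A, response B) triple.
    """
    votes = Counter(judge_once() for _ in range(n_samples))
    return votes.most_common(1)[0][0]
```

With two possible verdicts, an odd `n_samples` avoids ties. The cost is `n_samples` judge generations per comparison, which adds to the inference cost of generative judges.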

The Self-Taught Evaluator approach demonstrates the potential for creating strong evaluation models using only synthetic data. This method addresses the need for expensive human annotations and the problem of data becoming outdated as models improve. The authors suggest that their technique could be valuable for scaling to new tasks or evaluation criteria and could potentially empower the entire workflow of LLM development, including training, iterative improvement, and evaluation. However, they also note some limitations, such as the higher inference cost of generative LLM-as-a-Judge models compared to simple classifier-based reward models.

Our Take

Evaluating natural language generation is hard, even for humans. The history of automating evaluation of NLG is somewhat fraught: first there were metrics designed to measure how close some generated text was to some reference text–ROUGE for summarization, BLEU for translation, and more recently MAUVE for open-ended generation. But metrics lack the endless nuance of language and using them as rewards in an RL system didn’t work too well: optimizing for either BLEU or ROUGE resulted in models whose outputs got really good ROUGE and BLEU scores but didn’t read well to actual humans.

Of course, if you’ve built a system to process natural language at scale and you have any faith in your system, the natural thing to do is to use your system for processing natural language to automate evaluation. And indeed, RLHF and RLAIF have been massively successful for creating chatbots that are helpful. Training on large amounts of synthetic data is the norm for state-of-the-art language models these days. The always well-informed Nathan Lambert reports “rumors that OpenAI is training its next generation of models on 50 trillion tokens of largely synthetic data”. Likewise, Google DeepMind’s report on synthetic data for language models from April of this year is largely bullish about the trend, noting that “As we approach human-level or even superhuman-level intelligence, obtaining synthetic data becomes even more crucial, given that models need better-than-average-human quality data to progress.”

And yet synthetic language data, particularly when its justification is “number go up on benchmark”, leaves a bad taste in my mouth. LLMs are not humans and LLM-generated text is not a perfect substitute for human-generated text. RLHF has been shown to reward sycophancy and reduce the diversity of model outputs, and I’m kept up at night by the thought that methods like Self-Taught Evaluators, which further remove human guidance and judgment from the training objective, could be having negative effects on outputs in ways that aren’t captured by existing benchmarks.

–Cole

New from the Gradient

Pete Wolfendale: The Revenge of Reason

We Need Positive Visions for AI Grounded in Wellbeing

Peter Lee: Computing Theory and Practice, and GPT-4’s Impact

Other Things That Caught Our Eyes

News

College Football 25 wouldn’t have been possible without AI, EA boss says

EA Sports' College Football 25, the latest installment in the beloved sports simulation franchise, was made possible by the use of AI and machine learning technology, according to Electronic Arts CEO Andrew Wilson. The game features 150 unique stadiums and over 11,000 player likenesses, which would not have been achievable without the power of AI. While the player likenesses were enhanced by talented artists, the algorithmic work provided a foundation for their creation. Wilson emphasized that AI was crucial in delivering the level of gameplay and visual fidelity seen in College Football 25. This reliance on AI and machine learning will also extend to the upcoming EA Sports FC 25, the successor to the FIFA franchise. The use of AI technology will drive enhanced tactical sophistication and realism in gameplay. The availability of College Football 25 is currently limited to PlayStation 5 and Xbox Series X.

This Week in AI: Companies are growing skeptical of AI’s ROI

A recent report by Gartner suggests that around one-third of generative AI projects in the enterprise will be abandoned after the proof-of-concept phase by the end of 2025. The main barrier to adoption is the unclear business value of generative AI. The cost of implementing generative AI organization-wide can range from $5 million to $20 million, making it difficult for companies to justify the investment when the benefits are hard to quantify and may take years to materialize. Additionally, a survey by Upwork found that AI tools have actually decreased productivity and added to the workload of workers. This growing skepticism towards AI's return on investment indicates that companies are expecting more from AI and vendors need to manage expectations.

Opinion | I hate the Gemini ‘Dear Sydney’ ad more every passing moment

The article criticizes the Gemini "Dear Sydney" ad for Google's AI product. The ad features a little girl who wants to write a letter to her idol, Olympic hurdler Sydney McLaughlin-Levrone. Instead of letting his daughter write the letter herself, the girl's dad asks Gemini AI to write it on her behalf. The author argues that this ad misses the point of writing, which is to express one's own thoughts and ideas. They believe that relying on AI to write for us takes away our ability to think for ourselves. The article criticizes the idea of replacing human creativity and personal connection with AI-generated content.

‘You are a helpful mail assistant,’ and other Apple Intelligence instructions

Apple's latest developer betas include generative AI features that will be available on iPhones, iPads, and Macs in the coming months. These features are supported by a model that contains backend prompts, which provide instructions to the AI tools. The prompts are visible on Apple computers and give insight into how the features work. For example, there are prompts for a "helpful mail assistant" that instruct the AI bot on how to ask questions based on the content of an email. Another prompt is for the "Rewrite" feature, which provides instructions on limiting answers to 50 words and avoiding the creation of fictional information. The files also contain instructions for generating "Memories" videos with Apple Photos, with guidelines to avoid religious, political, harmful, or negative content. These prompts give users a glimpse into the inner workings of Apple's AI tools.

Waymo expands robotaxi coverage in Los Angeles and San Francisco

Waymo, the self-driving car company owned by Alphabet, is expanding its robotaxi service area in Los Angeles and San Francisco. In San Francisco, Waymo is adding 10 square miles to its service area, including Daly City, Broadmoor, and Colma. In Los Angeles, it is adding 16 square miles, including Marina del Rey, Mar Vista, and Playa Vista. Waymo has been working to scale its commercial operations and increase its customer base. It has provided over two million paid trips to riders across all Waymo One markets and serves over 50,000 paid trips each week. Waymo's operations are still supported by Alphabet, which recently announced a $5 billion investment. The expansion comes after receiving approval from the California Public Utilities Commission. Waymo currently has about 300 vehicles in San Francisco, 50 in Los Angeles, and 200 in Phoenix. The company has seen increased demand in Los Angeles, with over 150,000 people signing up for the waitlist.

Your Next Home Insurance Nightmare: AI, Drones, and Surveillance

The article discusses the use of AI-powered aerial surveillance by insurance companies, specifically focusing on the case of Travelers. The author, who is a privacy advocate, shares their personal experience of having their homeowner's insurance policy revoked due to AI surveillance detecting moss on their roof. The author raises concerns about the lack of transparency and accountability in the use of AI surveillance by insurance companies, highlighting the potential for unnecessary home repairs and the pressure on homeowners. The article emphasizes the need for updated laws and regulations to protect consumers from the risks of AI surveillance.

Researchers have found that robotic vehicles can improve traffic flow in cities even when mixed with vehicles driven by humans. Using reinforcement learning, the researchers developed algorithms that allow robot vehicles to optimize traffic flow by communicating with each other. The experiments showed that when robot vehicles made up just 5% of traffic, traffic jams were eliminated, and when they made up 60% of traffic, traffic efficiency was superior to traffic controlled by traffic lights. This research is significant because it offers a potential solution to the worsening traffic problem in cities, without requiring all vehicles to be autonomous. The researchers plan to expand their framework and test it under real-world conditions.

Britain cancels $1.7 billion of computing projects in setback for global AI ambitions

The U.K. government has canceled £1.3 billion ($1.7 billion) worth of computing infrastructure projects, including a £500 million pledge for the AI Research Resource and an £800 million commitment for a next-generation exascale computer. These projects were aimed at bolstering the U.K.'s compute infrastructure and its ability to run advanced AI models. The cancellation is a setback for the country's ambitions to become a world leader in artificial intelligence. The government cited the need to prioritize other fiscal plans and address unfunded commitments. The Labour government is now looking to bring in new statutory regulations for the AI industry.

Figure’s new humanoid robot leverages OpenAI for natural speech conversations

Figure has unveiled its latest humanoid robot, the Figure 02, which leverages OpenAI for natural speech conversations. The robot is equipped with speakers and microphones to communicate with humans in the workplace. The use of natural language capabilities in humanoid robots adds transparency and allows for better instruction and safety. Figure's partnership with OpenAI, which helped raise a $675 million Series B funding round, highlights the importance of neural networks in the robotics industry. The Figure 02 also features a ground-up hardware and software redesign, including improved hands, visual language models, and six RGB cameras. The company hints at a future expansion beyond the warehouse/factory floor to commercial and home applications.

Colorado schools have AI roadmap to guide students and teachers into brave new world

The Colorado Education Initiative has released a roadmap to guide the integration of artificial intelligence (AI) into education policy and curriculums. The roadmap focuses on three main areas: teaching and learning, advancing equity, and developing policy for transparent and ethical use. It emphasizes the importance of students understanding concepts like AI hallucinations, data privacy, and potential bias in AI. The roadmap provides examples of how AI can support students, such as tailoring curriculum to match their learning pace and serving as a real-time tutor. For educators, AI has the potential to increase the amount of time available to work directly with students and reduce time spent on administrative work. The roadmap also highlights the need to engage all students, including English language learners and rural families, in learning about AI and recommends conducting audits to identify disparities in access to classroom technology. The roadmap encourages fluid guidance over rigid policies due to the rapidly changing nature of AI and emphasizes the importance of transparency and ethical use of data. The next steps include creating a task force on AI and updating conduct and discipline policies. The roadmap will be revised based on the learnings from a pilot program called Elevate AI.

Reddit to test AI-powered search result pages

Reddit plans to test AI-powered search result pages that will provide AI-generated summaries and content recommendations at the top of search results. The goal is to help users explore content and discover new Reddit communities. The company will use a combination of first-party and third-party technology to power this feature, and the experiment will begin later this year. This initiative aligns with Reddit's partnership with OpenAI, which allows them to leverage OpenAI's language models and build AI-powered features. During the earnings call, Reddit's CEO also highlighted the success of their AI-powered language translation feature and the company's growth in user base and revenue.

ProRata.ai, a generative AI startup, has secured licensing deals with major media and music companies, including the Financial Times, Axel Springer, The Atlantic, Fortune, and Universal Music Group. The company promises accurate attribution to source material and a revenue share plan on a per-use basis with publishers. ProRata aims to reframe the industry standard by analyzing AI output and content value to determine proportional compensation for publishers. The company also plans to launch its own AI chatbot. The startup raised $25 million in a Series A fundraising round and will be led by tech entrepreneur Bill Gross.

Audible is testing an AI-powered search feature

Audible, the audiobook company owned by Amazon, is testing an AI-powered search feature called Maven. Maven is a personal recommendation expert that uses natural language processing to provide tailored audiobook recommendations based on users' specific requests. The feature is currently available to select U.S. customers on iOS and Android devices and is limited to a subset of Audible's catalog. Audible is also experimenting with AI-curated collections and AI-generated review summaries. The company did not specify which AI models are powering Maven but stated that it will continuously evaluate and enhance the feature. This announcement comes after the backlash from creatives over the increasing use of AI-voiced audiobooks.

Papers

Toward learning a foundational representation of cells and genes

Speech-MASSIVE: A Multilingual Speech Dataset for SLU and Beyond

Scaling LLM Test-Time Compute Optimally can be More Effective than Scaling Model Parameters

Let Me Speak Freely? A Study on the Impact of Format Restrictions on Performance of LLMs

CoverBench: A Challenging Benchmark for Complex Claim Verification

MedTrinity25M: A Large-scale Multimodal Dataset with Multigranular Annotations for Medicine

MMIU: Multimodal Multi-image Understanding for Evaluating Large Vision-Language Models

ProCreate, Don’t Reproduce! Propulsive Energy Diffusion for Creative Generation

VidGen-1M: A Large-Scale Dataset for Text-to-video Generation

GPUDrive: Data-driven, multi-agent driving simulation at 1 million FPS

Closing Thoughts

Have something to say about this edition’s topics? Shoot us an email at editor@thegradient.pub and we will consider sharing the most interesting thoughts from readers in the next newsletter! For feedback, you can also reach Daniel directly at dbashir@hmc.edu or on Twitter. If you enjoyed this newsletter, consider donating to The Gradient via a Substack subscription, which helps keep this volunteer-run project afloat. Thanks for reading the latest Update from the Gradient!