Update #61: AI in Smartphone Cameras and MatFormer

We consider the implications of automated photo editing; researchers improve transformer inference efficiency.

Welcome to the 61st update from the Gradient! If you’re new and like what you see, subscribe and follow us on Twitter :) You’ll need to view this post on Substack to see the full newsletter!

Want to write with us? Send a pitch using this form.

News Highlight: AI in Smartphone Cameras

Summary

New smartphones, such as the Google Pixel 8, ship with a growing set of computational photography features that edit photos automatically. This makes image editing nearly effortless, and new generative AI tools allow for dramatic changes to images. However, it also reduces the authenticity of photos taken with the camera, and has implications for how we document, share, and consume visual experiences.

Overview

New smartphones include many AI-based computational photography technologies for processing and editing photos. Industry leaders such as Apple, Samsung, and Google have been advancing these technologies in their phones for many years. Recent progress in generative AI has led to new features that allow even larger changes to be made to photos, directly on a smartphone.

For example, the recently announced Google Pixel 8 has a Magic Editor tool that uses generative AI to move or erase objects in a scene. This tool can even fill in parts of objects that were not included in the original photo: for instance, if the right side of a dog is cut off at the edge of an image and a user then moves the dog to the left, the tool can generate the part of the dog that was not captured in the original image. The Best Take tool can blend a series of photos so that the best elements of each photo appear in the final product; for instance, several group photos can be merged so that everyone has their eyes open and a nice-looking smile in the blended photo.

Why does it matter?

The large majority of photos worldwide are taken on smartphone cameras. These photos play a key role in documenting, remembering, and sharing life experiences. As new AI features are tightly integrated into the smartphone photography process, AI will play a larger role in how people remember and share their experiences.

For example, one may imagine that people begin to remember visual experiences differently, influenced by significant AI-driven edits to the images of these experiences. Revisiting the same vacation spots or meeting old friends again may then be less enjoyable, given that they look different from the AI-influenced “memories”. These computational photography tools can also exacerbate existing social issues; for instance, teenage self-esteem and mental health struggles caused by self-comparison with Instagram photos may worsen further if AI makes dramatic photo edits more accessible.

Sources

Google Blog: Pixel 8 Pro

WIRED: None of Your Photos Are Real

Editor Comments

Daniel: Selective memory already impacts how we look at the past in the absence of technology. Features like AI in smartphone cameras feel like they could, in an odd way, both increase and decrease the amount of agency we have over what we remember. We can choose to select the “best”: our best smiles combined with our friends’ best smiles, the best combinations of lighting and background and everything else we might want in a photo. But the definition of “best” isn’t always ours, and isn’t always something we can even articulate to ourselves. For some of the same reasons I find AI-enhanced images of ourselves potentially troubling, I think it’s worth spending time thinking about what we want from technologies like Magic Editor and clarifying their benefits and drawbacks. At the same time, technologies like Magic Editor are fun and lower the barrier to certain creative pursuits. It’s worth being careful about the direction a technology might portend, but not overblowing what it is and what it’s capable of.

Research Highlight: MatFormer: Nested Transformer for Elastic Inference

Summary

"MatFormer: Nested Transformer for Elastic Inference" addresses the challenge of efficiently deploying Transformer models across settings with very different computational capacities. Current practice often involves resource-intensive training of multiple model sizes; the authors instead introduce MatFormer, which allows many smaller models to be extracted from a single trained model without additional optimization. This could change how models are deployed across different scales.

Overview

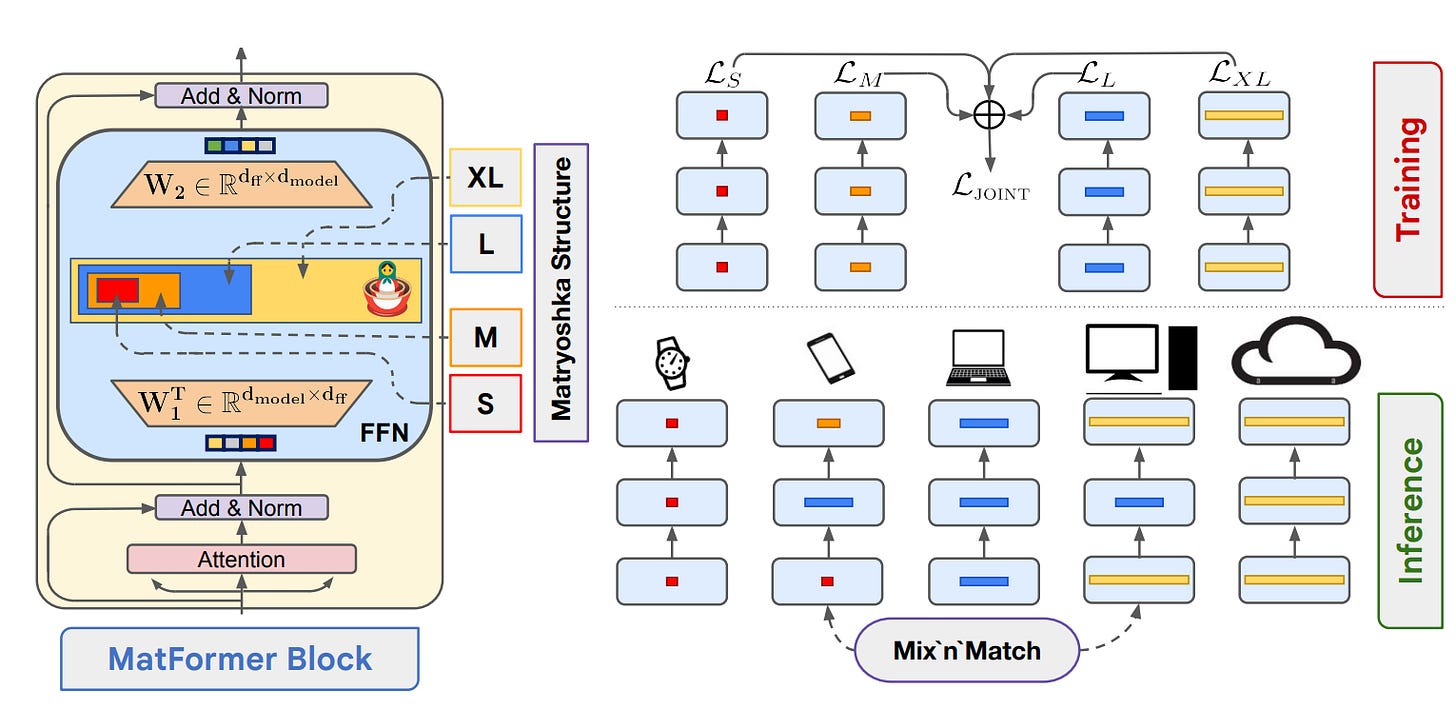

MatFormer aims to make the deployment of Transformer architectures both efficient and adaptable across diverse compute budgets. The method revolves around a nested design within the Transformer's Feed Forward Network (FFN) block. The FFN block in a Transformer is essentially two linear layers, separated by a nonlinearity, that process the output of the attention block. The nested design targets the FFN block because of its role in determining both model size and computational cost. The nested structure is composed of g Transformer blocks for some number g, with each block contained in its successor, much like Russian Matryoshka dolls. Because the blocks differ in granularity (size), each block applies its own FFN operation over its own nested subset of hidden neurons. In particular, the authors choose four exponentially spaced granularities, resulting in nested sets of hidden neurons of different sizes.
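
To make the nesting concrete, here is a minimal pure-Python sketch, not the authors' implementation. It assumes that a smaller block uses the first m hidden neurons of the full FFN, that the nonlinearity is a ReLU, and that the dimensions are toy-sized; `make_ffn`, `nested_ffn`, and the granularity values are hypothetical names and numbers chosen for illustration.

```python
import random

def make_ffn(d_model, d_ff, seed=0):
    """Random weights for a toy FFN: W1 is d_ff x d_model, W2 is d_model x d_ff."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-1, 1) for _ in range(d_model)] for _ in range(d_ff)]
    w2 = [[rng.uniform(-1, 1) for _ in range(d_ff)] for _ in range(d_model)]
    return w1, w2

def nested_ffn(x, w1, w2, m):
    """Apply the FFN using only the first m hidden neurons (assumed nesting rule)."""
    # Hidden activations come from the first m rows of W1 (ReLU is an assumption).
    hidden = [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in w1[:m]]
    # Project back to d_model using the first m columns of W2.
    return [sum(w * h for w, h in zip(row[:m], hidden)) for row in w2]

D_MODEL, D_FF = 4, 16
# Four exponentially spaced granularities (illustrative values, not the paper's).
GRANULARITIES = [D_FF // 8, D_FF // 4, D_FF // 2, D_FF]

w1, w2 = make_ffn(D_MODEL, D_FF)
x = [0.5, -1.0, 0.25, 2.0]

# Every granularity reads from the same weight matrices; no weights are copied.
outputs = {m: nested_ffn(x, w1, w2, m) for m in GRANULARITIES}
for m, out in outputs.items():
    print(m, [round(v, 3) for v in out])
```

In this sketch, the smallest submodel's weights are literally a slice of the largest one's, which is what lets a single set of trained parameters serve several model sizes.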

MatFormer's training strategy jointly optimizes all g nested submodels. This unified approach not only streamlines the training process but also achieves a 15% speed-up compared to training equivalent submodels individually. The result is a range of smaller submodels, all of which behave consistently with the full ("universal") model.
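
One common way to write such a joint objective, stated here as an assumption rather than as the paper's exact formulation, is a weighted sum of per-submodel losses. The symbols M_i (the i-th nested submodel), λ_i (a per-submodel weight), and 𝓛 (the task loss) are notation introduced for this sketch:

```latex
\mathcal{L}_{\text{joint}}(x, y) = \sum_{i=1}^{g} \lambda_i \, \mathcal{L}\big(M_i(x),\, y\big)
```

Under this reading, each gradient step updates the shared parameters using the losses of all g submodels at once, so the smaller submodels are trained alongside the largest one rather than in separate runs.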

MatFormer additionally introduces "Mix'n'Match", a method of customizing model structures by mixing different granularities across layers. While it's trivial to extract g submodels by using the same granularity in every layer, Mix'n'Match takes it a step further. By selecting a different granularity for each layer, a combinatorially large number of accurate smaller models can be generated.
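
A small counting sketch shows why the number of extractable models grows so quickly. The labels and the three-layer depth are hypothetical; the only assumption is that each layer can independently pick any of the g granularities.

```python
from itertools import product

GRANULARITIES = ["S", "M", "L", "XL"]  # hypothetical labels for g = 4
NUM_LAYERS = 3                         # toy depth, for illustration only

# Same granularity in every layer: exactly g submodels.
uniform = [tuple([g] * NUM_LAYERS) for g in GRANULARITIES]

# Mix'n'Match-style choice: any granularity per layer, so g ** NUM_LAYERS options.
mix_n_match = list(product(GRANULARITIES, repeat=NUM_LAYERS))

print(len(uniform), len(mix_n_match))  # prints: 4 64
print(mix_n_match[:3])
```

Even at this toy depth, the per-layer choice yields 64 configurations against 4 uniform ones, and the count grows exponentially with the number of layers.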

For deployment, MatFormer caters to both static and dynamic workloads. Static workloads are those where compute resources are known beforehand and the inputs remain relatively similar in difficulty. In such environments, the most fitting submodel can be selected using Mix'n'Match. Dynamic workloads, on the other hand, are those where the available compute is unpredictable and can vary significantly on the fly. In such scenarios, MatFormer's universal model can dynamically extract the optimal submodel based on evolving conditions. A standout feature of these deployments is the pronounced behavioral consistency across all submodels, which streamlines inference and minimizes prediction drift.
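
The selection step can be sketched as a search over per-layer configurations under a compute budget. This is a hypothetical heuristic, not the paper's procedure: it assumes cost is proportional to the total FFN hidden width, and it treats "widest that fits" as a stand-in for "most accurate". The function `pick_config` and all numbers are invented for illustration.

```python
from itertools import product

HIDDEN_SIZES = [2, 4, 8, 16]  # illustrative per-layer FFN widths
NUM_LAYERS = 3                # toy depth

def pick_config(budget):
    """Return the widest per-layer configuration whose total width fits the budget.

    Returns None when even the smallest configuration exceeds the budget.
    """
    feasible = [
        config
        for config in product(HIDDEN_SIZES, repeat=NUM_LAYERS)
        if sum(config) <= budget
    ]
    if not feasible:
        return None
    return max(feasible, key=sum)

# Static workload: the budget is known once, so the choice is made once.
static_choice = pick_config(30)

# Dynamic workload: re-run the selection whenever the available budget changes.
for budget in (6, 30, 48):
    print(budget, pick_config(budget))
```

Because every configuration is sliced from the same trained weights, switching between them in this sketch requires no retraining, only a different choice of widths.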

Empirically, the authors showcase the efficacy of MatFormer across two domains: language models, termed MatLM, and vision encoders, termed MatViT. Their findings reveal that MatFormer scales as reliably and accurately as a standard Transformer. Specifically, for image classification tasks, MatViT models either match or surpass the performance of traditional Vision Transformers, as evidenced in the Figure below. Similarly, for language models, MatLM demonstrates significant advantages in terms of accuracy and computational efficiency.

Why does it matter?

AI models, and Transformer models in particular, are now widely deployed for many uses, which makes their efficient deployment important. The current approach of training distinct models for different deployment scenarios is resource-intensive and not always optimal. MatFormer offers a solution to this challenge. Instead of training multiple models, MatFormer allows for the extraction of several submodels from a single trained model, making deployment more flexible and cost-effective.

In a broader context, the efficient deployment of AI models has environmental implications: the computational resources used to train AI models have a large carbon footprint. By reducing the need for repeated model training, MatFormer not only improves computational efficiency but also moves towards a more sustainable and responsible approach to AI development.

New from the Gradient

Nao Tokui: “Surfing” Musical Creativity with AI

Divyansh Kaushik: The Realities of AI Policy

Other Things That Caught Our Eyes

News

Ukrainian AI attack drones may be killing without human oversight “Ukrainian attack drones equipped with artificial intelligence are now finding and attacking targets without human assistance, New Scientist has learned, in what would be the first confirmed use of autonomous weapons or ‘killer robots’.”

First supernova detected, confirmed, classified and shared by AI “A fully automated process, including a brand-new artificial intelligence (AI) tool, has successfully detected, identified and classified its first supernova.”

Stack Overflow lays off over 100 people as the AI coding boom continues “Coding help forum Stack Overflow is laying off 28 percent of its staff as it struggles toward profitability. CEO Prashanth Chandrasekar announced today that the company is ‘significantly reducing the size of our go-to-market organization,’ as well as ‘supporting teams’ and other groups.”

Waymo-Zeekr robotaxi poised for US testing by end of 2023 “Nearly two years after Waymo and Geely struck a deal to develop robotaxis for the U.S. market, we are seeing concrete progress in the collaboration.”

Millions of Workers Are Training AI Models for Pennies “In 2016, Oskarina Fuentes got a tip from a friend that seemed too good to be true. Her life in Venezuela had become a struggle: Inflation had hit 800 percent under President Nicolás Maduro, and the 26-year-old Fuentes had no stable job and was balancing multiple side hustles to survive.”

America Is About to See Way More Driverless Cars “Robotaxis are now picking up riders in L.A. and Houston—and facing a whole new set of challenges. The future of driverless cars in America is a promotional booth with a surfboard and a movie director’s clapboard.”

Biden to cut China off from more Nvidia chips, expand curbs to more countries “The Biden administration said on Tuesday it plans to halt shipments to China of more advanced artificial intelligence chips designed by Nvidia and others, part of a suite of measures aimed at stopping Beijing from getting cutting-edge U.S.”

China launches AI framework, urges equal AI rights for all nations “China’s initiative called for countries to uphold mutual respect when developing AI, suggesting that all nations ‘regardless of their size, strength or social system’ should have ‘equal rights’.”

Adobe May Be Tech’s Biggest AI Bet Yet “How hot is Adobe’s artificial intelligence act right now? Hot enough that it makes financial analysts forget about finance.”

Can You Hide a Child’s Face From A.I.? “There are two distinct factions of parents on TikTok: those who will crack eggs over their kids’ heads for likes and those who are trying desperately to make sure the internet doesn’t know who their children are.”

Anthropic brings Claude AI to more countries, but still no Canada (for now) “Anthropic, the ‘Constitutional AI’ foundation model startup from San Francisco that is perhaps the foremost rival to OpenAI, made a big move this week, bringing its Claude 2 large language model (LLM) chatbot to 95 countries total”

WHO outlines considerations for regulation of artificial intelligence for health “The World Health Organization (WHO) has released a new publication listing key regulatory considerations on artificial intelligence (AI) for health.”

Now is the time to stop AI from stealing our words “Tech companies are getting even richer by vacuuming up the work of writers without permission.”

Clearview AI Successfully Appeals $9 Million Fine in the U.K. “Clearview AI, a New York company that scraped billions of photos from the public internet to build a facial recognition app used by thousands of U.S. law enforcement agencies, will not have to pay a fine of 7.5 million pounds, or $9.1 million, issued by Britain’s chief data protection agency.”

Papers

Daniel: First off, a huge congratulations to the Generative Agents team—their work received a UIST 2023 Best Paper Award. If you want to learn more about Generative Agents, you should absolutely listen to my ~2.5-hour conversation with lead author Joon Park. In other news, OpenAI’s presentation of DALL-E 3 found that… surprise, surprise, improving the captions a text-to-image model is trained on improves the model’s ability to follow detailed image descriptions. This recent paper presents Eureka, an LLM-powered reward design algorithm that performs evolutionary optimization over reward code. These rewards can be used in reinforcement learning algorithms for acquiring complex skills.

Closing Thoughts

Have something to say about this edition’s topics? Shoot us an email at editor@thegradient.pub and we will consider sharing the most interesting thoughts from readers in the next newsletter! For feedback, you can also reach Daniel directly at dbashir@hmc.edu or on Twitter. If you enjoyed this newsletter, consider donating to The Gradient via a Substack subscription, which helps keep this grad-student / volunteer-run project afloat. Thanks for reading the latest Update from the Gradient!