Update #60: Wearable AI and Efficient Streaming Language Models

A slew of competitors announce AI-powered wearables, and researchers improve the performance of streaming language models by exploiting attention sinks.

Welcome to the 60th update from the Gradient! If you’re new and like what you see, subscribe and follow us on Twitter :) You’ll need to view this post on Substack to see the full newsletter!

Want to write with us? Send a pitch using this form.

News Highlight: AI That You Can Wear

Summary

At Paris Fashion Week, the fashion label Coperni showcased a new wearable tech device called the "Humane Ai Pin." This device, previously shrouded in mystery, was displayed on several models, hinting at its design and potential use in daily fashion. Meanwhile, several competitors (Meta, Tab, and Rewind) have announced their own AI wearables. Jony Ive, the former lead Apple designer, is said to be planning the “iPhone of AI” with OpenAI.

Overview

Humane, a company with roots tracing back to former Apple employees, has been hinting at a transformative approach to technology, and its "Humane Ai Pin" has been a topic of intrigue for tech enthusiasts. Until Paris Fashion Week, the device had only been seen in vague silhouettes on Humane's website and during a TED demo by its co-founder, Imran Chaudhri.

Humane describes the pin as a "screenless, standalone device and software platform built from the ground up for AI" and designed to be "privacy-first": its designers eschewed features like wake words, ensuring it doesn't engage in "always-on" listening. At the fashion event, the Ai Pin was prominently pinned to the attire of several Coperni models, giving the world a clearer glimpse of its design. However, while its appearance is now somewhat demystified, its exact functionalities and interaction methods remain largely undisclosed.

Meta introduced its next-generation Ray-Ban Meta smart glasses collection at a recent event. The glasses now integrate the Meta AI conversational assistant, which can be engaged with a wake word. Users can use the assistant to, in Meta’s words, “spark creativity, get information, and control [their] glasses.”

The Rewind Pendant is a wearable that looks like a necklace and captures audio from the real world—it then transcribes, encrypts, and stores that information locally on a user’s phone. The pendant looks bare-bones—a simple necklace with a cylindrical pendant—and is sold as being “powered by truly everything you’ve seen, said, or heard.”

Tab’s founder, Avi Schiffmann, announced and demoed the device at a recent event. Tab is also a necklace, and Schiffmann presented the device as a database of users' conversations and daily lives that can help them with work, idea generation, and more. The personal assistant can extract information from multiple sources, and Schiffmann describes interaction with the system as “a late night conversation with a friend, as the AI truly understands you.” For privacy and security reasons, Tab does not store transcripts or audio recordings.

Why does it matter?

The different competitors in the wearable AI space have a number of different reasons for developing their systems. Meta and Amazon have a particular interest in seeking smartphone alternatives because of Google and Apple’s duopoly in the smartphone space, and so Meta might seek to make its device an explicit replacement for the smartphone. The Rewind Pendant, however, stores its data on users’ phones and doesn’t present itself as an alternative. Tab’s presentation also doesn’t position it explicitly against the smartphone. While there are few details on the Humane Ai Pin, it is described as a “post-smartphone” device.

Whether or not it seeks to oust the smartphone, each competitor in the wearable AI space presents a picture of how conversational AI systems could integrate more seamlessly into our daily lives. A user might, following a long conversation with coworkers about a product, leave the room and ask her device to help her brainstorm ideas for the product’s future direction and align those with her coworkers’ opinions.

Wearable AI devices present a compelling picture of how our interactions with AI systems might look different from how they do today. These devices are likely to perform well with early adopters who want to try something new, but the journey to a truly “post-smartphone” era or a fundamentally altered way of interacting with AI systems will be a longer one.

Editor Comments

Daniel: I’m pretty excited to see this array of new device announcements, primarily because they gesture towards more imaginative ways that we could use AI systems. I don’t know if the “final form” of a wearable AI device will look like a pendant or a pin or something else, but I hope to see further exploration of possibilities for interacting with AI systems that involve both software and hardware: we can move beyond text boxes, and we might have conversational sparring partners at our constant disposal. But the privacy and observability angles are important, too. These devices take different approaches to the “always on” question, for instance. It seems important that we should know when our conversations and interactions are being transcribed and have easy access to delete this information. I, for one, don’t think that immediate access to every conversation we’ve ever had sounds like the best idea in the world. Ted Chiang’s short story “The Truth of Fact, the Truth of Feeling” explores a world in which a lifelogging system called Remem effectively grants its users eidetic memory over everything that ever happened to them. The picture of human interaction that this story paints feels uncomfortable. I don’t mention this story to say that these wearable AI devices are anything like Remem or that they are barreling in this direction, but those of us who build and use these devices should think carefully about ways they might be used and how they could affect our interactions with others, so that they’re designed thoughtfully.

I think, too, that the advantage a company like Meta has in distribution will manifest in how tools like “AI glasses” might look over time: accessories like the Apple Watch or VR glasses were launched and tinkered with long before we could expect widespread adoption. Meta plans to expand availability and what its glasses-bound AI can be asked over time, and the feedback and experience of early users will play a part in the direction it takes.

Research Highlight: Efficient Streaming Language Models with Attention Sinks

Summary

A team from MIT, Meta AI, and Carnegie Mellon discovered a simple and efficient way to improve the performance of streaming language models, which iteratively generate long sequences of text. Their method is based on the observation that such language models appear to use the first few tokens in the sequence as “attention sinks”, whereby later tokens attend strongly to the first tokens even when the first tokens may not be semantically relevant. Keeping such attention sinks in the context of a streaming language model or pretraining a language model with special attention sink tokens then efficiently improves language modelling performance on long sequences.

Overview

As covered in Update 54, several recent works have developed methods for increasing the context length of Transformer-based LLMs — the number of tokens that they can process at once. This new work tackles the related problem of generating long sequences in the streaming setting, where an autoregressive LLM iteratively generates long sequences of text one token at a time, using cached keys and values of previously generated tokens. For instance, if using an LLM as a personal assistant, then keys and values of recent parts of the conversation can be cached, so that new responses can be generated quickly. The authors leverage an “attention sink” phenomenon that they discover in LLMs.

This attention sink phenomenon refers to the authors’ empirical observation that LLMs such as Llama-2, MPT, Falcon, and Pythia appear to focus a lot of attention on initial tokens in a sequence, even if they are not semantically relevant to the current token being generated. In standard dense attention, a token must attend to its context with attention weights that sum to one, so that weight has to go somewhere even when nothing in the context is relevant. Much like the softmax plus one trick, placing the excess weight on a sink lets a token avoid updating itself much based on the context. The authors hypothesize that initial tokens, as opposed to other tokens, are consistently used as attention sinks because they are visible to almost all later tokens in the sequence during training, which makes them the easiest positions for the model to learn to use this way. This observation is similar to the discovery in ViTs of tokens with high attention scores but little semantic relevance to downstream tasks (see Mini-Update 27).
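The sum-to-one constraint is easier to see with numbers. The sketch below is an illustration only, not code from the paper: the score values are made up, and `softmax_plus_one` is written from the usual description of that trick (an extra 1 in the denominator). It compares how much total weight a query places on three irrelevant tokens under each scheme, and what happens when a high-scoring first token is available to absorb the weight.

```python
import math

def softmax(scores):
    """Standard softmax: weights are positive and always sum to one."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def softmax_plus_one(scores):
    """Softmax with an extra 1 in the denominator:
    exp(s_i) / (1 + sum_j exp(s_j)), so the weights may sum to less than one."""
    m = max(max(scores), 0.0)  # shift for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = math.exp(-m) + sum(exps)  # exp(-m) is the shifted "+1" term
    return [e / total for e in exps]

# Hypothetical attention scores for three tokens that are all irrelevant.
irrelevant = [-4.0, -5.0, -6.0]

# Standard softmax still spreads a full unit of weight over them.
forced = softmax(irrelevant)

# Softmax-plus-one lets almost all of the weight go nowhere.
relaxed = softmax_plus_one(irrelevant)

# With a high-scoring first token, standard softmax parks the weight there
# instead, which is the role an attention sink plays.
with_sink = softmax([3.0] + irrelevant)

print("standard, total weight:", sum(forced))
print("plus-one, total weight:", sum(relaxed))
print("weight on first token when a sink is present:", with_sink[0])
```

In the last case the irrelevant tokens receive almost no weight, so the query's output is dominated by whatever value the sink position carries rather than by the content of the other tokens.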

The authors then leverage this discovery of attention sinks to efficiently improve inference of pretrained LLMs without any further training. As illustrated in the Figure above, their StreamingLLM method uses a sliding window of fixed context length (2044 tokens for an LLM trained on sequences of length 2048), and also keeps the first 4 tokens of the sequence to serve as consistent attention sinks. This significantly improves language modelling performance on long sequences for Llama-2, MPT, Falcon, and Pythia.
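The cache policy described here (a few permanent sink entries plus a sliding window of recent entries) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' implementation: `SinkKVCache` is a hypothetical name, each entry stands in for one token's cached keys and values, and the sketch ignores how positions are encoded for the retained tokens.

```python
from collections import deque

class SinkKVCache:
    """Toy cache: keep the first `num_sinks` entries forever,
    plus the most recent `window` entries."""

    def __init__(self, num_sinks=4, window=2044):
        self.num_sinks = num_sinks
        self.sinks = []                     # never evicted
        self.recent = deque(maxlen=window)  # oldest entry drops automatically

    def append(self, entry):
        """Add one token's cached entry (here, any Python object)."""
        if len(self.sinks) < self.num_sinks:
            self.sinks.append(entry)
        else:
            self.recent.append(entry)

    def entries(self):
        """Everything the next token would be allowed to attend to."""
        return self.sinks + list(self.recent)

# Small example: 2 sinks and a window of 3, fed token indices 0..9.
cache = SinkKVCache(num_sinks=2, window=3)
for token_index in range(10):
    cache.append(token_index)
print(cache.entries())  # [0, 1, 7, 8, 9]
```

With the default sizes the cache never holds more than 4 + 2044 = 2048 entries, which matches the training length mentioned above, no matter how many tokens have been generated.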

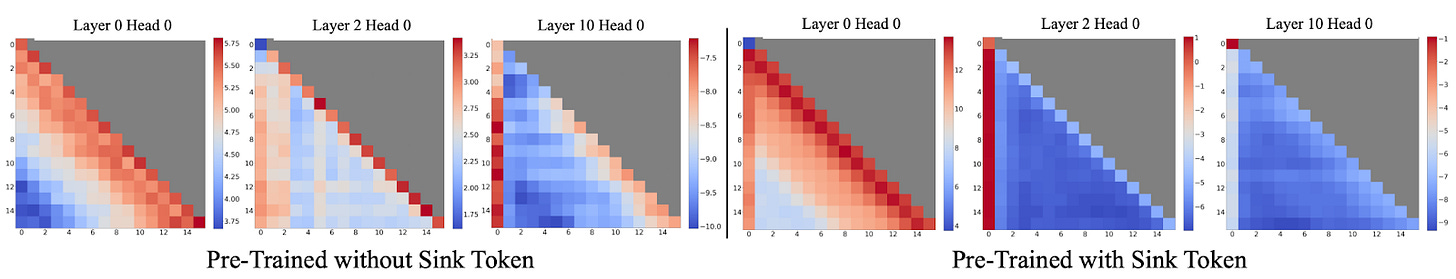

Another way to achieve similar results is to train the LLM with a designated sink token with a learnable embedding that is prepended to every training sequence. As seen in the above Figure, an LLM trained with a designated sink token more consistently uses that single token as an attention sink, and does not require several initial tokens to be used as attention sinks.
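On the data side, prepending a sink token amounts to a small preprocessing step. The sketch below assumes token IDs are plain integer lists; the function name `add_sink_token`, the ID `0` used for the sink, and the choice to truncate so sequences keep a fixed maximum length are all assumptions made for illustration, not details taken from the paper.

```python
def add_sink_token(sequences, sink_id, max_len):
    """Prepend `sink_id` to every sequence.

    Each sequence is truncated to `max_len - 1` tokens first so the result
    never exceeds `max_len` (an assumption of this sketch)."""
    return [[sink_id] + list(seq[: max_len - 1]) for seq in sequences]

# Hypothetical tokenized training batch; 0 is reserved here for the sink.
batch = [[5, 6, 7], [8]]
print(add_sink_token(batch, sink_id=0, max_len=3))  # [[0, 5, 6], [0, 8]]
```

The embedding for the sink ID would then be learned along with the rest of the model during pretraining.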

Why does it matter?

For several application areas, it is desirable to use LLMs that are capable of generating many tokens in a streaming setting. This work develops simple methods, applicable to pretrained LLMs, that improve the performance and efficiency of LLMs in this setting. Compared to the baseline that uses a sliding window with recomputation, the authors find that StreamingLLM can achieve up to a 22.2x speedup per token generated.

Furthermore, from an interpretability standpoint, the use of attention sinks in standard LLMs is unexpected and does not appear to have an analogy in human behavior. Thus, it may be possible that the approach of pretraining with a designated sink token leads to more interpretable behavior. Either way, it is important to study these behaviors in the powerful AI models that many people use these days.

New from the Gradient

Tal Linzen: Psycholinguistics and Language Modeling

Kevin K. Yang: Engineering Proteins with ML

Other Things That Caught Our Eyes

News

Dead grandma locket request tricks Bing Chat’s AI into solving security puzzle “Bing Chat, an AI chatbot from Microsoft similar to ChatGPT, allows users to upload images for the AI model to examine or discuss. Normally, Bing Chat refuses to solve CAPTCHAs, which are visual puzzles designed to prevent automated programs (bots) from filling out forms on the web.”

Why ‘AI copilot’ startups are so hot with VCs right now “As a small sampling: Corti, a Copenhagen-based startup using AI to assist healthcare providers in assessing patients, just raised a $60 million round in late September…”

NSA starts AI security center with eye on China and Russia as general warns U.S. lead ‘should not be taken for granted’ “Army Gen. Paul Nakasone said the center would be incorporated into the NSA’s Cybersecurity Collaboration Center, where it works with private industry and international partners to harden the U.S. defense-industrial base against threats from adversaries led by China and Russia.”

New roleplaying chatbots promise to indulge your sexual fantasies “AI has thrust deeper into carnal pleasures with the launch of a new roleplaying chatbot system. The feature is the brainchild of Bloom, an erotic audio platform based in Germany. The app also features 800 original sex stories, which range from the vanilla to the kinky (anyone for splashing?).”

Meta’s AI stickers are here and already causing controversy “Well, that didn’t take long: Just a week after Meta announced a ‘universe of AI’ for Facebook, Instagram and WhatsApp, the company’s new AI-generated stickers are already causing controversy.”

Coca-Cola's New AI-Generated Soda Flavor Falls Flat “As if the hype around AI couldn’t get any more exasperating, Coca-Cola had to hop on the bandwagon. The massive beverage company has tapped an artificial intelligence to serve as its advisor in developing a new flavor of its titular soft drink.”

How an AI deepfake ad of MrBeast ended up on TikTok “AI deepfakes are getting so good that a fraudulent MrBeast ad slipped past TikTok’s ad moderation technology to end up on the platform. In the ad, the massively influential creator appeared to be offering 10,000 viewers an iPhone 15 Pro for just $2.”

Job postings mentioning AI have more than doubled in two years, LinkedIn data shows “New data from LinkedIn shows that job postings that mention AI have soared, and professionals are responding to the shift.”

Google Bard is gaining a new 'Memory' toggle to remember key details “Google Bard now has the capability to learn more about users to provide personalized responses. Users can choose to enable or disable this feature, called ‘Memory,’ based on their preferences. Previously, each conversation with the Google Bard AI chatbot would begin from scratch.”

Anthropic Seeking $2 Billion Via Google After Amazon Pledge “Red-hot AI startup Anthropic is reportedly in talks to raise $2 billion in funding from Google and others, just days after Amazon committed to invest upwards of $4 billion in the startup.”

Microsoft introduces AI meddling to your files with Copilot in OneDrive “Microsoft is to overhaul OneDrive in a move that will bring Copilot to the cloud storage service and herd users towards the tool's web interface.”

ChatGPT-owner OpenAI is exploring making its own AI chips “OpenAI, the company behind ChatGPT, is exploring making its own artificial intelligence chips and has gone as far as evaluating a potential acquisition target, according to people familiar with the company’s plans.”

How Will A.I. Learn Next? “The Web site Stack Overflow was created in 2008 as a place for programmers to answer one another’s questions. At the time, the Web was thin on high-quality technical information; if you got stuck while coding and needed a hand, your best bet was old, scattered forum threads that often led nowhere.”

Bing’s AI image generator apparently blocks prompts about the Twin Towers “After some users of Bing’s DALL-E 3 integration found a loophole in the tool’s guardrails and generated art featuring several beloved animated characters and the Twin Towers, Microsoft seems to have blocked the ability to prompt anything related to the Twin Towers.”

ChatGPT provided better customer service than his staff. He fired them. “Artificial intelligence chatbots will upend how call centers and customer service hotlines operate. Countries like India and the Philippines are worried.”

Snap might have to withdraw its AI chatbot, watchdog says “The UK's data watchdog has told Snapchat it might have to stop offering its generative AI chatbot My AI. An initial probe into the company suggested a ‘worrying failure’ by parent company Snap over potential privacy risks, especially to children.”

AI Watermarks Are No Match for Attackers “Soheil Feizi considers himself an optimistic person. But the University of Maryland computer science professor is blunt when he sums up the current state of watermarking AI images. ‘We don’t have any reliable watermarking at this point,’ he says. ‘We broke all of them.’”

Papers

Daniel: I like this paper that aims to leverage AI for democratic discourse—its authors recruited over 1500 people who disagree on gun control and paired them off in online chat rooms to discuss the issue. An LM would intermittently read the conversation and suggest rephrasings of a user’s messages (to be more polite, understanding, etc.) before those messages were sent; the authors found significant increases in conversation quality and democratic reciprocity. Waymo released a neat paper that casts multi-agent motion forecasting as language modeling; their MotionLM represents continuous trajectories as sequences of tokens, and autoregressively produces joint distributions over interactive agent futures. A paper from Max Tegmark’s group that finds an internal world model in Llama-2 got a fair amount of pushback on Twitter for being unoriginal and not engaging with prior literature (Jim Fan also registered disagreement with the paper’s definition of “world model”).

Closing Thoughts

Have something to say about this edition’s topics? Shoot us an email at editor@thegradient.pub and we will consider sharing the most interesting thoughts from readers in the next newsletter! For feedback, you can also reach Daniel directly at dbashir@hmc.edu or on Twitter. If you enjoyed this newsletter, consider donating to The Gradient via a Substack subscription, which helps keep this grad-student / volunteer-run project afloat. Thanks for reading the latest Update from the Gradient!