Update #55: Hollywood on Strike and RetNet

Writers and screen actors strike due to concerns about AI systems; Microsoft researchers introduce a new architecture that improves on transformers' sequence length complexity and inference cost.

Welcome to the 55th update from the Gradient! If you’re new and like what you see, subscribe and follow us on Twitter :) You’ll need to view this post on Substack to see the full newsletter!

Want to write with us? Send a pitch using this form.

News Highlight: Hollywood is on strike with AI-related bargaining demands at the center

Summary

On April 18th, 97.85% of voting members of the Writers Guild of America (WGA) voted to authorize a strike against the Alliance of Motion Picture and Television Producers (AMPTP), and writers began picketing on May 2nd, 2023. About one month later, 97.91% of voting members of the Screen Actors Guild – American Federation of Television and Radio Artists (SAG-AFTRA) similarly voted to authorize a strike against the AMPTP, and actors began picketing on July 14th, 2023. In both cases, the role of artificial intelligence appears to be one of the largest areas of disagreement between the striking groups and the AMPTP. In its latest negotiations update, the WGA outlines its positions on regulating the use of AI on WGA-covered projects. Its demands include provisions that “AI can’t write or rewrite literary material; can’t be used as source material; and …covered material can’t be used to train AI.” SAG-AFTRA’s members share similar concerns, explaining that AI “can mimic members’ voices, likenesses and performances,” and they are seeking agreement on “acceptable uses, bargain protections against misuse, and ensure consent and fair compensation for the use of [actors’] work to train AI systems and create new performances.”

Overview

Since the strike authorizations, around 20,000 writers and 160,000 actors have agreed to a stoppage of on-camera acting, singing, dancing, stunt work, background acting, writing services, future project negotiations, and more, effectively shutting down the vast majority of movie and television productions, including current TV hits like Stranger Things, Yellowjackets, and Abbott Elementary, as well as upcoming films like Deadpool 3, Mission: Impossible 8, Venom 3, and more. In the months since, numerous actors and writers have taken their messages from the picket lines to traditional and social media to explain how they see current AI proposals as a threat to their livelihoods.

According to the chief negotiator for SAG-AFTRA, the AMPTP “propose[s] that [their] background performers should be able to be scanned, get paid for one day’s pay and their company should own that scan, … and to be able to use it for the rest of eternity… with no consent and no compensation.” However, the AI proposals are not limited to background actors. At a recent picket line in Los Angeles, Scrubs star Donald Faison described his own anxieties about studios using AI to recreate his likeness after his death, “saying things he wouldn’t say,” and about his family not being properly compensated. Similar sentiments have been shared by many other writers and actors, including Bryan Cranston, Aubrey Plaza, Rosario Dawson, Bowen Yang, Mandy Moore, Tim Heidecker, and Eric Wareheim.

Why does it matter?

In the words of SAG-AFTRA member and all-around very funny person Rob Delaney, “AI is… going to be used to further marginalize workers.” Not only are writers and actors going to be marginalized, but they are being asked to facilitate their own replacement by providing training data (scans and scripts) that studios and technology companies can use to put the actors and their likenesses into future projects, without their consent and for little to no compensation.

This is also not unique to the film industry. Across industries, we are seeing a growing phenomenon of workers being expected to help refine AI models, either explicitly as humans in the loop or indirectly as their work products (scripts, films, legal briefs, patient charts, etc.) are ingested into training pipelines. We can see these dynamics play out in call centers, law firms, and doctors’ offices, where workers are questioning how the decisions they make in their day-to-day jobs will be ingested into large AI models and could one day lead to their replacement.

We can see further parallels between the power dynamics at play for Hollywood workers, who are striking for control over and compensation for their inclusion in AI training materials and generated outputs, and the exploitation highlighted in a recent Atlantic article titled “America Already Has An AI Underclass.” The authors spoke with numerous human-in-the-loop workers who support Reinforcement Learning from Human Feedback (RLHF) techniques. These techniques are used by companies like OpenAI (Microsoft), Google, Amazon (where it is sold as a service), and Appen (which provides labeling services to each of those companies and more), where they are currently valued at around $7-14 billion and pay their workers in Africa as little as $1.50, while some US-based workers make between $12.75 and $14.50 an hour.

In each case, the media and technology companies, which are reporting that profits are the “best ever …propelled by Big Tech and A.I. hype,” are resisting their workers’ attempts to secure fair compensation for (or the option to opt out of) their inclusion in AI training sets, informed consent over how outputs generated from those models are used, and fair compensation for those outputs. Without the writers, the actors, and the data labelers (and many more people!), there would be no foundation for their foundation models’ success.

Research Highlight: Retentive Networks

Summary

Since its introduction, the Transformer has been used in a wide range of applications, from machine translation to text generation, and it is the basis for many state-of-the-art models such as GPT-4 and ChatGPT.

However, despite its success, the Transformer has some limitations: 1) it struggles with long sequences, because the cost of self-attention grows quadratically with sequence length; 2) its inference cost is high, because each newly generated token must attend to a key-value cache that grows with the sequence, which is a challenge when deploying models in resource-constrained environments.
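A back-of-the-envelope count makes the inference-cost limitation concrete. The sketch below counts only the token-mixing work per generated token, assuming a single head with d_k = d_v = d and ignoring projections and the FFN; the function names are hypothetical. With a key-value cache, softmax attention at step t touches all t cached tokens, whereas a fixed-size recurrent state (as in RetNet's recurrent retention) costs the same at every step.

```python
# Rough multiply-add counts for the token-mixing step only (projections and
# FFN ignored), single head, d_k = d_v = d. Helper names are hypothetical.


def attention_decode_step(t: int, d: int) -> int:
    """Softmax attention at step t (1-based) scores the new query against t
    cached keys (t*d) and takes a weighted sum of t cached values (t*d)."""
    return 2 * t * d


def retention_decode_step(d: int) -> int:
    """Recurrent retention updates a d x d state (S = gamma*S + k^T v) and
    reads it with the query (q S); counted here as roughly d*d each.
    Neither term depends on t."""
    return 2 * d * d


def total_decode_cost(n: int, d: int) -> tuple[int, int]:
    """Total work to generate n tokens one at a time: (attention, retention)."""
    attention = sum(attention_decode_step(t, d) for t in range(1, n + 1))
    retention = n * retention_decode_step(d)
    return attention, retention


if __name__ == "__main__":
    for n in (1_024, 4_096, 16_384):
        attn, ret = total_decode_cost(n, d=128)
        print(f"n={n:>6}: attention ~{attn:,} vs retention ~{ret:,} multiply-adds")
```

Under this simple model, attention's total is d·n(n+1), quadratic in n, while the recurrent total is 2d²n, linear in n; per step, attention becomes more expensive than the fixed-size state once t exceeds d.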

To address these concerns, a recent work from Microsoft introduces a new architecture called Retentive Network (RetNet). It addresses the above-mentioned limitations of the Transformer through a mechanism called "retention," which we cover in detail below.

Overview

The Retentive Network (RetNet) architecture consists of a stack of identical blocks, similar to the Transformer. Each RetNet block contains two modules: a multi-scale retention (MSR) module and a feed-forward network (FFN) module.

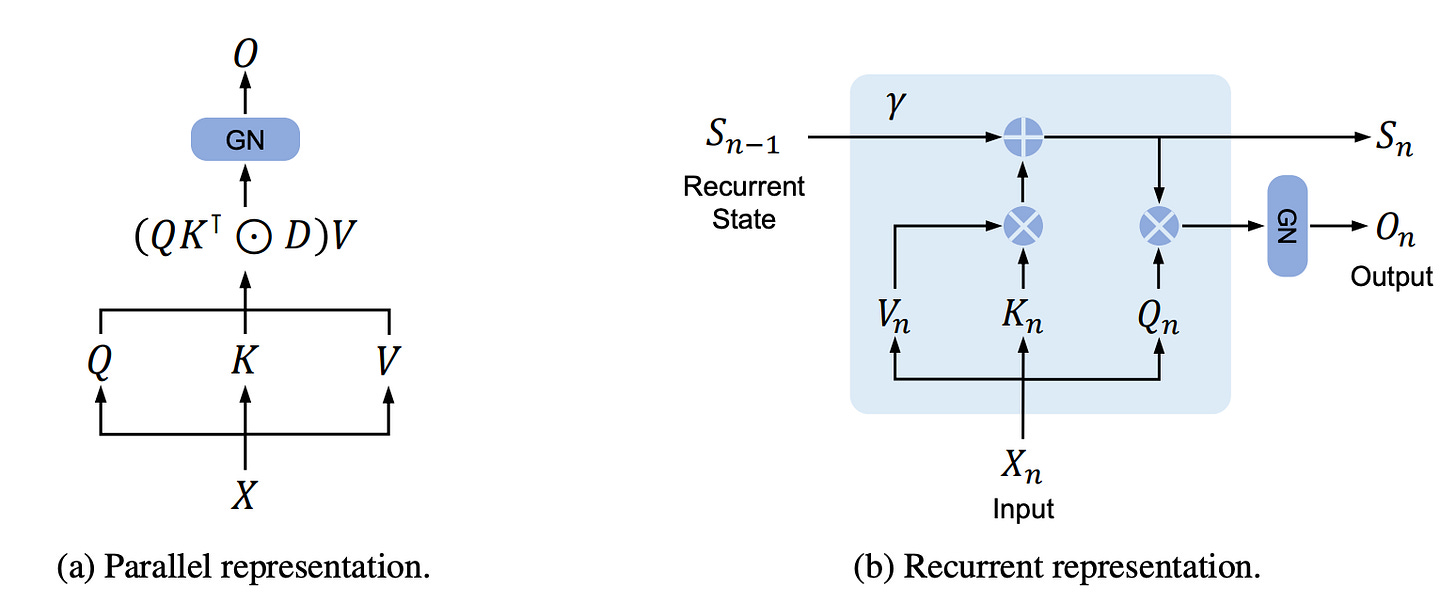

The architecture of RetNet is built on "retention," which supports three computation paradigms: parallel, recurrent, and chunkwise recurrent. Retention has a dual form, recurrent and parallel, so models can be trained in parallel while performing inference recurrently.
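For reference, the dual form can be written out as below, following the notation of the RetNet paper as we read it: X is the input sequence, W_Q, W_K, W_V are learned projections, γ is the decay rate, and Θ is an xPos-style rotation. Unrolling the recurrence gives o_n = Σ_{m≤n} γ^(n−m) Q_n K_m^T V_m, which is exactly row n of the parallel form, so both produce the same outputs.

```latex
\begin{aligned}
&\text{Parallel (training):} \\
&Q = (X W_Q) \odot \Theta, \quad K = (X W_K) \odot \overline{\Theta}, \quad V = X W_V, \quad \Theta_n = e^{i n \theta} \\
&D_{nm} = \begin{cases} \gamma^{\,n-m}, & n \ge m \\ 0, & n < m \end{cases}
\qquad \mathrm{Retention}(X) = \left(Q K^{\top} \odot D\right) V \\[4pt]
&\text{Recurrent (inference):} \\
&S_n = \gamma\, S_{n-1} + K_n^{\top} V_n, \qquad \mathrm{Retention}(X_n) = Q_n S_n
\end{aligned}
```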

Multi-Scale Retention (MSR) Module: The MSR module is the core of the RetNet architecture. Retention is derived from a sequence-modeling problem that maps inputs to outputs through recurrent states, so the model encodes sequence information recurrently. The projections are made content-aware, meaning the queries, keys, and values are computed from the input sequence itself. An exponential decay factor lets each state retain a discounted summary of earlier tokens, and the module is "multi-scale" because each head uses a different decay rate.
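The "multi-scale" idea can be made concrete by looking at the decay rates alone. The sketch below assumes the per-head schedule γ_h = 1 − 2^(−5−h) described in the RetNet paper; the helper names are hypothetical, and the "effective span" 1/(1−γ) is simply the sum of the weights γ^k over all past offsets, a rough gauge of how far back a head looks rather than a quantity the paper defines.

```python
# Hypothetical helpers illustrating per-head decay rates. The schedule
# gamma_h = 1 - 2**(-5 - h) is assumed from the RetNet paper; the rest is
# purely illustrative.


def head_decays(num_heads: int) -> list[float]:
    """One decay rate per retention head; later heads decay more slowly."""
    return [1.0 - 2.0 ** (-5 - h) for h in range(num_heads)]


def effective_span(gamma: float) -> float:
    """Sum of gamma**k over all offsets k >= 0, i.e. 1 / (1 - gamma)."""
    return 1.0 / (1.0 - gamma)


def weight_at_offset(gamma: float, k: int) -> float:
    """Decay weight a head applies to a token k positions back
    (this multiplies the query-key score q . k)."""
    return gamma ** k


if __name__ == "__main__":
    for h, g in enumerate(head_decays(4)):
        print(f"head {h}: gamma={g:.7f}, "
              f"effective span ~{effective_span(g):.0f} tokens, "
              f"weight 100 tokens back={weight_at_offset(g, 100):.3f}")
```

With four heads this schedule gives effective spans of 32, 64, 128, and 256 tokens, so some heads focus on recent context while others keep a longer memory.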

Feed-Forward Network (FFN) Module: The FFN module in RetNet works much like the one in the Transformer. It takes the output of the MSR module and applies two linear transformations with a non-linear activation in between to produce the block's output.
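For reference, here is our transcription of how the RetNet paper wires the two modules together: each head i applies retention with its own decay γ_i, the head outputs are normalized with GroupNorm and gated by a swish branch, and each layer l uses pre-LayerNorm (LN) residual connections. W_G, W_O, W_1, W_2 are learned weights; H is our own name for the normalized head outputs.

```latex
\begin{aligned}
\mathrm{head}_i &= \mathrm{Retention}(X, \gamma_i), \qquad
H = \mathrm{GroupNorm}_h\big(\mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\big) \\
\mathrm{MSR}(X) &= \big(\mathrm{swish}(X W_G) \odot H\big)\, W_O \\
\mathrm{FFN}(X) &= \mathrm{gelu}(X W_1)\, W_2 \\
Y^{l} &= \mathrm{MSR}\big(\mathrm{LN}(X^{l})\big) + X^{l} \\
X^{l+1} &= \mathrm{FFN}\big(\mathrm{LN}(Y^{l})\big) + Y^{l}
\end{aligned}
```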

The three computation paradigms of retention give RetNet training parallelism, low-cost inference, and efficient long-sequence modeling:

Parallel Retention: The parallel representation enables training parallelism: all positions in a sequence are processed at once, as in Transformer training, which speeds up the training process.

Recurrent Retention: The recurrent representation of retention is used during inference, where it naturally fits autoregressive decoding. Each decoding step costs O(1) with respect to sequence length, which reduces memory use and inference latency while producing the same outputs as the parallel form.

Chunkwise Retention: This representation enables efficient long-sequence modeling with linear complexity. The input sequence is split into chunks; tokens within each chunk are encoded in parallel, while information is passed from chunk to chunk recurrently.
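The three paradigms above can be checked against each other with a small sketch. It implements a single retention head in pure Python, assuming real-valued queries, keys, and values and omitting the xPos-style rotation, the GroupNorm, the swish gate, and any score normalization used in the paper; the function names are hypothetical, and the nested loops favor readability over speed (real implementations use batched matrix multiplies). All three functions should return the same outputs up to floating-point error.

```python
import random

Matrix = list[list[float]]


def parallel_retention(Q: Matrix, K: Matrix, V: Matrix, gamma: float) -> Matrix:
    """Training form: O = (Q K^T * D) V, with D[n][m] = gamma**(n - m) if m <= n else 0."""
    d_v = len(V[0])
    out = []
    for n in range(len(Q)):
        row = [0.0] * d_v
        for m in range(n + 1):  # causal: only the current and earlier tokens
            w = gamma ** (n - m) * sum(q * k for q, k in zip(Q[n], K[m]))
            for j in range(d_v):
                row[j] += w * V[m][j]
        out.append(row)
    return out


def recurrent_retention(Q: Matrix, K: Matrix, V: Matrix, gamma: float) -> Matrix:
    """Inference form: S_n = gamma * S_{n-1} + K_n^T V_n and o_n = Q_n S_n.
    The state S stays d_k x d_v no matter how many tokens have been processed."""
    d_k, d_v = len(K[0]), len(V[0])
    S = [[0.0] * d_v for _ in range(d_k)]
    out = []
    for q, k, v in zip(Q, K, V):
        for a in range(d_k):
            for j in range(d_v):
                S[a][j] = gamma * S[a][j] + k[a] * v[j]
        out.append([sum(q[a] * S[a][j] for a in range(d_k)) for j in range(d_v)])
    return out


def chunkwise_retention(Q: Matrix, K: Matrix, V: Matrix, gamma: float,
                        chunk_size: int) -> Matrix:
    """Long-sequence form: parallel inside each chunk, recurrent state R across chunks."""
    d_k, d_v = len(K[0]), len(V[0])
    R = [[0.0] * d_v for _ in range(d_k)]  # summary of all earlier chunks
    out = []
    for start in range(0, len(Q), chunk_size):
        q_c = Q[start:start + chunk_size]
        k_c = K[start:start + chunk_size]
        v_c = V[start:start + chunk_size]
        B = len(q_c)  # the final chunk may be shorter than chunk_size
        inner = parallel_retention(q_c, k_c, v_c, gamma)  # within-chunk part
        for i in range(B):
            # Cross-chunk part: read R, decayed by the distance i + 1 to the chunk boundary.
            cross = [sum(q_c[i][a] * R[a][j] for a in range(d_k)) for j in range(d_v)]
            out.append([inner[i][j] + gamma ** (i + 1) * cross[j] for j in range(d_v)])
        # Roll the state past this chunk: R <- gamma^B R + sum_i gamma^(B-1-i) k_i^T v_i.
        R = [[gamma ** B * R[a][j]
              + sum(gamma ** (B - 1 - i) * k_c[i][a] * v_c[i][j] for i in range(B))
              for j in range(d_v)] for a in range(d_k)]
    return out


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


def max_abs_diff(X: Matrix, Y: Matrix) -> float:
    return max(abs(x - y) for rx, ry in zip(X, Y) for x, y in zip(rx, ry))


if __name__ == "__main__":
    rng = random.Random(0)
    Q, K, V = random_matrix(7, 3, rng), random_matrix(7, 3, rng), random_matrix(7, 2, rng)
    reference = parallel_retention(Q, K, V, gamma=0.9)
    print("recurrent vs parallel:", max_abs_diff(reference, recurrent_retention(Q, K, V, 0.9)))
    for size in (1, 2, 3, 7):
        diff = max_abs_diff(reference, chunkwise_retention(Q, K, V, 0.9, size))
        print(f"chunkwise (chunk_size={size}) vs parallel:", diff)
```

The chunkwise version only ever materializes a chunk_size × chunk_size block of decayed scores plus the fixed-size state R, which is where its linear memory in sequence length comes from.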

The paper also presents experiments comparing RetNet with the Transformer. The results indicate that RetNet scales favorably while supporting parallel training, low-cost deployment, and efficient inference. The authors also note that RetNet tends to outperform the Transformer once model size exceeds 2 billion parameters.

Why does it matter?

The development of RetNet is significant because it addresses some of the key limitations of the widely used Transformer. By enabling training parallelism, low-cost inference, and efficient long-sequence modeling, RetNet could lead to more efficient and cost-effective large language models. In particular, its low inference cost makes RetNets well suited to low-latency applications on edge devices. The experimental results presented in the paper provide evidence of RetNet's advantages over the Transformer at the scales tested, making it a promising new architecture for large language models.

Author Q&A

Q: What is the story of how this project started? For instance, one could plausibly arrive at RetNets by seeking to improve Transformers, or by trying to bridge the gap between recurrent and transformer architectures.

The workstream of advancing foundation architectures has been going on for years in our group. After my colleagues and I developed self-attention [1], we have always had a dedicated effort to improve it. For example, we interpret the underlying mechanisms [2, 3, 4], stabilize the training of very deep Transformers [5], make it more general-purpose [6], and scale up the sequence length [7, 8]. It is not easy to beat Transformers, as we need to care about performance, scalability, training stability, efficiency, simplicity, etc. Last year we realized that Transformers sacrifice the inference benefits of recurrent models, even though the original motivation of the Transformer was to improve training parallelism over LSTMs. Then we started to bridge the gap between the two modeling paradigms and developed RetNet.

Q: Do you see any promising future directions for improving RetNets?

During the development of RetNet, we always kept in mind that “everything should be made as simple as possible, but not simpler.” The simplicity of the proposed retention makes it easier to use mathematical tools to further analyze the architecture design. We expect that the method could be improved with theoretical guarantees.

Q: Are there any interesting new lines of work that RetNets allow? For instance, the success of Vision Transformers allowed for lots of patch-based methods like Masked Autoencoders to succeed again for vision, whereas such methods would not work as naturally with convolutional networks.

RetNet is suitable for long-sequence modeling, because the chunkwise recurrent representation trains the models with linear memory complexity. It also enables new distributed sequence parallelism algorithms.

Besides large language models, video modeling and time-series modeling are good applications that can be powered by RetNet.

The simplicity of RetNet enables novel theoretical work, as mathematical analysis becomes easier. It also helps us better understand the underlying mechanisms of trained models (like [4]).

It is promising to deploy RetNet models on edge devices, such as mobile phones, thanks to these properties.

Q: LLMs often lack planning capabilities. What do you think would be the effect of replacing transformers with RetNets on such capabilities?

With the lower inference cost, we can efficiently deploy the RetNet model in the environment and learn to plan from the interactions and feedback at scale.

Q: As also mentioned in your paper, linear attention can’t encode positional information effectively. However, RetNet has clearly shown superior performance relative to softmax attention in Transformers. What do you think is the reason for that?

As shown in Equation (3) of the paper, the derivation of RetNet naturally takes care of position modeling, with a formulation similar to xPos [7]. The design is more theoretically grounded.
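The relative-position property behind this answer can be checked numerically. The sketch below combines an exponential decay γ^(n−m) with a complex rotation e^(inθ) on the query and e^(imθ) on the key, which is how we read the xPos-like form in the paper's derivation; the function name, vectors, and angles are made up for illustration, and this is a toy check rather than the paper's implementation.

```python
import cmath


def decayed_rotated_score(q: list[float], k: list[float], n: int, m: int,
                          thetas: list[float], gamma: float) -> complex:
    """Hypothetical helper computing
    gamma**(n - m) * sum_d (q_d e^{i n theta_d}) * conj(k_d e^{i m theta_d}).

    The query at position n and the key at position m are each rotated by an
    angle proportional to their position. For real q_d and k_d the product
    reduces to q_d * k_d * e^{i (n - m) theta_d}, so only the offset n - m
    matters."""
    rotated = sum(
        (qd * cmath.exp(1j * n * th)) * (kd * cmath.exp(1j * m * th)).conjugate()
        for qd, kd, th in zip(q, k, thetas)
    )
    return gamma ** (n - m) * rotated


if __name__ == "__main__":
    q, k = [0.3, -1.2, 0.8], [1.1, 0.4, -0.5]
    thetas = [1.0, 0.1, 0.01]  # arbitrary per-dimension angles for the demo
    near = decayed_rotated_score(q, k, n=5, m=2, thetas=thetas, gamma=0.9)
    far = decayed_rotated_score(q, k, n=105, m=102, thetas=thetas, gamma=0.9)
    print(abs(near - far))  # close to zero: same offset, same score
```

Because the score depends only on n − m, position information enters as a relative offset, and the decay γ^(n−m) additionally down-weights distant tokens.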

References for Q&A

[1] Long Short-Term Memory-Networks for Machine Reading

[2] Self-Attention Attribution: Interpreting Information Interactions Inside Transformer

[3] Knowledge Neurons in Pretrained Transformers

[4] Why Can GPT Learn In-Context? Language Models Secretly Perform Gradient Descent as Meta-Optimizers

[5] DeepNet: Scaling Transformers to 1,000 Layers

[6] Magneto: A Foundation Transformer

[7] A Length-Extrapolatable Transformer

[8] LongNet: Scaling Transformers to 1,000,000,000 Tokens

New from the Gradient

Peli Grietzer: A Mathematized Philosophy of Literature

Ryan Drapeau: Battling Fraud with ML at Stripe

Other Things That Caught Our Eyes

News

Bing Chat is now available in Google Chrome and Safari “Watch out Bard — Bing’s AI chatbot is now available in Google Chrome and Safari. As first spotted by Windows Latest (via 9to5Google), you can now access the chatbot from Bing.com in both browsers. There are some limitations to using Bing Chat on Chrome and Safari, though.”

OpenAI Quietly Shuts Down Its AI Detection Tool “In January, artificial intelligence powerhouse OpenAI announced a tool that could save the world—or at least preserve the sanity of professors and teachers—by detecting whether a piece of content had been created using generative AI tools like its own ChatGPT.”

NYC subway using AI to track fare evasion “Surveillance software that uses artificial intelligence to spot people evading fares has been quietly rolled out to some of New York City’s subway stations and is poised to be introduced to more by the end of the year, according to public documents and government contracts obtained by NBC News.”

The Workers Behind AI Rarely See Its Rewards. This Indian Startup Wants to Fix That “In the shade of a coconut palm, Chandrika tilts her smartphone screen to avoid the sun’s glare. It is early morning in Alahalli village in the southern Indian state of Karnataka, but the heat and humidity are rising fast.”

New AI Tool 'FraudGPT' Emerges, Tailored for Sophisticated Attacks “Following the footsteps of WormGPT, threat actors are advertising yet another cybercrime generative artificial intelligence (AI) tool dubbed FraudGPT on various dark web marketplaces and Telegram channels.”

SEC targets AI in new Wall Street internet reforms plan “The Securities and Exchange Commission announced Wednesday proposed new rules that SEC chair Gary Gensler says will address potential conflicts of interest in the use of artificial intelligence on Wall Street.”

Microsoft to supply AI tech to Japan government, Nikkei reports “Microsoft Corp (MSFT.O) will provide artificial intelligence technology to the Japanese government after enhancing the processing power of its data centres located within the country, the Nikkei newspaper reported on Thursday.”

AI companies form new safety body, while Congress plays catch up “The industry-led initiative is the latest sign of companies racing ahead of government efforts to craft rules for the development and deployment of AI”

Which U.S. Workers Are More Exposed to AI on Their Jobs? “Pew Research Center conducted this study to understand how American workers may be exposed to artificial intelligence (AI) at their jobs.”

AI researchers say they've found 'virtually unlimited' ways to bypass Bard and ChatGPT's safety rules “Researchers say they have found potentially unlimited ways to break the safety guardrails on major AI-powered chatbots from OpenAI, Google, and Anthropic. Large language models like the ones powering ChatGPT, Bard, and Anthropic's Claude are extensively moderated by tech companies.”

U.S. lawmakers urge Biden administration to tighten AI chip export rules “Two U.S. lawmakers who head a committee focused on China on Friday urged the Biden administration to tighten export restrictions on artificial intelligence chips in the wake of industry lobbying to leave the rules unchanged.”

Papers

Daniel: In the realm of steering language models, one recent technique has involved “editing” language models to update propositions encoded by a model—if a model has picked up an incorrect proposition from its training data, that proposition might be corrected. This paper makes the important observation that, since these facts do not live in isolation, a proper evaluation of editing methods should examine the “ripple effects” that correcting certain statements will have on a language model’s knowledge base: if a language model’s false “belief” that Jack Depp is the son of Bob Depp is corrected to the statement that Jack Depp is the son of Johnny Depp, then additional statements (e.g. about who Jack Depp’s siblings are) will also need to be updated. Importantly, the authors of this paper find that current editing methods fail to introduce such consistent changes in a model’s knowledge, but that a simple in-context editing baseline obtains the best scores on their benchmark.

Closing Thoughts

Have something to say about this edition’s topics? Shoot us an email at editor@thegradient.pub and we will consider sharing the most interesting thoughts from readers in the next newsletter! For feedback, you can also reach Daniel directly at dbashir@hmc.edu or on Twitter. If you enjoyed this newsletter, consider donating to The Gradient via a Substack subscription, which helps keep this grad-student / volunteer-run project afloat. Thanks for reading the latest Update from the Gradient!