Update #52: The Ironies in Pausing AI and Finetuning LLMs without Backpropagation

We consider responses to recent letters calling for pauses on AI experiments and a memory-efficient optimization method that fine-tunes LLMs without using gradients.

Welcome to the 52nd update from the Gradient! If you’re new and like what you see, subscribe and follow us on Twitter :) You’ll need to view this post on Substack to see the full newsletter!

Want to write with us? Send a pitch using this form.

News Highlight: We’re all trying to figure out who can pause AI

Summary

In recent weeks, the AI community has been polarized by two community letters, one purporting to “pause giant AI experiments,” the other warning of far-off “existential threats” sown from the seeds of current generative AI models. While the letters contain some kernels of truth regarding the harms of large generative AI experiments and their latent potential to transform vast swaths of society, there is a rich irony in the fact that many of the signatories are exactly the people best positioned to stop or change the pace of development. We at The Gradient are not alone in recognizing this irony. Meredith Whittaker, the president of Signal and co-founder of NYU’s AI Now Institute, recently sat down for an interview with the Guardian. Throughout the interview, she critically engages with the arguments of both the AI hypers and the doomers; recognizes the irony that those calling for AI pauses are (a) the ones positioned to enact them and (b) the ones least likely to actually pause; and distills many of the issues into one of power relationships between the technology companies developing commercial AI products and those set to be impacted by them.

Background

Over the past year, the proliferation of generative AI tools into the public consciousness has led to a spectacle of AI hype. In her interview, Whittaker describes how executives are “painting these systems as superhuman, as super capable, which leads to a sense in the public, and even among some politicians, that tech can do anything.” However, Meredith is also very quick to dismantle that false perception. She correctly points out that “these algorithms are trained on data that reflects not the world, but the internet – which is worse, arguably. That is going to encode the historical and present-day histories of marginalisation, inequality etc.”

This hype has not been entirely one-sided. There is a growing reactionary movement of AI “doomers” warning against the “existential” threats posed by AI. These doomers have been quite vocal and varied in their warnings, ranging from Geoffrey Hinton’s perception of existential danger, to those suggesting pauses in AI experiments, to misguided calls to bomb data centers (we are opting not to link because we do not elevate or platform reactionary calls to violence).

In her interview with the Guardian, Meredith recognizes the bad-faith nature of the doomers as astutely as she did that of the hypers: “These are some of the most powerful people when it comes to having the levers to actually change this, so it’s a bit like the president issuing a statement saying somebody needs to issue an executive order. It’s disingenuous.” We can see this manifest almost in real time with Palantir, a data-mining firm whose technology is “on occasion” used to kill people. This context is particularly relevant given that Palantir’s CEO Alex Karp is “reject[ing] calls to pause the development of artificial intelligence (AI), stating that it is only those with ‘no products’ who want a pause.” With billions of dollars in government data and AI contracts, it is no wonder Palantir is not interested in a pause.

Why does it matter?

Underneath all of the hype and doom of AI, there lies a conflict. On one hand, we are seeing glimpses of the potential to transform vast swaths of our society and deliver advances in science and engineering that were unimaginable a decade ago. On the other hand, much of the data needed to build generative AI products comes from our incredibly biased world.

In order to be truly revolutionary, this technology and its course of development need to change significantly. We can consider the harms beginning with the automated ingestion and surveillance of all publicly available data, including the copyrighted and commercial products produced by writers and artists around the world. Next, we find a second layer of exploitation, generally of the workers who label the data and are often exposed to horrifically graphic violent and sexually explicit images while being paid less than $2 per hour. The final set of harms is seen and felt today by all marginalized communities that interact with AI products that can’t tell Black people apart from apes, content moderation tools that disproportionately flag Islamic religious content as harmful, or tools that misgender transgender people.

In her interview, Meredith is astute not only in recognizing the disingenuous nature of a particular vision of AI harm but also in calling attention to the work required to resolve the harms. “If you were to heed Timnit’s warnings you would have to significantly change the business and the structure of these companies. If you heed Geoff’s warnings, you sit around a table at Davos and feel scared.”

Research Highlight: Low-Memory Finetuning of Language Models without Backpropagation

Summary

Researchers from Princeton University have developed and analyzed a memory-efficient optimization algorithm for finetuning neural networks, which can be applied to large language models. Their algorithm is a zeroth order algorithm, meaning it does not need gradients (and hence does not need backpropagation). Classical wisdom and theory suggest that zeroth order algorithms would work quite poorly in the high-parameter regime of modern LLMs, but the authors show that under certain assumptions, zeroth order algorithms can have a reasonable theoretical convergence rate.
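
For readers who have not seen a zeroth order method before, it may help to write down the standard two-point estimator that this family of algorithms is built on. The notation here is our own shorthand rather than something quoted from the paper: L is the loss on a minibatch, θ the d parameters, z a random Gaussian direction, ε a small perturbation scale, and η the learning rate.

```latex
\hat{g}(\theta) \;=\; \frac{L(\theta + \epsilon z) - L(\theta - \epsilon z)}{2\epsilon}\, z,
\qquad z \sim \mathcal{N}(0, I_d),
\qquad
\theta_{t+1} \;=\; \theta_t - \eta\, \hat{g}(\theta_t)
```

Both loss values come from ordinary forward passes, so no gradient is ever backpropagated. The scalar in front of z is a finite-difference estimate of the directional derivative of L along z.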

Overview

As pretrained models like large language models have become increasingly powerful and available for use, many people have been applying them to their own desired use-cases. While in-context learning and prompting can take one pretty far, finetuning an LLM’s parameters can generally achieve much better results. However, finetuning an LLM is expensive, especially in terms of GPU memory requirements. Finetuning uses several times more memory than inference, and LLM inference currently requires a lot of memory.

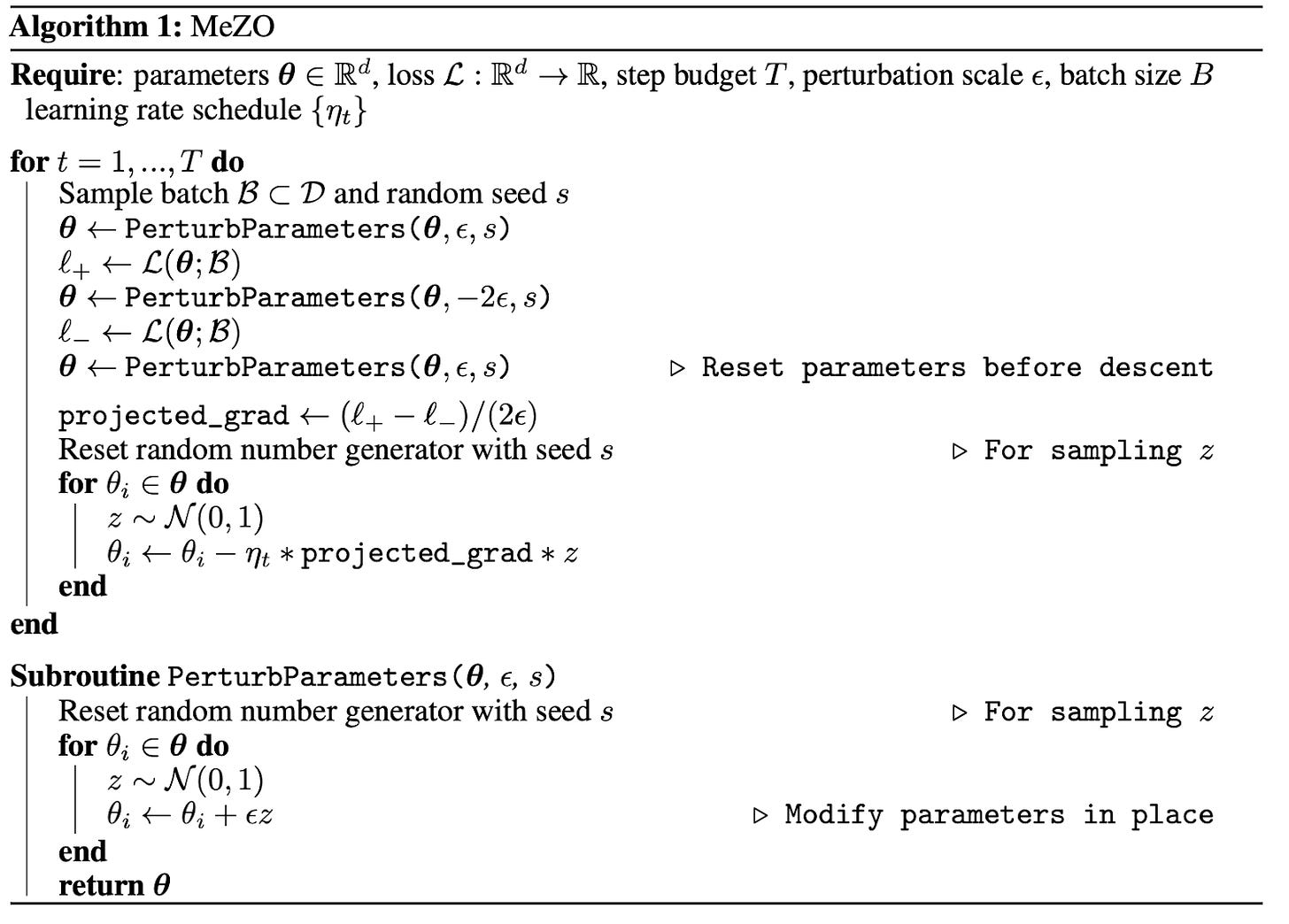

The recent paper “Fine-Tuning Language Models with Just Forward Passes” tackles this problem by developing a zeroth order optimization algorithm for finetuning LLMs. The authors start with the classical zeroth order stochastic gradient descent (ZO-SGD) algorithm, which when applied to neural networks only uses forward passes and does not require backpropagation. They note that the naive implementation would still require 2x the memory cost of inference. Hence, they design the Memory-efficient ZO-SGD (MeZO) algorithm for computing the ZO-SGD updates using only a small amount of space. Their algorithm is based on the clever use of in-place operations, passing around random seeds, and rewriting certain arithmetic operations so that they can be done in-place.

As written in the paper, each MeZO update iterates sequentially through every single parameter, one at a time, which would be very slow. Using somewhat more memory makes this faster. For instance, the authors suggest iterating over parameter matrices instead and updating each whole matrix at once. This requires additional memory equal to the size of the largest parameter matrix, which comes out to a reasonable 0.86GB for the 66 billion parameter OPT model.
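
The seed trick and the per-matrix update described above can be sketched in a few lines of NumPy. This is a toy illustration under our own assumptions, not the authors' implementation: the function names (`perturb_in_place`, `mezo_step`, `toy_loss`), the dictionary-of-arrays parameter layout, and the quadratic loss are all hypothetical stand-ins for a real model and its forward pass.

```python
import numpy as np


def perturb_in_place(params, seed, scale):
    """Add scale * z to every tensor in place, where z ~ N(0, I).

    z is regenerated from `seed` on every call, so it never has to be
    stored alongside the parameters.
    """
    rng = np.random.default_rng(seed)
    for tensor in params.values():  # one whole tensor at a time
        z = rng.standard_normal(tensor.shape)
        tensor += scale * z  # in-place update; z is dropped right after


def mezo_step(params, loss_fn, lr, eps, seed):
    """One zeroth order step using two forward passes and no backprop."""
    perturb_in_place(params, seed, +eps)  # theta + eps * z
    loss_plus = loss_fn(params)
    perturb_in_place(params, seed, -2.0 * eps)  # theta - eps * z
    loss_minus = loss_fn(params)
    perturb_in_place(params, seed, +eps)  # back to theta
    projected_grad = (loss_plus - loss_minus) / (2.0 * eps)
    # Same seed again, so the update moves along the same direction z.
    perturb_in_place(params, seed, -lr * projected_grad)
    return projected_grad


def toy_loss(params):
    """Hypothetical stand-in for a forward pass: 0.5 * ||theta||^2."""
    return 0.5 * sum(float(np.sum(t ** 2)) for t in params.values())


params = {"w": np.ones((4, 3)), "b": np.ones(3)}
initial_loss = toy_loss(params)
seed_source = np.random.default_rng(0)
for _ in range(200):
    step_seed = int(seed_source.integers(2**31))  # fresh direction each step
    mezo_step(params, toy_loss, lr=0.05, eps=1e-3, seed=step_seed)
final_loss = toy_loss(params)
print(f"loss before: {initial_loss:.4f}, after: {final_loss:.6f}")
```

The only temporary buffers are `z` and `scale * z` for the tensor currently being updated, which mirrors the point that the extra memory is governed by the largest parameter matrix rather than by the whole model.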

The authors show the effectiveness of MeZO in several experiments. MeZO-trained models outperform zero-shot, in-context, and linear probing baselines across many tasks for autoregressive language models. Further, MeZO can do this with much less memory (and thus, in general, fewer GPUs). For instance, the authors find that MeZO requires very similar memory to inference, whereas standard finetuning can take up to 11x more memory than MeZO; thus, finetuning requires 8 A100 GPUs for training OPT 30B, whereas MeZO requires just 1 A100 GPU.

Why does it matter?

MeZO allows a 30B parameter OPT model to be finetuned on a single A100 with 80GB memory. It is predicted to be able to train the 175B model on 8 A100s.
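
A quick back-of-the-envelope check makes these memory figures easier to believe. The sketch below counts only half-precision weights and ignores activations and any other buffers, so it is a lower bound rather than a measurement. The OPT-66B shapes (vocabulary size 50272, hidden size 9216) are our assumption about the public model configuration and are not stated in this newsletter, as is the guess that the token-embedding matrix is the largest single matrix.

```python
GIB = 2**30
BYTES_PER_FP16 = 2


def fp16_gib(num_values):
    """Memory in GiB needed to hold `num_values` half-precision numbers."""
    return num_values * BYTES_PER_FP16 / GIB


# Nominal parameter counts implied by the model names.
opt_30b_weights = fp16_gib(30e9)
opt_175b_weights = fp16_gib(175e9)

# Assumed OPT-66B shapes (not given in the newsletter):
# vocabulary size 50272, hidden size 9216.
largest_matrix = fp16_gib(50272 * 9216)

A100_GIB = 80  # treating the "80GB" card as 80 GiB for simplicity

print(f"OPT-30B weights:  {opt_30b_weights:.1f} GiB vs {A100_GIB} GiB on one A100")
print(f"OPT-175B weights: {opt_175b_weights:.1f} GiB vs {8 * A100_GIB} GiB on eight A100s")
print(f"Largest assumed OPT-66B matrix: {largest_matrix:.2f} GiB")
```

Under these assumptions the weights of both models fit in the stated hardware with room to spare, and the largest matrix comes to roughly 0.86 GiB, which is at least consistent with the per-matrix buffer size quoted earlier.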

Improving the efficiency of working with large pretrained models is important for the democratization of these powerful tools. For finetuning specifically, parameter-efficient finetuning (PEFT) methods such as LoRA and prefix tuning have been widely adopted to efficiently adapt pretrained models. MeZO allows for finetuning all weights, which may lead to more powerful models in some settings. Moreover, MeZO can be combined with LoRA and prefix tuning, which lowers their memory requirements even further.

This work theoretically and empirically shows the promise of zeroth order optimization in certain modern machine learning setups. Future work can explore this further. For instance, zeroth order optimizers can be used for optimizing non-differentiable objectives, as they do not require computing gradients; the current work provides initial experiments showing that MeZO is capable of this in particular settings.

Editor Comments

Daniel: I’m just gonna waste a little space here to say it’s funny that we’re covering a no-gradient method in The Gradient

New from the Gradient

Joon Park: Generative Agents and Human-Computer Interaction

Christoffer Holmgård: AI for Video Games

Other Things That Caught Our Eyes

News

Stack Overflow Moderators Are Striking to Stop Garbage AI content From Flooding the Site “Volunteer moderators of the forum are striking over a policy that says AI-generated content can practically not be moderated.”

Big banks are talking up generative A.I. — but the risks mean they're not diving in headfirst “Major banks and fintech companies claim to be piling into generative artificial intelligence as the hype surrounding the buzzy technology shows no signs of fizzling out — but there are lingering fears about potential pitfalls and risks.”

AI-powered church service in Germany draws a large crowd “On Friday, over 300 people attended an experimental ChatGPT-powered church service at St. Paul’s church in the Bavarian town of Fürth, Germany, reports the Associated Press.”

Europeans Take a Major Step Toward Regulating A.I. “The European Union took an important step on Wednesday toward passing what would be one of the first major laws to regulate artificial intelligence, a potential model for policymakers around the world as they grapple with how to put guardrails on the rapidly developing technology.”

Google challenges OpenAI's calls for government A.I. czar “Google and OpenAI, two U.S. leaders in artificial intelligence, have opposing ideas about how the technology should be regulated by the government, a new filing reveals.”

Meta open sources an AI-powered music generator “Not to be outdone by Google, Meta has released its own AI-powered music generator — and, unlike Google, made it available in open source.”

Google, one of AI’s biggest backers, warns own staff about chatbots “Alphabet Inc (GOOGL.O) is cautioning employees about how they use chatbots, including its own Bard, at the same time as it markets the program around the world, four people familiar with the matter told Reuters.”

How the media is covering ChatGPT “With advancements in AI tools being rolled out at breakneck pace, journalists face the task of reporting developments with the appropriate nuance and context—to audiences who may be encountering this kind of technology for the first time. But sometimes this coverage has been alarmist.”

Papers

Daniel: I spoke with Scott Aaronson some time ago about his work with OpenAI on watermarking LLM-generated text. Methods like watermarking are valuable because they give us a way to identify GPT-generated text. Aaronson’s method involves generating the next token pseudorandomly (instead of randomly). In that vein, it’s interesting to see this study looking at the reliability of watermarks for LLMs. The common criticism is that watermarks could be removed from LLM outputs by AI paraphrasing or human editing. The study’s authors had a Llama model generate watermarked text and asked a non-watermarked GPT-3.5 to rewrite that text (doing lots of prompt engineering to try to get rid of the watermark), then checked whether they could detect the watermark in the rewritten text. After AI paraphrasing, the researchers could still detect the watermark, but needed more text to do it. The team found that grad students did better than GPT-3.5 at scrubbing watermarks, but the researchers could still detect the watermark in the edited text (detection just required observing more tokens). The work doesn’t claim that removing a watermark is impossible (one could do so if they were meticulous enough or had an LLM with a specialized decoder), but removal appears to be very difficult. This seems like good news!

Derek: I like the new work “DreamSim: Learning New Dimensions of Human Visual Similarity using Synthetic Data.” The authors study methods of measuring similarity between images. They make a dataset of Stable Diffusion generated variations of images, along with human similarity judgements between triplets of such images. Using a model consisting of an ensemble of pretrained image models, they train a new similarity metric via LoRA finetuning on their new dataset. They thoroughly study the differences between image similarity metrics (including their own), and find that learned similarity metrics can be made to better agree with human judgements by finetuning on their dataset. Improving models using synthetic data has always been a dream, and it seems like modern generative models are quite capable of generating data for this purpose.

Closing Thoughts

Have something to say about this edition’s topics? Shoot us an email at editor@thegradient.pub and we will consider sharing the most interesting thoughts from readers in the next newsletter! For feedback, you can also reach Daniel directly at dbashir@hmc.edu or on Twitter. If you enjoyed this newsletter, consider donating to The Gradient via a Substack subscription, which helps keep this grad-student / volunteer-run project afloat. Thanks for reading the latest Update from the Gradient!

Thanks for The Gradient. Such a valuable resource! I tend to focus on the point of agreement between the doomers and hype beasts, which is faith that there will be quick, continuous growth to AGI from current models, pretending that big data LLMs are something that is close. It seems clear to me there needs to be at least one more breakthrough like the optimization one in ~2012. Given so much of the cutting edge is led by big companies (and the CCP!) with big budgets for hardware, IMO that adds a whole other set of risks and potential outcomes. Thoughts?

This is the Prisoner's Dilemma writ large. You have inspired me to write more about this! Thank you.