Welcome to the 50th update from the Gradient! If you’re new and like what you see, subscribe and follow us on Twitter :) You’ll need to view this post on Substack to see the full newsletter!

Want to write with us? Send a pitch using this form.

News Highlight: U.S. Sanctions driving Chinese firms to advance AI without latest chips

Summary

The Wall Street Journal reports that, in response to recent sanctions and export controls on U.S.-manufactured computing chips, Chinese researchers and engineers are studying techniques that achieve state-of-the-art AI performance with fewer and less powerful chips. Their recent investments in training Large Language Models (LLMs) without Nvidia chips are beginning to show promising early signs. The researchers speculate that “if it works well, they can effectively circumvent the sanctions”.

Background

In October 2022, the Biden-Harris administration announced export restrictions on advanced semiconductors and chip manufacturing equipment, requiring U.S. manufacturers to obtain a license from the Commerce Department to export chips used in “advanced artificial intelligence calculations and supercomputing”. This has cut Chinese companies off from NVIDIA’s A100 chips (and the next-generation H100), the most powerful chips available and the ones most widely used to train large language models.

Without broad access to these chips, Chinese scientists have been innovating at both the hardware and software levels to achieve state-of-the-art performance on a few Chinese-language tasks, including reading comprehension and grammar challenges. One example of these successes is firms combining “three or four less-advanced chips…to simulate the performance of one of Nvidia’s most powerful processors, according to Yang You, a professor at the National University of Singapore”. The Wall Street Journal goes on to claim that “Chinese companies have been more aggressive in combining multiple software techniques together” to reduce the computational intensity of model training; unfortunately, the article does not name any of those techniques. Readers would benefit from understanding exactly how Chinese companies are being more “aggressive” than their Western counterparts, which are currently gobbling up swaths of the internet and other personal and copyrighted content in their own pursuits of AI.

Why does it matter?

Like many Chinese research scientists, the Gradient also lacks giant supercomputing clusters of NVIDIA GPUs with which to train foundation models and explore different development ideas. The work being done by Chinese scientists and engineers to overcome the compute hurdles introduced by U.S. sanctions has the potential to benefit everyone across the industry. While large U.S. firms have no difficulty building supercomputers with thousands of GPUs, that amount of compute is far out of reach for an individual researcher or curious student. As these techniques and ideas get published and/or open-sourced, there is a great opportunity for people all around the world to apply the lessons to their own work.

While these innovations could benefit many across the AI community, there are also great risks in using sanctions to achieve ambiguous foreign policy goals. It’s probable that “if advanced successfully, the research could allow Chinese tech firms to both weather American sanctions and make them more resilient to future restrictions.” And while there is little evidence that sanctions have ever been effective at achieving their goals, either historically or recently, they remain an attractive “tool of first resort” for U.S. presidential administrations, partly because they can be deployed without congressional approval or oversight. If these sanctions fail to achieve their desired policy outcomes, the more reckless and risky alternatives being floated, such as bombing microchip factories, become all the more likely. Rather than feeding into another global “us versus them” narrative with world-ending stakes, the U.S. should pursue AI policies that encourage cooperation, partnerships, openness, and joint research ventures between the Chinese AI community and its U.S. and international counterparts.

Editor Comments

Justin: More than four decades of sanctions against Iran couldn’t stop U.S.-manufactured chips from finding their way into “Iranian Kamikaze Drones” being used in Ukraine, so I am highly skeptical that the recent Chinese chip sanctions and export controls will achieve their desired effects. I do worry about what comes next once the government realizes that sanctions were ineffective at achieving their vague aim of “confronting” China.

Hugh: I’m more optimistic (if that’s the right word) than Justin here. Unlike drones which require relatively small numbers of chips, LLMs require a large number of very expensive GPUs to train. It’s much trickier to hide a multi-million dollar purchase, though still not impossible.

Daniel: A +1 to Hugh’s comment. Broadly speaking, for China, distributed training on trailing-edge chips is going to be the game for now. Andrew Feldman pointed out to me that it took decades to build the capacity to produce advanced semiconductors in the U.S.; while we have made things more difficult for China, I would expect their timeline for establishing such capabilities to be shorter. Another thought, though: if China is able to play catch-up with trailing-edge chips, and their techniques (a) are found out and (b) scale in the appropriate ways, then (as noted above) that may allow countries like the U.S., which face no constraints on their semiconductor use, to pull ahead even further.
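To make “distributed training on trailing-edge chips” a little more concrete, here is a toy sketch of data parallelism, one generic way to spread training across several weaker devices. It is not a description of what any Chinese firm actually does (the WSJ article names no specific techniques). Each simulated “device” computes a gradient on its shard of the batch, and averaging those gradients (the all-reduce step) recovers the full-batch gradient. The function names and the tiny linear model are hypothetical illustrations.

```python
# Toy sketch of data-parallel training for a 1-D linear model y = w * x.
# Hypothetical illustration: each "device" is simulated by a plain function call;
# real systems run these computations on separate chips and all-reduce the gradients.

def mse_grad(w, xs, ys):
    """Gradient of the mean squared error (1/n) * sum((w*x - y)**2) with respect to w."""
    n = len(xs)
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n


def shard(data, num_devices):
    """Split a list into num_devices equal, contiguous shards."""
    if len(data) % num_devices != 0:
        raise ValueError("this sketch assumes the batch divides evenly across devices")
    size = len(data) // num_devices
    return [data[i * size:(i + 1) * size] for i in range(num_devices)]


def data_parallel_grad(w, xs, ys, num_devices):
    """Each device computes a local gradient on its shard; averaging them (the all-reduce
    step) gives the same result as the full-batch gradient when shards are equal-sized."""
    local_grads = [
        mse_grad(w, xb, yb) for xb, yb in zip(shard(xs, num_devices), shard(ys, num_devices))
    ]
    return sum(local_grads) / num_devices


def train(xs, ys, num_devices, lr=0.01, steps=200):
    """Plain gradient descent using the data-parallel gradient."""
    w = 0.0
    for _ in range(steps):
        w -= lr * data_parallel_grad(w, xs, ys, num_devices)
    return w


xs = [float(i) for i in range(1, 9)]
ys = [3.0 * x for x in xs]  # ground truth: w = 3
print(train(xs, ys, num_devices=4))  # converges to approximately 3.0
```

In a real cluster the averaging step is a network all-reduce between chips, and its communication cost is one reason several slower chips rarely match one fast chip exactly.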

Research Highlight: Multimodal Models, where are we now?

Summary

The last few years have seen significant development in multimodal models, which can process more than one type of input data. This week, we cover several recent multimodal models, including ImageBind by Meta AI. We also review several aspects shared by many of the most successful multimodal models: CLIP-like frameworks for learning multimodal embeddings, Transformer encoders and decoders, and ways to combine different modalities within Transformers.

Overview

The release of GPT-3, now 3 years ago, opened more than one floodgate. Further work on scaling and language modeling quickly followed, but so too did ideas like CLIP, DALL-E, and a host of other multimodal schemes.

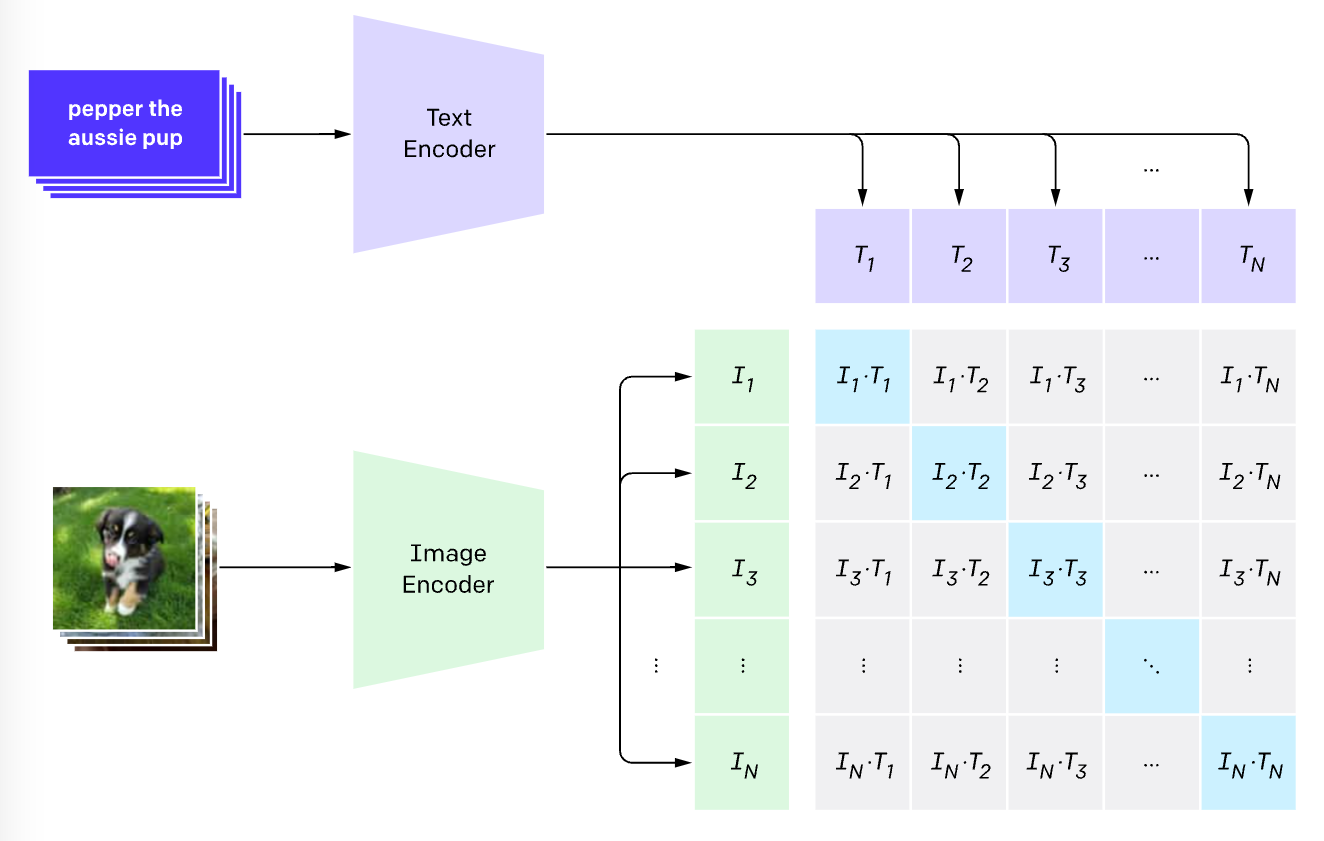

Recently, Meta AI released ImageBind, a model that embeds six modalities into the same joint embedding space: text, images, audio, depth images, thermal images, and Inertial Measurement Unit (IMU) readings. The model enables several capabilities, such as emergent zero-shot classification of audio using text prompts (e.g. asking what an audio segment is about), even though the model was never trained on (text, audio) pairs. This is possible because ImageBind binds every modality to images: its image and text encoders are initialized from a pretrained CLIP-style model (OpenCLIP), and during training a CLIP-like contrastive approach aligns the encoder for each remaining modality with the image encoder using (image, modality) pairs, such as (image, audio) pairs.
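The contrastive objective behind CLIP and ImageBind can be sketched in a few lines. This is a minimal, dependency-free illustration assuming the encoders have already produced one embedding per input; real implementations operate on large batches of tensors with a learnable temperature. Each (image, other-modality) pair in the batch is a positive, every other pairing is a negative, and the loss averages the cross-entropy in both directions. The temperature of 0.07 is illustrative (it is the initial value CLIP uses), and the toy vectors are hypothetical.

```python
import math


def normalize(v):
    """Scale a vector to unit L2 norm so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def log_softmax(row):
    """Numerically stable log-softmax of a list of logits."""
    m = max(row)
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - log_sum_exp for x in row]


def clip_style_loss(image_embs, other_embs, temperature=0.07):
    """Symmetric contrastive (InfoNCE) loss over N paired embeddings.

    image_embs[i] and other_embs[i] form the positive pair; every j != i is a negative.
    """
    imgs = [normalize(v) for v in image_embs]
    others = [normalize(v) for v in other_embs]
    n = len(imgs)
    # logits[i][j]: cosine similarity between image i and other-modality item j, over temperature
    logits = [
        [sum(a * b for a, b in zip(imgs[i], others[j])) / temperature for j in range(n)]
        for i in range(n)
    ]
    # Image -> other: row i should put its probability mass on column i
    loss_img_to_other = -sum(log_softmax(logits[i])[i] for i in range(n)) / n
    # Other -> image: column j should put its probability mass on row j
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    loss_other_to_img = -sum(log_softmax(columns[j])[j] for j in range(n)) / n
    return (loss_img_to_other + loss_other_to_img) / 2


images = [[1.0, 0.0], [0.0, 1.0]]
matched_audio = [[1.0, 0.1], [0.1, 1.0]]     # each clip resembles its own image
mismatched_audio = [[0.1, 1.0], [1.0, 0.1]]  # pairs swapped
print(clip_style_loss(images, matched_audio) < clip_style_loss(images, mismatched_audio))  # True
```

Because audio and text are each pulled toward the same image embeddings, they end up near each other without ever being paired directly, which is the intuition behind ImageBind’s emergent zero-shot behavior.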

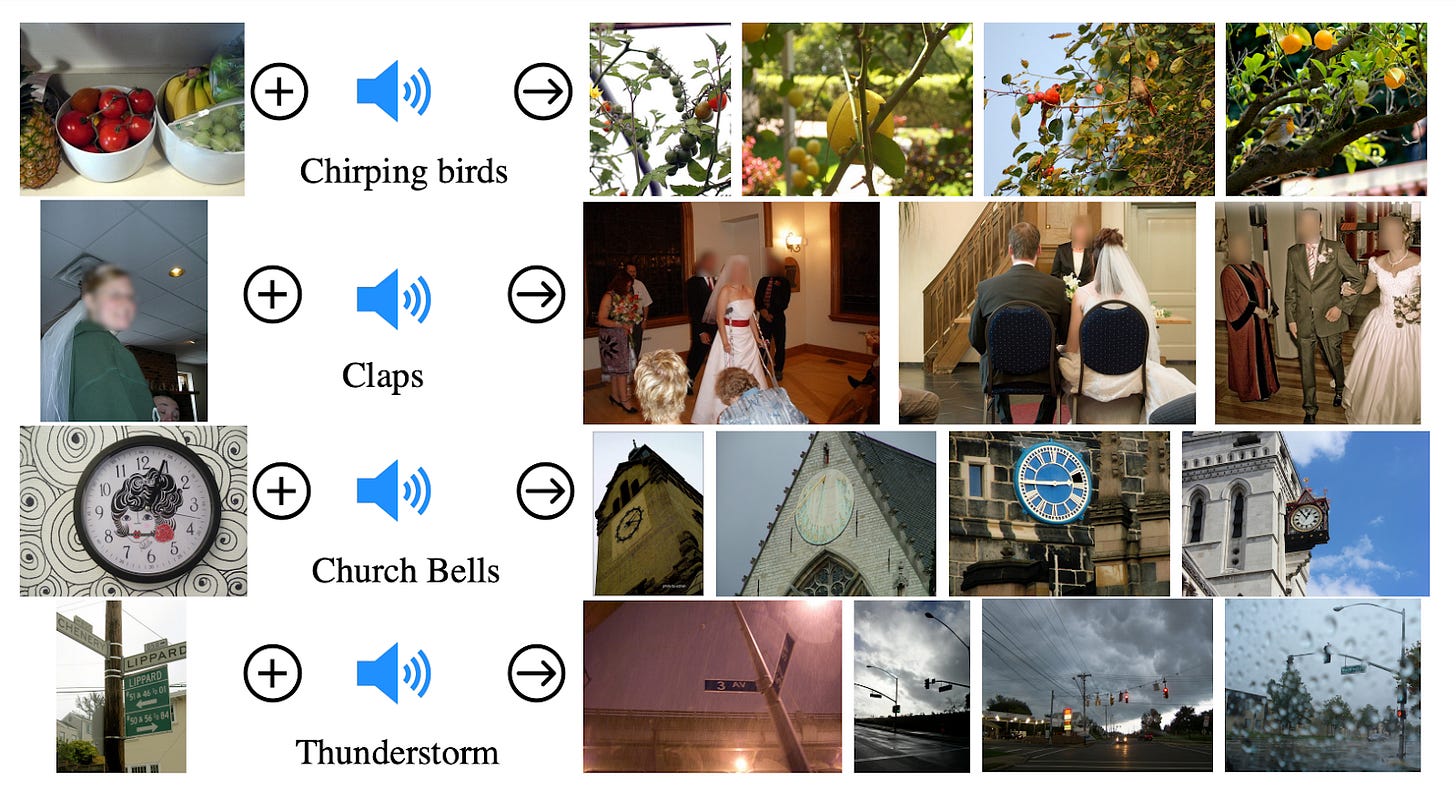

Even though ImageBind needs six encoders — one for each modality — its architecture section is hilariously simple: “We use a Transformer architecture for all the modality encoders.” Empirically, ImageBind performs well on various tasks involving the different modalities, such as few-shot classification of audio and depth images, object detection with audio queries, and embedding space arithmetic whereby e.g. adding the audio embedding for “church bells” to the image embedding of fruits will give an embedding that is similar to the image embeddings of birds surrounded by fruits.
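Once everything lives in one embedding space, zero-shot classification and embedding arithmetic reduce to cosine-similarity lookups. The sketch below uses hand-picked 3-D toy vectors (hypothetical, not real ImageBind embeddings, which are much higher-dimensional) and assumes that “arithmetic” means summing L2-normalized embeddings; the labels and gallery items are made up for illustration.

```python
import math


def normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine(a, b):
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))


def zero_shot_classify(query_emb, label_embs):
    """Pick the text label whose embedding is most similar to the query (e.g. an audio clip)."""
    return max(label_embs, key=lambda label: cosine(query_emb, label_embs[label]))


def add_embeddings(a, b):
    """Embedding-space arithmetic, assumed here to be the sum of normalized embeddings."""
    return [x + y for x, y in zip(normalize(a), normalize(b))]


def retrieve(query_emb, gallery):
    """Return the gallery item (e.g. an image) closest to the query embedding."""
    return max(gallery, key=lambda name: cosine(query_emb, gallery[name]))


# Toy axes: [fruit-ness, bird-ness, dog-ness]; all vectors are hypothetical
text_labels = {"birdsong": [0.0, 1.0, 0.0], "barking": [0.0, 0.0, 1.0]}
bird_audio = [0.1, 0.9, 0.1]
print(zero_shot_classify(bird_audio, text_labels))  # birdsong

fruit_image = [1.0, 0.0, 0.0]
gallery = {
    "fruit bowl": [1.0, 0.0, 0.0],
    "bird on a branch": [0.0, 1.0, 0.0],
    "birds among fruit": [0.7, 0.7, 0.0],
    "dog": [0.0, 0.0, 1.0],
}
print(retrieve(add_embeddings(fruit_image, bird_audio), gallery))  # birds among fruit
```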

ImageBind exemplifies two themes of successful multimodal models from the last two years. First, contrastively trained models like CLIP work very well for multimodal embeddings. For instance, ImageBind, ALIGN, ProteinCLAP, cpt-code, VideoCLIP, and AudioCLIP all succeed by training with a CLIP-like contrastive loss for joint embeddings on paired multimodal data. Several non-contrastive methods for improving over CLIP have been proposed in the last two years, but recent work shows that CLIP still performs favorably when tuned with techniques to improve training. Moreover, there is still room to improve on the basic CLIP framework, so CLIP-like models could take us even further in the future.

A second theme of recent multimodal models epitomized by ImageBind is the widespread use of Transformer architectures for essentially every modality: text, images, audio, video, proteins, robotics, Atari games, and several others. Some works use convolutional vision encoders (Flamingo uses an NFNet, and the original CLIP paper trained ResNets alongside its Vision Transformers), though Vision Transformers are widely used as well (e.g. in the original CLIP paper, OpenCLIP, OpenFlamingo, BLIP, and BLIP-2). Transformers are useful not only because of their single-modality performance, but also because Transformers for different modalities can interact with each other more easily, e.g. by encoding each modality as tokens or by using cross-attention between modalities.
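The two interaction patterns mentioned above, sharing one token sequence and cross-attention, are easy to sketch. Below, text tokens act as queries that attend over image-patch tokens (keys and values). This is a simplified single-head version that assumes identity query/key/value projections; real models learn those projections and use many heads. The token values are toy numbers.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Single-head scaled dot-product cross-attention.

    queries: tokens from one modality (e.g. text), a list of d-dimensional vectors
    keys, values: tokens from another modality (e.g. image patches)
    Learned Q/K/V projections are omitted (treated as identity) to keep the sketch short.
    """
    d = len(queries[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # how much this text token attends to each image token
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs


def shared_sequence(*modalities):
    """The alternative pattern: concatenate every modality's tokens into one sequence
    that a single Transformer processes with ordinary self-attention."""
    return [token for tokens in modalities for token in tokens]


text_tokens = [[1.0, 0.0]]
image_tokens = [[10.0, 0.0], [0.0, 10.0]]
print(cross_attention(text_tokens, image_tokens, image_tokens))  # mostly the first image token
print(len(shared_sequence(text_tokens, image_tokens)))  # 3
```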

A third theme of recent multimodal models is autoregressive generation with Transformers conditioned on embeddings from multiple modalities. For instance, Gato uses this approach to generate many types of outputs (text as well as actions, such as Atari button presses and robot arm commands) with a single Transformer model. Flamingo and other vision-language models like BLIP generate text with a Transformer decoder modified to condition on visual input. It is not publicly known how GPT-4 incorporates vision into its generative model of text, but open-source efforts to reproduce some of GPT-4’s capabilities offer one recipe: MiniGPT-4, for example, feeds visual inputs to a vision encoder (a pretrained ViT and Q-Former), whose outputs are then passed through a linear layer into an LLM (in this case, Vicuna).
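The MiniGPT-4 recipe of projecting visual features into an LLM’s input space can be sketched as a linear layer followed by concatenation. In this toy version, the dimensions and weights are hypothetical, and the frozen vision encoder and LLM are stood in for by plain lists of vectors. In MiniGPT-4 itself, the projection layer is the part that gets trained, while the vision encoder and Vicuna stay frozen.

```python
def linear(x, weight, bias):
    """y = W x + b for a single vector; weight is a list of d_out rows, each of length d_in."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def build_llm_inputs(visual_tokens, text_token_embs, weight, bias):
    """Project each visual token (e.g. a Q-Former output) into the LLM's embedding space,
    then place the projected tokens before the text embeddings as one input sequence."""
    projected = [linear(v, weight, bias) for v in visual_tokens]
    return projected + text_token_embs


# Hypothetical sizes: visual features have 3 dims, the toy LLM's embeddings have 2
visual_tokens = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
weight = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]
bias = [0.0, 0.5]
text_token_embs = [[9.0, 9.0]]  # stand-in for embedded prompt tokens
print(build_llm_inputs(visual_tokens, text_token_embs, weight, bias))
# [[1.0, 0.5], [0.0, 1.5], [9.0, 9.0]]
```

The LLM then generates text autoregressively, attending to the projected visual tokens as if they were part of the prompt.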

Why does it matter?

Multimodal models will clearly be an important part of future AI systems. The themes outlined above have already led to very impressive models and systems, and their continued development will shape several directions that concern the AI field.

For one, powerful multimodal models will perform well on a new array of tasks and enable products and systems to be built that were not available before (e.g. GPT-4’s vision capabilities). Furthermore, multimodal models and their abilities and limitations will likely have an impact on contemporary debates about what is required to “understand” and form a model of the world around us.

That being said, multimodal models still have limitations. While image generation models such as Stable Diffusion are powerful, video generation models still produce outputs that look a bit surreal. Recent work has also identified settings in which vision-language models lack compositional and relational understanding, and has proposed potential solutions to this limitation. Multimodal models will continue to be a useful testbed for novel techniques and a forum for thinking about the relationship between language and other modalities as ways of understanding the world.

New from the Gradient

Brigham Hyde: AI for Clinical Decision-Making

Scott Aaronson: Against AI Doomerism

Other Things That Caught Our Eyes

News

That wasn’t Google I/O — it was Google AI ‘The day began with a musical performance described as a “generative AI experiment featuring Dan Deacon and Google’s MusicLM, Phenaki, and Bard AI tools.” It wasn’t clear exactly how much of it was machine-made and how much was human.’

AI Scientists: Safe and Useful AI? “There have recently been lots of discussions about the risks of AI, whether in the short term with existing methods or in the longer term with advances we can anticipate.”

OpenAI Tells Congress the U.S. Should Create AI 'Licenses' to Release New Models “Sam Altman told Congress Tuesday that AI developers should get a license from the U.S. government to release their models. Altman explained that he wants a governmental agency that has the power to give and take licenses so that the U.S. can hold companies accountable.”

Google tool to spot fake AI images after puffer jacket Pope went viral “Google has launched a new tool to help people identify fake AI-generated images online, following warnings that the technology could become a wellspring for misinformation.”

Apple’s new ‘Personal Voice’ feature can create a voice that sounds like you or a loved one in just 15 minutes “As part of its preview of iOS 17 accessibility updates coming this year, Apple has announced a pair of new features called Live Speech and Personal Voice. Live Speech allows users to type what they want to say and have it be spoken out.”

AI-powered coding, free of charge with Colab “Since 2017, Google Colab has been the easiest way to start programming in Python. Over 7 million people, including students, already use Colab to access these powerful computing resources, free of charge, without having to install or manage any software.”

Unfortunately, OpenAI and Google have moats “The companies that have users interacting with their models consistently have moats through data and habits.”

Texas A&M Prof Flunks All His Students After ChatGPT Falsely Claims It Wrote Their Papers “A number of seniors at Texas A&M University–Commerce who already walked the stage at graduation this year have been temporarily denied their diplomas after a professor ineptly used AI software to assess their final assignments, the partner of a student in his class, known as DearKick on Reddit, claims.”

Papers

Daniel: Mechanistic interpretability is a super important line of research right now, especially as we consider a future in which LLMs might be integrated into scaled, critical systems. A recent work from Stanford, “Interpretability at Scale: Identifying Causal Mechanisms in Alpaca”, scales up Distributed Alignment Search (DAS), a method that had previously uncovered perfect alignments between interpretable symbolic algorithms and fine-tuned deep learning models. The authors apply their extension, called Boundless DAS, to Alpaca 7B and discover that the model, when solving a simple numerical reasoning problem, implements a causal model with two interpretable boolean variables. I’m hopeful that we’ll see similar work scaled further and applied to the many other models coming out of industry right now.
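The core operation in DAS is an interchange intervention performed in a rotated basis: rotate the hidden representations from a “base” input and a “source” input, swap in the coordinates hypothesized to encode a causal variable, and rotate back. The sketch below is a minimal illustration assuming a fixed 2-D rotation and a hand-picked subspace; in DAS the rotation is learned by gradient descent, and Boundless DAS additionally learns the boundaries of the subspace instead of searching over them. Names and values are hypothetical.

```python
import math


def matvec(matrix, vector):
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def transpose(matrix):
    return [list(column) for column in zip(*matrix)]


def rotation_2d(theta):
    """A 2x2 orthogonal matrix. DAS learns its rotation; here it is fixed for illustration."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]


def interchange_intervention(base_hidden, source_hidden, rotation, subspace):
    """Rotate both hidden vectors, copy the coordinates in `subspace` from the source run
    into the base run, then rotate back with the transpose (the inverse of an orthogonal matrix)."""
    rotated_base = matvec(rotation, base_hidden)
    rotated_source = matvec(rotation, source_hidden)
    mixed = [
        rotated_source[i] if i in subspace else rotated_base[i]
        for i in range(len(rotated_base))
    ]
    return matvec(transpose(rotation), mixed)


rotation = rotation_2d(0.7)
base, source = [1.0, 2.0], [3.0, -1.0]
patched = interchange_intervention(base, source, rotation, subspace={0})
print(patched)  # the base representation with its rotated coordinate 0 taken from the source
```

The patched hidden state is then fed through the rest of the network; if the model’s output changes the way the high-level causal model predicts, that is evidence the chosen subspace encodes the hypothesized variable.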

Derek: People have been working hard on 3D data generation recently (especially since 2D image generation has become massively successful in the last few years). The recent OpenAI work “Shap-E: Generating Conditional 3D Implicit Functions” by Jun and Nichol tackles this by generating an implicit neural representation from a text description. Their approach uses a diffusion model to generate the weights of an MLP that gives an implicit representation of a 3D output. Shap-E generates much faster than recent optimization-based methods (e.g. DreamFusion) and produces better samples than Point-E, which generates explicit point clouds.
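Shap-E’s key move is to produce the parameters of an implicit function, which can then be queried at any 3D point. The toy sketch below shows only that last step: unpacking a flat parameter vector (for example, one produced by a generative model) into a tiny MLP and evaluating it at 3D points. The parameter layout, the single hidden layer, and reading the output as a signed-distance-like value are all hypothetical simplifications, not Shap-E’s actual architecture.

```python
def unpack_mlp(params, hidden):
    """Split a flat parameter vector into a tiny MLP R^3 -> R^hidden -> R.

    Hypothetical layout: W1 (hidden rows of 3), b1 (hidden), w2 (hidden), b2 (1 scalar).
    """
    expected = hidden * 3 + hidden + hidden + 1
    if len(params) != expected:
        raise ValueError(f"expected {expected} parameters, got {len(params)}")
    w1 = [params[3 * r:3 * r + 3] for r in range(hidden)]
    offset = 3 * hidden
    b1 = params[offset:offset + hidden]
    w2 = params[offset + hidden:offset + 2 * hidden]
    b2 = params[offset + 2 * hidden]
    return w1, b1, w2, b2


def implicit_function(params, point, hidden):
    """Evaluate f(x, y, z); here the output is read as a signed-distance-like value
    (negative inside the shape, positive outside)."""
    w1, b1, w2, b2 = unpack_mlp(params, hidden)
    h = [max(0.0, sum(w * p for w, p in zip(row, point)) + b) for row, b in zip(w1, b1)]  # ReLU
    return sum(w * hi for w, hi in zip(w2, h)) + b2


# Hypothetical "generated" parameters for hidden=1: f(x, y, z) = 2 * relu(x) - 1
params = [1.0, 0.0, 0.0, 0.0, 2.0, -1.0]
print(implicit_function(params, (1.0, 0.0, 0.0), hidden=1))   # 1.0
print(implicit_function(params, (-1.0, 0.0, 0.0), hidden=1))  # -1.0
```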

Closing Thoughts

Have something to say about this edition’s topics? Shoot us an email at editor@thegradient.pub and we will consider sharing the most interesting reader thoughts in the next newsletter! For feedback, you can also reach Daniel directly at dbashir@hmc.edu or on Twitter. If you enjoyed this newsletter, consider donating to The Gradient via a Substack subscription, which helps keep this grad-student / volunteer-run project afloat. Thanks for reading the latest Update from the Gradient!