Update #47: AI Index Report Highlights and Text-to-3D

Takeaways from Stanford's AI Index Report (including industry-academia gaps and AI misuse) and research leveraging text-to-2D models for text-to-3D.

Welcome to the 47th update from the Gradient! If you’re new and like what you see, subscribe and follow us on Twitter :) You’ll need to view this post on Substack to see the full newsletter!

Want to write with us? Send a pitch using this form.

News Highlight: Highlights from Stanford’s Annual AI Index Report

Summary

Stanford University’s Institute for Human-Centered Artificial Intelligence has published its Annual AI Index Report since 2017. Written in collaboration with various organizations in industry and academia, the AI Index report provides a thorough overview and analysis of the current state of AI research, industry adoption, market trends, and public sentiment about AI. This year, the report includes new analyses on foundation models and their socio-political impacts, such as on K-12 education. Top takeaways from this year’s report include industry racing ahead of academia in generating state-of-the-art AI research, performance saturation of new models on traditional benchmarks, and a rapid rise in the misuse of AI.

Overview

Research and Education

While it's no revelation that the number of AI publications has steadily increased over the years, the report finds that in 2021, around 60% of all published AI documents were journal articles, amounting to roughly 300,000 journal publications. Compare that to the 85,000 conference papers, the number of which has been decreasing since 2019. In fact, Figure 1.1.2 from the report shows that the only two categories of publications that have seen a steady increase in volume since 2019 are journal publications and repository submissions (such as those on arXiv).

Figure 1.1.3 from the report shows that most published AI papers fall under the “Pattern Recognition” sub-domain, followed by “Machine Learning,” which has overtaken “Computer Vision” since 2019 (though it can be argued that many papers in computer vision fall under the “Pattern Recognition” category). It is also interesting to note that Chinese institutions publish the most in the Education and Government sectors, the EU and UK take the lead in the Non-Profit sector, and American companies are comfortably ahead in their publication record (Figure 1.1.5). Overall, Chinese institutions boast a strong lead in publication volume: of the top 10 institutions in the world that publish the most AI research, only one, MIT, is in the United States, while the 9 others are based in China (Figure 1.1.21).

To compare the volume of publications against their significance, the report utilizes a database from Epoch AI, which curates a list of significant AI and machine learning systems released since the 1950s. The categorization of any publication as “significant” depends on various factors such as a paper’s state-of-the-art improvement over a benchmark, historical significance, or high citation numbers. Analyzing the number of such significant publications by region, the authors find that the United States produced 16 significant publications in 2022, followed by the UK at 8 significant publications, and China at 3. Additionally, investigating significant publications by sector reveals that since 2015, not only has the industry overtaken academia in producing more impactful research, but this gap has steadily increased. In 2022, the industry produced 32 significant publications, while academia published only 3 significant papers.

The above trend has undoubtedly had an impact on the careers of PhD students in Computer Science. While around 1 in 5 CS PhD students today specializes in AI, most of the PhD students (around 65%) head to the industry after graduation. In fact, the number of Computer Science, Computer Engineering and Information Science faculty hired in North America has stayed relatively flat since 2010 and has actually decreased since 2019 (Figure 5.1.12).

Public Opinion

A 2022 survey analyzed in the report finds that among the countries surveyed, Chinese citizens are the most optimistic about AI products and services, with around 78% of respondents agreeing with the statement that AI products have more benefits than drawbacks. China is followed by Saudi Arabia at 76% and India at 71% in this analysis. In comparison, only 35% of Americans agreed with the same statement.

Another study on the perceptions of safety of self-driving cars in the report found that 65% of respondents would not feel safe in a self-driving car. While globally, 27% of respondents reported feeling safe in a self-driving car, a study by Pew Research shows that the same number is at 26% for Americans.

An interesting survey from the report’s authors includes an analysis of the “State of the Field According to the NLP Community” in 2022, which finds that around 67% of researchers agree that “Most of NLP is dubious science”. Find numbers on other hot takes on an NLP winter in the chart below:

Technical Performance

In Chapter 2, the report analyzes the performance of new AI algorithms on various benchmarks across vision, language, and reinforcement learning and finds that while considerable progress has been made over the years, the year-over-year improvement has stagnated recently.

However, 2022 saw the development of large models that began to show improved performance on multi-task settings. Models such as Gato and Flamingo can handle various input modalities (images and text), and also execute different tasks such as question answering or game playing. Generative models reached the average consumer as companies such as Microsoft and Google integrated chatbots into their existing services. A slew of text-to-text, text-to-image, text-to-video and text-to-3D models were released that prompted the formation of new startups, and also the development of new integrations for companies such as Adobe and Canva.

However, the increased accessibility of state-of-the-art generative models has led to a rapid increase in cases of AI misuse. A study analyzed in Chapter 3 of the AI Index Report shows an almost exponential increase in the annual rate of “AI Incidents and Controversies” from 2012 to 2021.

Increase in Misuse

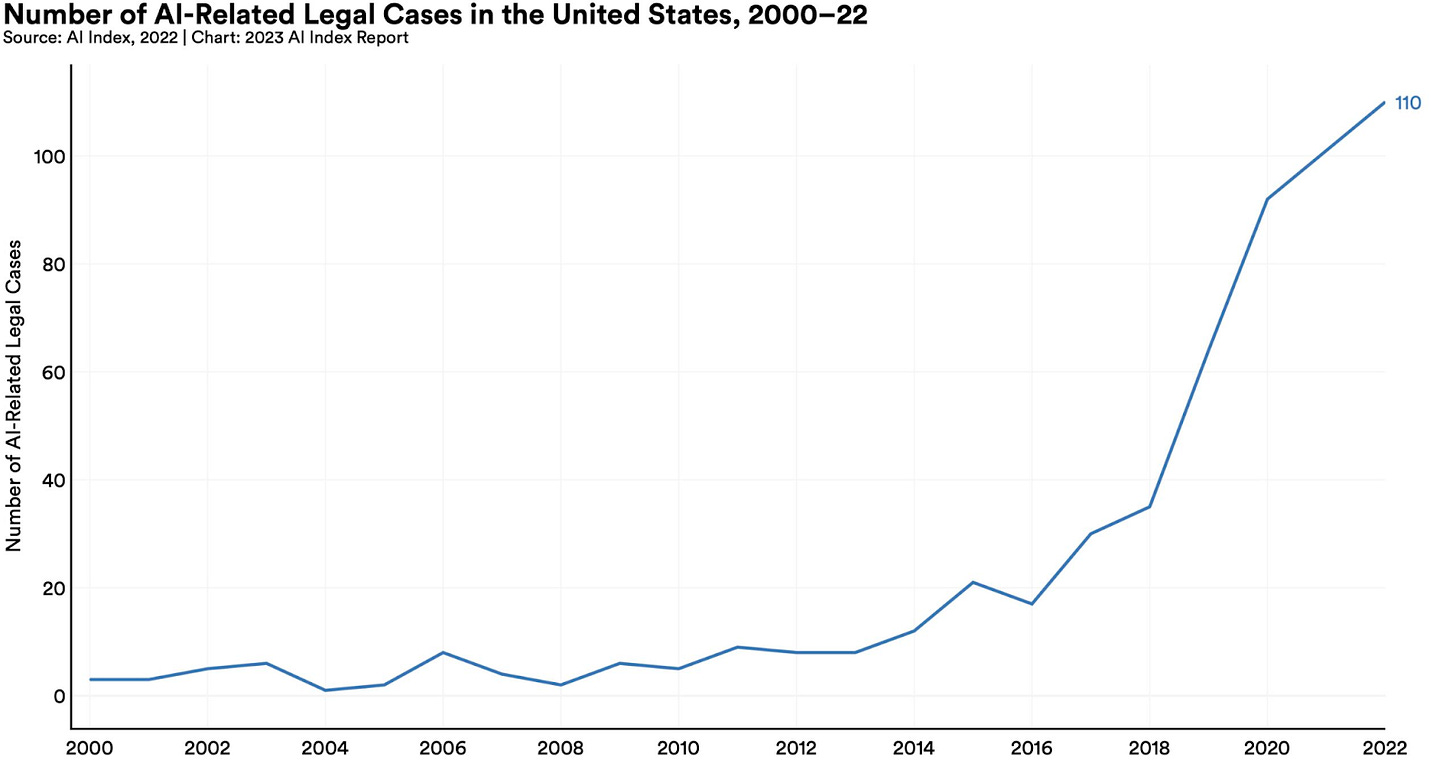

These events have been matched by a rapid escalation in the number of AI-related legal cases, which have also increased exponentially in the past decade (Chapter 6).

Overall, 2022 was a landmark year for AI as it dominated public discourse and large models made their way into the hands of millions of customers. The increase in AI-related publications and products is matched by a rise in cases of misuse and their associated legal proceedings. As the domain moves into the public spotlight, we must balance excitement and hope for the future with a careful and thorough approach to deploying these models in the real world. The AI Index report shows us not just the collective progress and exponential growth our field has seen, but also the tangible consequences technological advancement can bring.

Editor Comments

Daniel: I find the concept of relative publication volume and “importance” really interesting, especially given the constant discourse we see about the “US-China AI Cold War” and how the volume of research output translates into real-world impacts in how countries in the West and East use and export AI systems. The measures of publication importance we have are necessarily short term: we probably won’t have a sense of how important a work “really” was in the broad scheme of things until years or decades after publication. This same point applies to the “relative importance” of work in academia vs industry. The industry does indeed publish more papers that have immediate impact (and, consequently, other markers that get measured in “importance”). That seems like what you’d expect! In what many would consider an “ideal world,” academic researchers should be focused on long-term, high-risk work that would have significant implications were it successful (see my interviews with Sewon Min and Kyunghyun Cho for discussion of this and related points). To me, this makes it really hard to make judgments with the short-term information we can gather about who is doing the most important work and what directions are worth investing in; “promise” is a notion that moves in the right direction but is also limited. I don’t know that I have a clear answer to what the right notion looks like beyond vague gestures, but I do think we can do better than we are now.

Research Highlight: From Text-to-2D Models to Text-to-3D Models

Sources:

[1] DreamFusion: Text-to-3D using 2D Diffusion

[2] Instruct-NeRF2NeRF: Editing 3D Scenes with Instructions

Summary

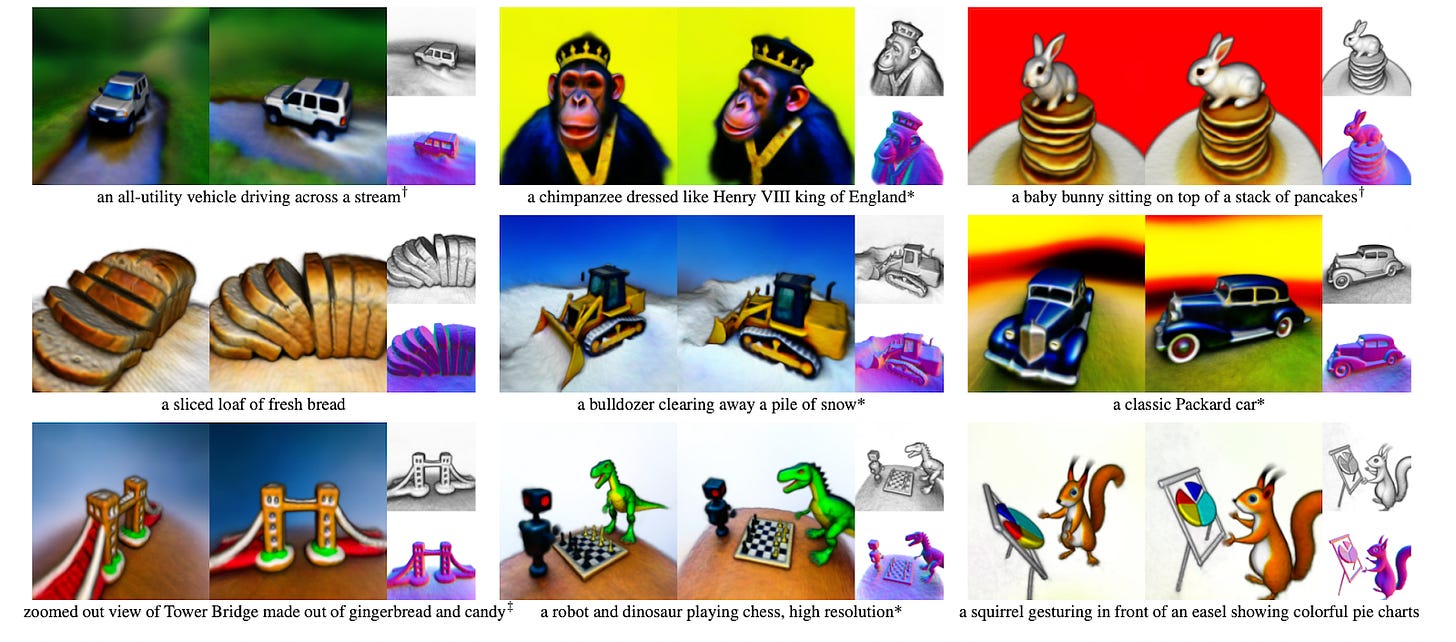

Text-to-2D image generation models such as DALL-E, Stable Diffusion, and Imagen have become very powerful in the last two years. Researchers have been working on finding similar success with Text-to-3D generation models, for generating meshes, scenes, or other types of 3D data. Several recent works leverage powerful text-to-2D models as part of a system that generates 3D data. This week we are covering two such works: DreamFusion and Instruct-NeRF2NeRF.

Overview

Some of the largest recent advances in machine learning come from text-to-2D image generation models like DALL-E 2 and Stable Diffusion. Certain application areas like computer-aided design (CAD), video games, and 3D animation require 3D data, so researchers and engineers have been working towards text-to-3D data generation as well. This problem is harder than text-to-2D for several reasons; for one, there are many fewer available pairs of 3D data and text descriptions than there are image-text pairs.

Two recent works, DreamFusion and Instruct-NeRF2NeRF, tackle this problem by leveraging powerful pretrained text-to-2D models (Imagen and InstructPix2Pix) to generate 3D data.

While 2D data has a standard representation as pixel grids, 3D data can be represented in several ways (e.g. meshes, point clouds, implicit representations), each with their own trade-offs. Both of these works use Neural Radiance Fields (NeRFs) to represent 3D scenes. In other words, both methods generate a neural network that represents a scene fitting a text description, and this neural network can be used to view the scene from any position and any viewing angle.
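To make the "neural network as a scene" idea concrete, here is a minimal sketch of how a radiance field can be turned into a pixel color with the standard volume rendering sum used by NeRFs. The `toy_field` function is a hypothetical stand-in for a trained network (it hard-codes a red ball rather than learning anything), and the sample count and ray bounds are arbitrary choices for illustration.

```python
import math

def toy_field(point, direction):
    # Hypothetical stand-in for a trained NeRF network: maps a 3D point and a
    # viewing direction to (density, rgb). Here it is a red ball of radius 1.
    inside = math.sqrt(sum(c * c for c in point)) < 1.0
    density = 5.0 if inside else 0.0
    return density, (1.0, 0.0, 0.0)

def render_ray(origin, direction, field, near=0.0, far=6.0, n_samples=120):
    # Sample points along the ray and composite their colors front to back.
    step = (far - near) / n_samples
    transmittance = 1.0  # fraction of light not yet absorbed along the ray
    color = [0.0, 0.0, 0.0]
    for i in range(n_samples):
        t = near + (i + 0.5) * step
        point = [o + t * d for o, d in zip(origin, direction)]
        density, rgb = field(point, direction)
        alpha = 1.0 - math.exp(-density * step)  # opacity of this segment
        for k in range(3):
            color[k] += transmittance * alpha * rgb[k]
        transmittance *= 1.0 - alpha
    return color

# One ray through the ball and one ray that misses it.
hit = render_ray((0.0, 0.0, -3.0), (0.0, 0.0, 1.0), toy_field)
miss = render_ray((2.0, 0.0, -3.0), (0.0, 0.0, 1.0), toy_field)
```

Rendering a full image amounts to casting one such ray per pixel from the chosen camera position and angle, which is why a single field can be viewed from anywhere.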

DreamFusion was first released in September 2022, and recently won the Outstanding Paper Award at the ICLR 2023 conference. It starts with a randomly initialized NeRF, which is trained to represent a 3D scene that fits an input text description. At each training step, an image of the current state of the scene is rendered from a random viewpoint. Noise is then added to this image, and a frozen Imagen model is used to predict the added noise, given the text input and the noised image. The difference between the predicted noise and the added noise is used to update the NeRF so that rendered views look like good images that match the text caption (as measured by Imagen), based on a novel approach that the authors term Score Distillation Sampling (SDS).
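The training step described above can be sketched on a toy problem. This is not DreamFusion's implementation: `render` and `toy_noise_predictor` are hypothetical stand-ins for the NeRF renderer and the frozen Imagen model, the "image" is just a list of three numbers, and the weighting and learning rate are assumptions. What it does keep is the shape of the update: add noise to a render, ask a frozen model to predict that noise, and move the scene parameters along the difference between predicted and added noise.

```python
import math
import random

def render(theta):
    # Hypothetical stand-in for a differentiable NeRF renderer: the "image"
    # is the parameter vector itself, so d(image)/d(theta) is the identity.
    return list(theta)

def toy_noise_predictor(noisy, alpha, sigma, target):
    # Hypothetical stand-in for a frozen text-conditioned diffusion model.
    # It assumes the clean image for the prompt is `target` and reports the
    # noise that would explain `noisy` under that assumption.
    return [(n - alpha * t) / sigma for n, t in zip(noisy, target)]

def sds_step(theta, target, rng, lr=0.5):
    image = render(theta)
    t = rng.uniform(0.02, 0.98)  # random noise level
    alpha, sigma = math.sqrt(1.0 - t), math.sqrt(t)
    eps = [rng.gauss(0.0, 1.0) for _ in image]
    noisy = [alpha * x + sigma * e for x, e in zip(image, eps)]
    eps_hat = toy_noise_predictor(noisy, alpha, sigma, target)
    weight = sigma ** 2  # assumed weighting of noise levels
    # SDS-style gradient: weight * (predicted noise - added noise), passed
    # through the renderer's Jacobian (the identity in this toy setup).
    grad = [weight * (eh - e) for eh, e in zip(eps_hat, eps)]
    return [p - lr * g for p, g in zip(theta, grad)]

rng = random.Random(0)
target = [0.2, 0.8, 0.5]  # what the toy "diffusion model" thinks the prompt looks like
theta = [rng.uniform(0.0, 1.0) for _ in target]  # randomly initialized "scene"
for _ in range(300):
    theta = sds_step(theta, target, rng)
```

Note that the frozen model is only queried, never updated, and no gradient flows through it; only the scene parameters change.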

Instruct-NeRF2NeRF is a method of editing a 3D scene to fit a text description. For example, in the top figure, a scene including a man dressed in plain clothes is edited with the instruction “Make him into a marble statue.” Instruct-NeRF2NeRF is built off of InstructPix2Pix, a text-to-2D diffusion model that takes in an image and text instruction, and edits the image based on the text instruction. Instruct-NeRF2NeRF takes in a text instruction, a NeRF, and the training set of images, positions, and viewing angles used to train the NeRF. To get an output NeRF that edits the scene based on the text instruction, the authors use a particular training process starting from the input NeRF. First, the NeRF is used to render an image from the current scene at a training viewpoint. Then this image is edited to follow the text instruction using InstructPix2Pix, and the training dataset image is replaced with this edited image. This process continues iteratively.
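The iterative loop described above can be sketched with scalars standing in for images. Everything here is a toy assumption: `edit_image` is a hypothetical stand-in for InstructPix2Pix that nudges an image partway toward an "edited look", the scene is a single number instead of a NeRF, and `fit_scene` is one gradient step on a squared error. The point is only the control flow: render, edit, replace the dataset image, keep training.

```python
def render(scene, view):
    # Hypothetical stand-in for rendering the NeRF at a training viewpoint;
    # the toy scene looks the same from every view.
    return scene

def edit_image(image, edited_look, strength=0.5):
    # Hypothetical stand-in for InstructPix2Pix: moves the image partway
    # toward what the instruction asks for.
    return image + strength * (edited_look - image)

def fit_scene(scene, dataset, lr=0.25):
    # One gradient step on the mean squared error between the scene's
    # renders and the current dataset images.
    grad = sum(2.0 * (scene - img) for img in dataset) / len(dataset)
    return scene - lr * grad

def iterative_dataset_update(scene, dataset, edited_look, n_iters=200):
    dataset = list(dataset)  # work on a copy of the training images
    for it in range(n_iters):
        view = it % len(dataset)
        rendered = render(scene, view)                     # 1. render current scene
        dataset[view] = edit_image(rendered, edited_look)  # 2. edit, 3. replace
        scene = fit_scene(scene, dataset)                  # 4. keep training
    return scene, dataset

original_views = [0.0, 0.0, 0.0, 0.0]
edited_scene, edited_views = iterative_dataset_update(
    0.0, original_views, edited_look=1.0
)
```

Because each edit is applied to a render of the current scene rather than to a fixed image, the edits from different views are gradually pulled into agreement by the shared 3D representation.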

Why does it matter?

These models show that the recent progress in text-to-2D can be effectively leveraged for text-to-3D generation. Both of these methods generate each 3D scene independently using the text-to-2D model, but we may see even more progress if there can be some learned model that shares information across many 3D scenes. The quality of the generated 3D data is already impressive, and as these models continue to advance, we can expect to see even more stunning results.

There is still much work to be done in 3D generation. These two methods are effective but slow; to generate one 3D scene, they require solving an optimization problem using iterative gradient-based methods. For instance, DreamFusion requires about 1.5 hours to generate a 3D scene on a TPUv4 machine with 4 chips, whereas text-to-2D models like DALL-E 2 and Stable Diffusion can generate images in seconds. Also, these models inherit some limitations from the text-to-2D models that they are built on, such as biases from text-image caption datasets, and an inability to perform large edits like completely deleting a statue in a scene. There are also some 3D-specific limitations. In Instruct-NeRF2NeRF, it is hard to edit a shirt to have a checkerboard pattern. The 2D model is capable of generating images with a checkerboard pattern, but the checkers are inconsistent, in that they are at different parts of the shirt in different views.

New from the Gradient

Daniel Situnayake: AI at the Edge

Soumith Chintala: PyTorch

Other Things That Caught Our Eyes

News

Edge Impulse launching new way to optimize models for any edge device “With ‘Bring Your Own Model’ and our new Python SDK, we have launched a whole new way to use Edge Impulse. It’s designed to make ML practitioners feel incredibly productive with edge AI, taking their existing skills and applying them to an entirely new domain — hardware — while working confidently next to embedded engineers.”

February 2023 Robotics Investments Total US $620 Million “Robotics funding for the month of February 2023 totaled $620M, the result of 36 investments. The February investments bring the 2023 totals to approximately $1.14B.”

Three ways AI chatbots are a security disaster “AI language models are the shiniest, most exciting thing in tech right now. But they’re poised to create a major new problem: they are ridiculously easy to misuse and to deploy as powerful phishing or scamming tools. No programming skills are needed. What’s worse is that there is no known fix.”

Mozilla Launches Responsible AI Challenge “At Mozilla, we believe in AI: in its power, opportunity, and potential to solve the world’s most challenging problems. But we shouldn’t do this alone. That’s why we are relaunching the Mozilla Builders program with the Responsible AI Challenge.”

Instant Videos Could Represent the Next Leap in A.I. Technology “Ian Sansavera, a software architect at a New York start-up called Runway AI, typed a short description of what he wanted to see in a video. ‘A tranquil river in the forest,’ he wrote.”

AI Is Getting Powerful. But Can Researchers Make It Principled? “Soon after Alan Turing initiated the study of computer science in 1936, he began wondering if humanity could one day build machines with intelligence comparable to that of humans. Artificial intelligence, the modern field concerned with this question, has come a long way since then.”

Sequoia and Other U.S.-Backed VCs Are Funding China’s Answer to OpenAI “A boom in artificial intelligence startup funding sparked by OpenAI has spilled over to China, the world’s second-biggest venture capital market. Now American institutional investors are indirectly financing a rash of Chinese AI startups aspiring to be China’s answer to OpenAI.”

Canada Opens Probe into OpenAI, the Creator of AI Chatbot ChatGPT “Canada declared that it has started a probe into ChatGPT, the popular AI chatbot created by the US-based software company OpenAI.”

Papers

Daniel: I’m excited about a paper on… Grounding! Modern large language models’ (LLMs’) ability to produce seemingly meaningful text has rekindled interest in the “Symbol Grounding Problem,” which questions whether AI systems’ internal representations and outputs bear intrinsic meaning. In “The Vector Grounding Problem,” Mollo and Millière extend this interrogation to LLMs that compute over vectors rather than symbols. They come to the (rather surprising!) conclusion that LLMs do have the resources to overcome the vector grounding problem when we think of grounding as “referential grounding,” or what makes our representations “hook onto the world.”

Derek: A paper introducing HyperDiffusion for generating implicit neural representation weights gives an interesting approach for 3D generation. They fit implicit neural representations to represent 3D data, then train a diffusion model directly in the parameter space of these implicit neural representations so that they may later generate 3D data (by generating parameters of implicit neural representations). This then allows them to generate both 3D and 4D data (e.g. moving objects) using essentially the same model, only differing in positional encoding.

Closing Thoughts

Have something to say about this edition’s topics? Shoot us an email at editor@thegradient.pub and we will consider sharing the most interesting thoughts from readers in the next newsletter! For feedback, you can also reach Daniel directly at dbashir@hmc.edu or on Twitter. If you enjoyed this newsletter, consider donating to The Gradient via a Substack subscription, which helps keep this grad-student / volunteer-run project afloat. Thanks for reading the latest Update from the Gradient!