Update #46: GPT-4 and Modular Reasoning for Visual Question Answering

We recount what we've learned about GPT-4 so far, and discuss ViperGPT and VisProg.

Welcome to the 46th update from the Gradient! If you’re new and like what you see, subscribe and follow us on Twitter :) You’ll need to view this post on Substack to see the full newsletter!

Want to write with us? Send a pitch using this form.

News Highlight: GPT-4

Summary

OpenAI recently announced GPT-4, a large multimodal model that inputs text and images, and outputs text. This highly anticipated successor to the GPT-3 and GPT-3.5 models showcases a significant improvement in capabilities. Users can access GPT-4 directly in the OpenAI API, or via the products of several partner companies.

Background

The GPT-4 technical report provides minimal technical details, merely stating that “Transformer-style model pre-trained to predict the next token in a document, using both publicly available data (such as internet data) and data licensed from third-party providers.” After this standard LLM pretraining, GPT-4 was finetuned via Reinforcement Learning from Human Feedback (RLHF).

Differently from previous GPT models, GPT-4 accepts visual input, which, for instance, allows it to answer questions about an input image; thus, in addition to its capabilities as an LLM, GPT-4 continues the line of work that develops multimodal models (e.g., OpenAI’s CLIP or DALL-E models and the Flamingo model from DeepMind). Citing “the competitive landscape and the safety implications of large-scale models like GPT-4,” the report does not provide any further information about the exact architecture, model size, hardware, or training data.

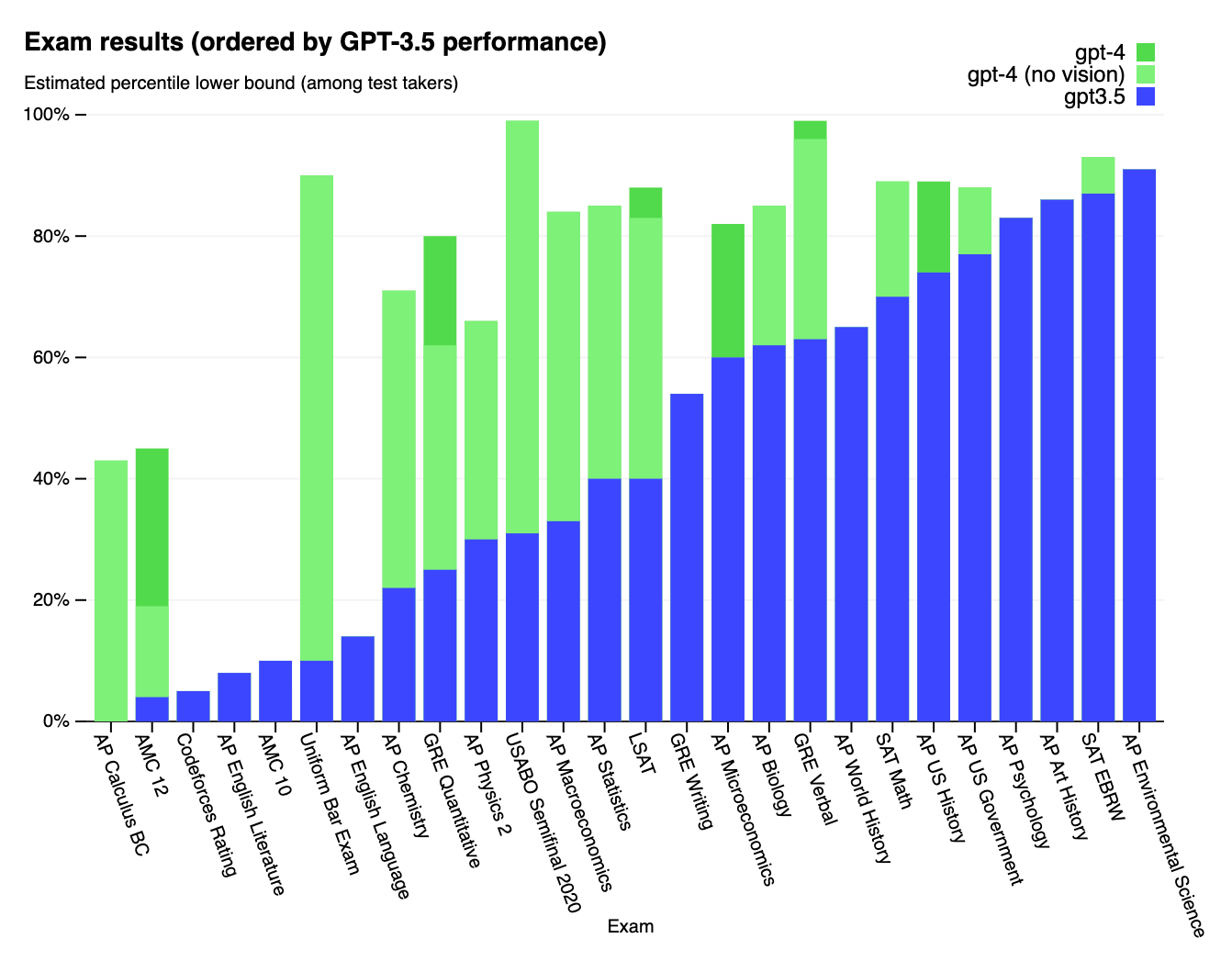

GPT-4 improves significantly over previous models like GPT-3.5 and many domain-specific models on various benchmarks. Notably, GPT-4 was tested on an assortment of academic and professional exams (see above Figure), including the AP, SAT, GRE, and LSAT exams; performance was significantly higher than GPT-3, and is at or above human level for many exams (e.g. on many AP exams, and on the GRE).

Microsoft researchers recently released a study on the capabilities of earlier versions of GPT-4, before training was fully completed. With experiments in tasks involving mathematics, coding, and rhetoric (e.g. writing a proof of a theorem, where every line of the proof rhymes), these researchers contend that GPT-4 can be argued to be an “early (yet still incomplete) version of an artificial general intelligence (AGI) system.”

While GPT-4 certainly shows a large jump in capabilities, many of these reported results have received criticism. OpenAI took some measures to use testing data that had not been seen before, but their technical report notes that there is still substantial contamination of testing data in several exams. Horace He, Arvind Narayanan, and Sayash Kapoor provide several types of evidence that GPT-4 can regularly solve Codeforces problems that it has seen during training, but struggles with unseen problems. Without further information on GPT-4’s training data, it remains unclear how other tests of its capabilities may be confounded by data contamination.

Why does it matter?

GPT-4 can be used through the ChatGPT interface or the OpenAI API, though image input capabilities are not yet available through these channels. Additionally, OpenAI has partnered with various companies to incorporate GPT-4 into products. For instance, Microsoft’s Bing, Khan Academy's Khanmigo, Duolingo Max roleplay bot, and Morgan Stanley’s new knowledge base organization technology all use GPT-4 to understand text input and generate text output. Thus, GPT-4 is already reaching many users.

As machine learning models like GPT-4 continue to improve and integrate into real-world products that impact a wide array of users, they are also having more impact on more of society. These models are becoming more proficient at many tasks and jobs that humans do. A recent study by OpenAI, OpenResearch, and the University of Pennsylvania suggests that “approximately 80% of the U.S. workforce could have at least 10% of their work tasks affected by the introduction of GPTs, while around 19% of workers may see at least 50% of their tasks impacted.” Thus, such models may profoundly impact the labor market and society as a whole. While GPT-4 provides some hints, it is unclear what exactly that impact will look like. In light of these advancements, it is crucial for researchers, policymakers, engineers, and industry to collaborate in addressing the ethical, social, and economic implications of such transformative technologies as they continue to reshape our world.

Editor Comments

Daniel: Yes, this newsletter is coming two weeks after the fact and this is the millionth piece of GPT-4 coverage you’ve seen, but it’s essentially impossible not to cover this—I think we still have lots to talk about. A paper from Microsoft hinted at ‘Sparks of AGI’ and a recent YouTube video says this is not hype, that the “unrestrained GPT-4” might just be on the road. It’s indeed almost disconcerting that tool use is an emergent capability in GPT-4 and might presage more to come. I’m impressed, but there’s still much left to do. We’ve seen that there is likely data contamination and plenty of memorization going in GPT-4, as evidenced by its performance on coding problems after a certain date. My college metaphysics professor, who (a bit embarrassingly for me) has probably spent more time with ChatGPT (and GPT-4) than I have, has run headfirst into some of its limitations. If you ask GPT-4 to explain Avicenna’s cosmological argument in a way that’s convincing to someone who’s not already somewhat on board… you don’t get as far as we’d hope.

According to the Microsoft researchers, GPT-4 can be convincing—recent work like CICERO buttresses the argument that contemporary large language models can convince people of things. That said, even if you agree with François Chollet that we haven’t begun to walk down the correct road to “real intelligence” yet, these systems are powerful and not well understood. Jan Leike is right to question whether it’s wise for us to integrate LLMs everywhere we can as soon as we can: it wasn’t long ago that many complained of neural networks’ lack of robustness guarantees, but now many seem ready to put them in critical infrastructure. I feel some what’s been said about GPT-4 so far feels reductionist in different ways: the utterly unimpressed don’t take GPT-4 seriously enough, but others may go too far in the other direction. Sam Altman himself seems to have a clear head about this—he has been careful to call GPT-4 a “tool” that will be superseded in the years to come.

But it is a powerful tool, and one we don’t understand very well. I don’t know that it’s inevitable that our understanding needs to lag so far behind current capabilities, but the resolution to that gap isn’t an easy one. We would either need to slow down capability development (which some have called for, but I’m skeptical that researchers and organizations will actually do), or substantially increase investment and speed in research that seeks to understand these models better. At the very least, we could consider slowing down adoption of state-of-the-art systems in critical contexts until we have a better handle on risks and mitigation strategies.

Things are probably going to get weird in the next few years, and I don’t know if LLMs everywhere in our economy is something we want just yet—correlated failures seems like the right worry to have. But we should understand what we’ve already set ourselves up for—I’m hoping to bring on an economist to talk about all of this soon. It’s worth being open-minded and welcoming of new ideas and taking LLMs seriously, but at times like these it’s also more critical than ever to exercise epistemic humility, to approach claims with caution, and to engage in slow deliberation and make informed judgements. I’ll agree with accelerationists that degrowth doesn’t seem like an option at this stage, but I don’t think building is the only imperative we should accept. Building a hammer won’t do you much good if you don’t know where the handle is and can’t use it well. This was already a long (and inevitably reductive) comment, but we are going to have to learn a lot about how to manage complexity in the coming years.

Research Highlight: Modular Reasoning for Visual Question Answering With ViperGPT and VisProg

Summary

Visual Question Answering (VQA) is the task of answering questions based on an input image. Current methods for this problem train end-to-end models that directly generate text responses to the queries. However, such models often lack interpretability and do not generalize as well to domains outside the training distribution. To improve upon existing work and utilize the ability of internet-scale models to learn “in-context”, two recent papers, ViperGPT and VisProg, use LLMs to output an executable program that calls user-defined API functions for tasks such as semantic segmentation, object detection, and image inpainting. This methodology makes the model more interpretable; its API calls, as well as intermediate outputs, can be read and analyzed by a human. Additionally, the network learns to reason about an input query in the context of the input image in an open-ended manner, enabling the model to generalize to different domains. ViperGPT outperforms prior methods on zero-shot generalization on the GQA dataset and VisProg shows better generalization across tasks when learning from many examples on the same dataset as compared to prior methods.

Overview

Neural Module Networks pioneered the space of composing vision modules to address the VQA problem. The hypothesis behind this idea is that vision modules can inherently be combined to answer many queries, meaning that modules can be executed sequentially with some functions operating on the outputs of a prior module. Consider the prompt “How many white horses are there in this image?”. Given a detector for horses, you may first gather the predicted bounding boxes for the “horse” category in the image, then pass these through a color thresholding module to determine how many of the horses are white, and then return the final number. While models were previously limited by a module’s ability to detect or segment a finite set of objects, it is now possible to pass in free-form and abstract queries without explicit limitations with the introduction of large visual-language models.

Both ViperGPT and VisProg utilize an LLM to generate an executable program that utilizes a pre-defined API, including functions such as detect(image, obj_category) or segment(image, obj_category). VisProg also utilizes the “in-context learning” abilities of LLMs, which allow a language model to respond to unseen queries when given a few examples of the input and output behavior. In VisProg, the authors prompt the model with examples of a query and the corresponding symbolic program that should be written to answer the question. In contrast, ViperGPT passes the specification of all the functions in the API available to the model and does not include examples of the expected output. In ViperGPT, only the docstrings and the inputs and outputs for each function in the API are provided in the prompt, not the source code itself.

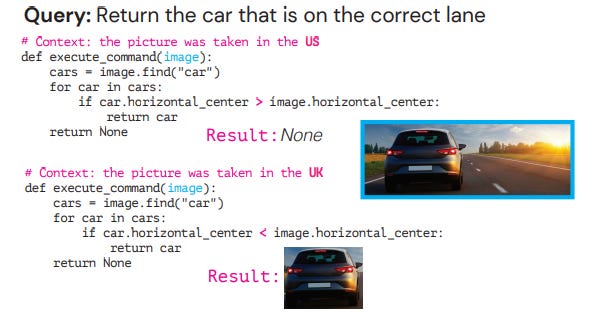

Another difference between the two models is in the code they generate. VisProg outputs a general symbolic program that is inherently language-agnostic: the sequence of symbolic calls VisProg generates must be parsed to be executed. This can be advantageous if users don’t want to specifically run programs in Python—the same symbolic outputs from the LLM can be parsed to executable code in any language. ViperGPT, however, utilizes OpenAI’s Codex to directly write Python code that can simply be compiled and executed. The ability of both of these models to write code to answer input queries significantly enhances their interpretability. The ease of understanding these models’ reasoning is further enhanced as they only make calls to user-defined functions, whose intermediate outputs can be visualized easily. Here’s an example of such a visualization from VisProg:

Both models show interesting capabilities that extend beyond simple question answering. By introducing a VideoSegment class in the API, ViperGPT’s authors extend their model to answer questions about videos, achieving ~50% accuracy on the NExT-QA dataset designed for temporal VQA. In a similar vein, VisProg can perform zero-shot reasoning on image pairs by breaking down its input original query into smaller questions and a Python expression involving arithmetic and logical operators. The model outperforms prior methods on the NLVr2 dataset in this setting.

ViperGPT can also learn from additional context provided alongside the image. For instance, given an image of a car driving on a road and the context “This image was taken in the UK”, ViperGPT can change its output to account for varying traffic regulations:

It should also be noted that these modules have different trade-offs and shouldn’t be compared without qualification. As mentioned previously, VisProg relies on in-context learning and hence requires some input-output examples in its prompt, while ViperGPT only needs knowledge of the API specification. Hence, VisProg outperforms all prior methods on the GQA dataset, but ViperGPT excels in the zero-shot setting on the same dataset.

Why does it matter?

These works strike a balance between utilizing the emergent abilities of LLMs while providing structure through a set of API calls. Additionally, as both of these models directly generate programs, their outputs can be easily integrated into existing codebases. Since the models do not depend on LLMs beyond simply querying them with structured prompts, the models themselves will improve as we improve LLMs in the coming years. VQA tools like these also have a significant potential in decreasing the barrier to access to vision-based AI tools for individuals without a computer science background. For instance, ViperGPT or VisProg could be used in various consumer-facing applications by adding a chatbot-like interface to segmentation and detection tasks (“Does this manufactured item have a defect?”, “How many people came into my store yesterday?”).

Q&A With A Subject Matter Expert

Q: Since the model relies on a finite number of modules, how do you see future works overcoming this limitation beyond scaling the number of modules available?

Firstly, the number of fundamental vision tasks requiring specific modules is relatively limited. Although it is possible to develop more modules, the core strength of these methods resides in the ability to combine a few basic modules to perform a wide array of tasks. Secondly, not all modules are essential in every computer vision framework. Users can create their own APIs with the necessary modules for their specific use case. For instance, an Optical Character Recognition (OCR) module may be unnecessary if the application does not involve text. Lastly, the upcoming GPT-4 model with its 32k context window should be capable of accommodating a vast array of fundamental tasks.

Q: Follow Up to the above question, do you think as we get better LLM's to write code, could an LLM write its own module to scale? In what ways would that actually help vs defeat the purpose of having a user-defined API in the first place?

LLMs can certainly contribute to creating their own modules. In the future, I envision LLMs detecting missing modules and potentially developing new ones, possibly with assistance from internet browsing to identify available pre-trained modules. However, it is important to note that user-defined APIs also serve to provide guard-rails to guide the intended functionality.

Q: Since a single forward pass of the model requires not just the LLM to run inference, but also various state-of-the art visual-language models, detectors, depth estimators, etc to run too, what compute limitations do you anticipate with this project? Which domains do you think might be the most feasible to utilize these models in?

With proper engineering techniques, such as batching inputs from different processes, the approach can be highly efficient. The primary computational constraint is the rate limit imposed by OpenAI when querying Codex. As open-source code models advance, I expect this issue to be resolved. One advantage of both of these frameworks is their ability to seamlessly integrate improvements in the modules employed. For instance, if a model is distilled into a smaller, faster version, one can easily replace the existing module with the new one by modifying a single line of code.

Q: The authors from ViperGPT discuss using external knowledge from GPT3 to inform the code written by Codex. In what ways do you think incorrect data or hallucinated information is likely to make its way thorugh to the pipeline here?

In the ViperGPT approach, GPT-3 does not directly inform Codex; instead, Codex queries GPT-3 when necessary, without conditioning the code on GPT-3's output. Regarding the knowledge accessed, it is true that querying LLMs may generate hallucinated information. However, ViperGPT’s approach is relatively robust against this for two reasons. First, any non-LLM knowledge base system could be used as an alternative to LLMs, which would be beneficial in more restricted domains where the knowledge base encompasses all potential queries. Second, when using LLMs, the input questions are more targeted, as they form only one step in the multi-step code execution process. The LLM does not need to hallucinate information about the image or context, as these are already provided and reasoned about. After this reasoning process, one could query GPT-3 for knowledge, resulting in specific, precise questions like “Does Dr Pepper contain alcohol?”, which can be more easily audited for hallucination. Moreover, with tools like ChatGPT plugins, it is possible to envision an interface with GPT + WolframAlpha or other plugins that increase the knowledge base reliability.

New from the Gradient

Sewon Min: The Science of Natural Language

Richard Socher: Re-Imagining Search

Other Things That Caught Our Eyes

News

People Aren’t Falling for AI Trump Photos (Yet) “Fake images of Trump getting arrested may not fool anyone—but the next thing cooked up by AI might. On Monday, as Americans considered the possibility of a Donald Trump indictment and a presidential perp walk, Eliot Higgins brought the hypothetical to life.”

Microsoft threatens to restrict data from rival AI search tools “Microsoft Corp (MSFT.O) has threatened to cut off access to its internet-search data, which it licenses to rival search engines, if they do not stop using it as the basis for their own artificial intelligence chat products, Bloomberg News reported on Friday.”

We Need to Talk (Just as Soon as I Consult ChatGPT) “Faced with challenging situations in parenting, romance or work, some are getting by with a little help from their A.I. friends. Todd Mitchem kept struggling to have honest, productive conversations with his son. “He’s 15,” Mr. Mitchem, 52, said with a laugh.”

Microsoft brings OpenAI’s DALL-E image creator to the new Bing “Microsoft today announced that its new AI-enabled Bing will now allow users to generate images with Bing Chat. This new feature is powered by DALL-E, OpenAI’s generative image generator.”

Microsoft subsidiary Nuance is using GPT-4 for a new physician notes app “Microsoft-owned AI company Nuance is leveraging GPT-4 to power new software designed to ease the burden of clinical documentation for physicians. Nuance, acquired last year by Microsoft for $19.”

Samsung’s Moon Shots Force Us to Ask How Much AI Is Too Much “Have you heard the moon conspiracy theory? No, not the one about the moon landings. It’s about the Samsung Galaxy S23 Ultra, and the theory it fabricates pictures of the moon, creating images that are far more detailed than the camera itself can actually capture.”

Papers

Daniel: There are too many things from the last few weeks I’m pretty excited about, so here’s a bag of papers (ha, see what I did there). I’ve been interested in the question of steerability in pre-trained generative models, and a few works come at this in different ways. “Ablating Concepts in Text-to-Image Diffusion Models” does… essentially what the title says! At a high level, to prevent generation of an undesired “target concept,” the researchers formulate an objective that seeks to minimize the distance between two distributions: that of a sequence of noised images conditioned on the target concept, and that of a sequence of noised images conditioned on an “anchor concept” that overwrites the anchor concept and is a superset of similar to the target concept (e.g., “cat” is an anchor concept corresponding to the target concept “grumpy cat”).

Meanwhile, “Erasing Concepts from Diffusion Models” proposes a method for fine-tuning model weights to erase concepts from diffusion models, given text referring to the concept to be erased. This method also uses a model’s own knowledge to steer diffusion away from an undesired concept. In this case, focusing on Stable Diffusion, the authors negate a concept by guiding a model’s conditional prediction away from the concept.

Third, I think a natural question about contrastively trained vision-language models (e.g. CLIP) is whether they have compositional understanding of images. CLIP is trained on image-caption pairs like a photo of a horse eating grass with the caption “a horse is eating grass”—can it separate out the concept of “horse” from that of “grass,” and does it know the difference between the original caption and “grass is eating horse”? An ICLR paper “When and Why Vision-Language Models Behave Like Bags-of-Words” says no, and proposes a fix: mining of composition-aware hard negatives.

Finally, because I’m an (empirical) theory nerd, I have to call out “The Low-Rank Simplicity Bias in Deep Networks” which has had some updates since its initial submission to Arxiv. I’m glad the authors have continued to work on this paper.

Derek: Just from reading the title of the paper “Three iterations of (d − 1)-WL test distinguish non isometric clouds of d-dimensional points.” (https://arxiv.org/abs/2303.12853), I was very excited. This work is a nice theoretical work about point cloud models that are invariant to Euclidean symmetries (rotations, reflections, permutations, translations). Such models are important to processing physical data, such as molecules, proteins, 3D objects in robotics or vision, and many other types of data from the natural sciences. It shows that geometric versions of the Weisfeiler-Lehman (WL) graph isomorphism algorithms, when using enough dimensions, are maximally powerful for symmetry invariant processing of point clouds. Thus, neural networks based on these WL algorithms are a promising direction to explore. Also, the fact that only so few iterations are needed means that shallow models are theoretically expressive for these tasks; future empirical and theoretical work that explores this could be interesting.

Tanmay: I really like the RoboNinja paper from Dr. Shuran Song’s lab at Columbia. It teaches a robot to cur fruits that have a hard core in the middle, and shows impressive results at cutting an avocado, a mango, a peach, a plum and even bone-in meat. RoboNinja uses an interactive state estimator and an adaptive cutting policy to achieve this. The state estimator uses sparse collision information to iteratively estimate the position and geometry of an object's core. The adaptive cutting policy generates closed-loop cutting actions based on the estimated state and a tolerance value. RoboNinja was developed on a differentiable cutting simulator that supports multi-material coupling and generates optimized trajectories as demonstrations for policy learning. Furthermore, by using a low-cost force sensor to capture collision feedback, RoboNinja was able to successfully deploy the learned model in real-world scenarios.

Tweets

not trying to say anything here, I just can’t get enough of these

Closing Thoughts

Have something to say about this edition’s topics? Shoot us an email at editor@thegradient.pub and we will consider sharing the most interesting thoughts from readers to share in the next newsletter! For feedback, you can also reach Daniel directly at dbashir@hmc.edu or on Twitter. If you enjoyed this newsletter, consider donating to The Gradient via a Substack subscription, which helps keep this grad-student / volunteer-run project afloat. Thanks for reading the latest Update from the Gradient!

I lead an ML team (applied, not research). Obviously these models are amazing, but also so underwhelming. Every time I’ve asked any of these models to do anything other than summarize general knowledge it was trained on it fails. For example, I thought maybe GPT 3.5 could help me in my literature reviews and summarize papers for me. Even with not very technical papers at best I got a bad summary of the abstract. A high percentage of the time I got back pure hallucination. Sparks of AGI is typical big tech hype.