Gradient Update #8: Robot Taxes and Foundation Models

In which we discuss a new proposal to tax “robot labor” and the new report on “Foundation Models” such as GPT-3 from Stanford’s AI Lab.

Welcome to the eighth update from the Gradient! If you were referred by a friend, subscribe and follow us on Twitter!

News Highlight: Taxing the Robots?

This edition’s news story is Tax, not the Robots.

Summary With the growing use of robots in industries and workplaces, numerous politicians and entrepreneurs have started expressing their support for the introduction of a "robot tax". The idea behind the tax is that workplaces are replacing human labor with robots, which increases unemployment. Therefore, to disincentivize firms from replacing human labor with robots, a tax of some form should be enforced. For example, whenever a firm replaces a human worker with a robot, it’s required to pay added tax to the government. This safeguards human employment. Moreover, even if workplaces forgo their human workers in favor of robots despite this policy, the robot tax would then act as a proxy for the revenue lost via payroll taxes.

While the underlying goal of maintaining employment and government revenue streams is understandable, taxing robots is a double-edged sword. As it turns out, things are not as black and white as they might seem. Robots do not always replace humans in the workplace, and some work in conjunction with the human workforce and handle the most strenuous tasks for them. Enacting a robot tax would slow the pace at which robots are adopted in industry, taking away the many advantages they offer to workers. Thus, a robot tax can be argued to be a ‘throw the baby out with the bathwater’ approach to handling the adoption of automation, and there are various approaches that may be more appropriate.

Background Increased mechanization and improvements in engineering practices and materials were primary factors in the Industrial Revolution in Europe and the United States. The idea of replacing or optimizing human labor with machinery is therefore nothing new. But while many people, especially manual laborers in primary industries, did lose jobs to mechanization in the short run, the resulting growth of businesses that used machinery created employment opportunities in the long run. Likewise, the advent of computers in the 1980s and 1990s boosted productivity and created jobs but simultaneously destroyed the jobs of typists and file clerks.

Naturally, the same tension surfaces in the discussion of robot taxes. Today, we’re in an age that many are referring to as the 4th Industrial Revolution. The combined forces of artificial intelligence, robotics, quantum computing, and genetic engineering are changing the way we live and work. So, while some workers have certainly lost their jobs due to mechanization, robots do not always spell trouble for humans in the workplace. In fact, studies have shown that the assertion that robots are taking our jobs is not well-founded. Research shows that firms using robots experience more employment growth than those that do not. The same firms also show signs of being more productive, and their employees generally perform better. By the same token, similar studies show that firms that are slow to adopt robots see decreases in employment.

Furthermore, in many cases, robots at workplaces act as helpers for human workers, not direct replacements. This is especially true for the medical and health industries. Hard, taxing, manual labor at such workplaces leads to high labor turnover, but robots designed specifically to handle the most laborious tasks reduce this turnover rate. Introducing a robot tax would slow the adoption of robots in exactly those industries where there is a need to ease the burden of work on human labor.

Why does it matter? With the growth of nanotechnology, artificially intelligent robots, and neural networks that show faint signs of algorithmic insight, there is a conversation to be had on the usage of robots and automation in our workplaces. For now, large-scale job losses from artificial intelligence and automation are mostly a theoretical worry. Even so, tax economists and policymakers need to plan for the decades ahead, and their insight needs to penetrate the cloud of current economic circumstances. While it is clearly a good idea to work preemptively to avoid the negative consequences of robot adoption, the robot tax may be a half-baked and counterproductive solution. A better approach would be to focus on reforming policies surrounding the present labor and capital taxes instead.

Editor Comments

Hugh: It is indeed very strange that the US tax system privileges returns from capital (e.g. robot workers) over regular income (e.g. from labor), but a “robot tax” sounds like a band-aid for a much larger problem, which is what do we do now that technology has exacerbated wealth inequality to levels approaching (and perhaps soon exceeding) that of the Gilded Age? I think we’re going to need more radical solutions, like a universal basic income or a complete overhaul of the taxation system, to ensure that the coming AI revolution produces a future that benefits everyone instead of just a select few.

Andrey: I tend to think this is a pretty complex topic, and so am wary of taking a stand on what policy solutions are best. However, I am sure that some policy measure is needed to lessen the blow of automation for human workers. In particular, autonomous driving is poised to hit many truck and Uber/Lyft drivers eventually. This may take a while, as we’ve seen the technology is hard to get just right and deploy, but I do think it’s just a matter of time and is likely to happen within a decade. With there being millions of truck drivers in the US, the impact seems likely to be significant, if gradual. A robot tax may be part of the solution for slowing down the transition to laying off workers, but it does seem like it could only work alongside other policies aimed at creating jobs not as hard hit by automation and setting people up for them in the first place.

Daniel: My broad thoughts align with Andrey’s and Hugh’s—I think the advent of novel technologies can often create friction and pain (like humans losing jobs), and managing that transition period is important to mitigate concerns, but as Andrey pointed out a tax might not do much besides managing a transition. I think we’re still figuring out what the best long-term policies are for these sorts of changes, and we will certainly need more creative and far-reaching solutions to deal with forthcoming changes.

Paper Highlight: Foundation Models

This week’s paper is On the Opportunities and Risks of Foundation Models.

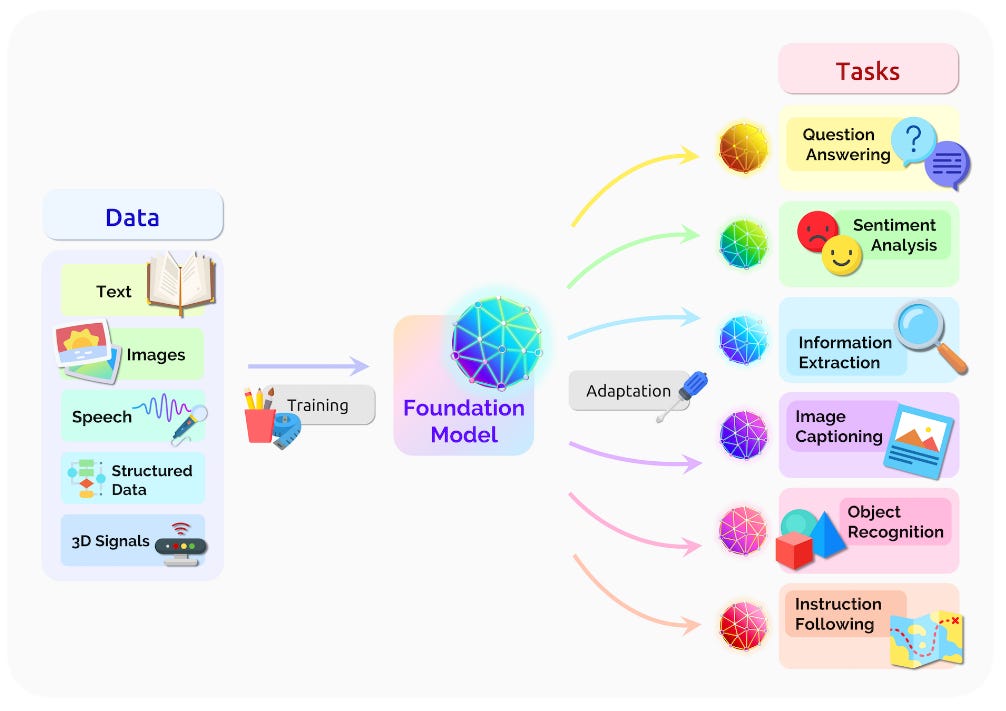

Background The main background this paper requires is familiarity with transfer learning: using knowledge gained from pretraining on one task (e.g. object recognition in images) to improve performance on another (e.g. activity recognition in videos). A common way to do this is to train a neural network on one task and then reuse its learned representations for a different downstream task, which makes it possible to get high performance on the downstream task with much less data. A notable variant of this process is self-supervised learning, where the pretraining task is set up with unlabeled data. For example, BERT is trained by predicting a missing word in a sentence given its surrounding context. Transfer learning via fine-tuning is far from new, having been commonplace in Deep Learning for close to a decade, but gigantic models such as GPT-3 have demonstrated that this technique can be even more powerful than we thought.
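To make the self-supervised setup a bit more concrete, here is a minimal sketch of how unlabeled text can be turned into "predict the missing word" training examples. It is only an illustration: the function name `make_masked_examples` and the `[MASK]` placeholder string are assumptions made for this example, and real BERT pretraining works on subword tokens and masks a random subset of them rather than every word in turn.

```python
def make_masked_examples(sentence, mask_token="[MASK]"):
    """Turn one unlabeled sentence into (masked_input, target_word) pairs.

    Hypothetical helper for illustration: each word is hidden once, and the
    hidden word becomes the label, so no human annotation is needed.
    """
    words = sentence.split()
    examples = []
    for i, word in enumerate(words):
        # Replace the i-th word with the mask token and keep the rest as context.
        masked = words[:i] + [mask_token] + words[i + 1:]
        examples.append((" ".join(masked), word))
    return examples


examples = make_masked_examples("robots help human workers")
for masked_input, target in examples:
    print(f"{masked_input!r} -> {target!r}")
```

The point of the sketch is that the labels come for free from the text itself, which is what lets pretraining scale to very large unlabeled corpora before any fine-tuning on a downstream task.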

Summary This paper introduces the notion of foundation models: models that “are trained on broad data at scale and are adaptable to a wide range of downstream tasks,” such as BERT, DALL-E, and GPT-3. While performing transfer learning on pre-trained models has been possible for decades, the authors argue that GPT-3 and similar models pose unique opportunities and risks due to their enormous and unprecedented scale. The authors note that foundation models are particularly important because of “emergence” and “homogenization.” Emergence refers to the property that the behavior of these systems is “implicitly induced rather than explicitly constructed.” Homogenization refers to techniques for building machine learning systems consolidating around foundation models (e.g. fine-tuning GPT-3 for various downstream tasks). While this creates exciting new possibilities, it also has drawbacks, such as causing a single point of failure.

Scope The report is largely a survey paper, discussing examples of foundation models in various contexts. There are five parts to the report. The introduction defines foundation models and the scope of the report. Section 2, “Capabilities,” discusses the core tasks that foundation models can perform, grouped under language, vision, robotics, reasoning, interaction, and philosophy. Section 3, “Applications,” discusses applications of the capabilities from Section 2, specifically focused on examples from healthcare, law, and education. Section 4, “Technology,” discusses technical details of implementing foundation models, e.g. modeling, training, evaluation, and data. Finally, Section 5, “Society,” discusses important social implications of foundation models, for example, the legality and economics of these systems.

Opportunities and Risks

To highlight a subset of the opportunities and risks considered:

Opportunities

Foundation models allow for fine-tuning / transfer learning, which is an efficient way to get good performance on downstream tasks

A large-scale model can be trained once, spreading its computational cost across the many applications that reuse it

Models fine-tuned from foundation models can use much less data

Risks

Lack of accessibility (e.g. GPT-3)

Failures in the foundation model impact all downstream tasks

Changing the foundation model is extremely expensive since it is used in many downstream applications

Editor Comments

Hugh: Since most of the largest machine learning models are trained in industry, it’s nice to see an academic institution get into the game. I’m excited to see Stanford potentially become a new center for scaling research that is subject to different pressures than those on industry labs.

Adi: It’s exciting that Stanford is entering this area, and hopefully we can see more academic institutions train and study models that approach the largest in the world. Variants of “foundation models” have existed for a very long time, though I agree with the report’s thesis that the unprecedented scale achieved with recent models (e.g. GPT-3) requires special attention to study and has unique implications.

Justin: No stranger to the inherent power in names and language, Meredith Whittaker, faculty director of the AI Now Institute, said it best: “They renamed something that already had a name; they’re called large language models... It works to disconnect LLMs from previous criticisms.”

Andrey: Following up on Justin’s comment, I think a key question with respect to this paper is whether a term like ‘foundation models’ is needed at all. While GPT-3 and the like have certainly shown immense potential for fine-tuning for many tasks, this has already been common practice in Computer Vision with models such as VGG and ResNet. So why coin the new term ‘foundation models’? Personally, I think a term such as ‘base model’ or ‘pre-trained model’ is just fine. The paper does justify this name as follows:

“We introduce the term foundation models to fill a void in describing the paradigm shift we are witnessing; we briefly recount some of our reasoning for this decision. Existing terms (e.g., pre-trained model, self-supervised model) partially capture the technical dimension of these models but fail to capture the significance of the paradigm shift in an accessible manner for those beyond machine learning

….

“We chose the new term foundation models to identify the models and the emerging paradigm that are the subject of this report. In particular, the word “foundation” specifies the role these models play: a foundation model is itself incomplete but serves as the common basis from which many task-specific models are built via adaptation. We also chose the term “foundation” to connote the significance of architectural stability, safety, and security: poorly-constructed foundations are a recipe for disaster and well-executed foundations are a reliable bedrock for future applications.”

So I can see some merit in having the term for less technical people ‘beyond machine learning’, but then again the very notion of ‘model’ is already technical and makes little sense to those beyond machine learning.

As for the report in general, it certainly is nice to have a survey and position paper on this important topic. But I do wonder if it would have made more sense not to bundle up all its various aspects into one article, and instead, to address the various dimensions it covers in separate papers.

Guest Comments

Gary Marcus & Ernest Davis (authors, Rebooting.AI): The last few years have been an eye-opening lesson in what you can do with vast amounts of data—but that doesn’t mean you should build your AI around large scale pre-trained models. It’s already clear there are many problems with these stochastic parrots in terms of reliability, interpretability, bias, etc. Still, the Stanford report is a great opportunity to ask a deep question: what should we really want the foundations of AI to provide? In an upcoming Gradient essay, we will argue that building a strong, reliable foundation may require a very different approach.

Recently From the Gradient

Yannic Kilcher on Being an AI Researcher and Educator

An Introduction to AI Story Generation

The Gradient Community Discussion Thread #2

Evan Hubinger on Effective Altruism and AI Safety

News

Rise of the robo-drama: Young Vic creates a new play using artificial intelligence The Young Vic’s new show, AI, explores these questions, casting the same technology as its virtual star. The production is not so much a piece of theatre as dramaturgy, rehearsal, and workshop all in one.

“Flying in the Dark”: Hospital AI Tools Aren’t Well Documented A new study reveals models aren’t reporting enough, leaving users blind to potential model errors such as flawed training data and calibration drift.

GPT-3 mimics human love for ‘offensive’ Reddit comments, study finds "Chatbots are getting better at mimicking human speech — for better and for worse."

'Always there': the AI chatbot comforting China's lonely millions "After a painful break-up from a cheating ex, Beijing-based human resources manager Melissa was introduced to someone new by a friend late last year."

Google’s New AI Photo Upscaling Tech is Jaw-Dropping "Photo enhancing in movies and TV shows is often ridiculed for being unbelievable, but research in real photo enhancing is actually creeping more and more into the realm of science fiction. Just take a look at Google’s latest AI photo upscaling tech."

See more at Last Week in AI.

Papers

Music Composition with Deep Learning: A Review Generating a complex work of art such as a musical composition requires exhibiting true creativity that depends on a variety of factors that are related to the hierarchy of musical language. Music generation has been faced with Algorithmic methods and recently, with Deep Learning models that are being used in other fields such as Computer Vision. In this paper, we want to put into context the existing relationships between AI-based music composition models and human musical composition and creativity processes.

Image2Lego: Customized LEGO Set Generation from Images Although LEGO sets have entertained generations of children and adults, the challenge of designing customized builds matching the complexity of real-world or imagined scenes remains too great for the average enthusiast. In order to make this feat possible, we implement a system that generates a LEGO brick model from 2D images. We design a novel solution to this problem that uses an octree-structured autoencoder trained on 3D voxelized models to obtain a feasible latent representation for model reconstruction, and a separate network trained to predict this latent representation from 2D images. … Finally, we test these automatically generated LEGO sets by constructing physical builds using real LEGO bricks.

Do Transformer Modifications Transfer Across Implementations and Applications? The research community has proposed copious modifications to the Transformer architecture since it was introduced over three years ago, relatively few of which have seen widespread adoption. In this paper, we comprehensively evaluate many of these modifications in a shared experimental setting that covers most of the common uses of the Transformer in natural language processing. Surprisingly, we find that most modifications do not meaningfully improve performance. Furthermore, most of the Transformer variants we found beneficial were either developed in the same codebase that we used or are relatively minor changes. We conjecture that performance improvements may strongly depend on implementation details and correspondingly make some recommendations for improving the generality of experimental results.

Train Short, Test Long: Attention with Linear Biases Enables Input Length Extrapolation Since the introduction of the transformer model by Vaswani et al. (2017), a fundamental question remains open: how to achieve extrapolation at inference time to longer sequences than seen during training? … We introduce a simple and efficient method, Attention with Linear Biases (ALiBi), that allows for extrapolation. ALiBi does not add positional embeddings to the word embeddings; instead, it biases the query-key attention scores with a term that is proportional to their distance. … Finally, we provide an analysis of ALiBi to understand why it leads to better performance.

Fastformer: Additive Attention Can Be All You Need The transformer is a powerful model for text understanding. However, it is inefficient due to its quadratic complexity to the input sequence length. Although there are many methods for Transformer acceleration, they are still either inefficient on long sequences or not effective enough. In this paper, we propose Fastformer, which is an efficient Transformer model based on additive attention. … Extensive experiments on five datasets show that Fastformer is much more efficient than many existing Transformer models and can meanwhile achieve comparable or even better long text modeling performance.
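As a rough illustration of the ALiBi idea summarized in the papers above (biasing query-key attention scores by a term proportional to distance instead of adding positional embeddings), here is a minimal pure-Python sketch. It assumes a causal setup in which each position attends only to itself and earlier positions, and it uses a single hypothetical `slope` value; the function names are made up for this example and it is not the authors' implementation.

```python
import math


def alibi_bias(seq_len, slope):
    """Return a seq_len x seq_len bias: -slope * distance for past positions.

    Assumption for this sketch: future positions (j > i) are masked with -inf.
    """
    return [
        [-slope * (i - j) if j <= i else float("-inf") for j in range(seq_len)]
        for i in range(seq_len)
    ]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(scores, slope):
    """Add the distance-proportional bias to raw query-key scores, then normalize."""
    n = len(scores)
    bias = alibi_bias(n, slope)
    return [softmax([scores[i][j] + bias[i][j] for j in range(n)]) for i in range(n)]


# With identical raw scores, nearer positions end up with more attention weight.
scores = [[0.0] * 3 for _ in range(3)]
weights = attention_weights(scores, slope=0.5)
for row in weights:
    print([round(w, 3) for w in row])
```

Because the bias depends only on the distance between positions, the same rule applies unchanged to sequences longer than those seen in training, which is the intuition behind the extrapolation claim in the abstract.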

Closing Thoughts

Have something to say about this edition’s topics? Shoot us an email at gradientpub@gmail.com and we will consider sharing the most interesting thoughts from readers in the next newsletter! If you enjoyed this piece, consider donating to The Gradient via a Substack subscription, which helps keep this grad-student / volunteer-run project afloat. Thanks for reading the latest Update from the Gradient!