Gradient Update #36: Argo AI shuts down and Convolutions are great for sequence modeling

Convolutional models for long sequence modeling (with author Q&A!) and thoughts on the Argo AI shutdown.

Welcome to the 36th update from the Gradient! If you were referred by a friend, subscribe and follow us on Twitter!

Want to write with us? Send a pitch using this form :)

News Highlight: Argo AI Shuts Down

Summary

Pittsburgh-based self-driving startup Argo AI is shutting down. Following in the footsteps of Uber’s and Lyft’s self-driving units, the Ford- and VW-backed startup will close, and parts of it will be absorbed into its two backers.

Background

Argo AI is just one of many competitors in the autonomous vehicle ecosystem. The company burst onto the scene in 2017 with $1 billion in funding from auto titans Ford and VW.

While the company itself will shut its doors, many of its employees will have the opportunity to continue working on the problem they have been solving at Argo: a written statement from the startup said “Many of the employees will receive an opportunity to continue work on automated driving technology with either Ford or Volkswagen, while employment for others will unfortunately come to an end.”

TechCrunch says Argo appeared to be gaining ground this year: the company has been testing self-driving Ford vehicles on roads in multiple American cities and in the EU. Last month, Argo launched a new ecosystem of services including commercial robotaxis and autonomous delivery. The shutdown highlights the difficulty of commercializing in this space and the effects of difficult-to-keep promises about the technology.

Argo’s difficulty stems from the company’s inability to attract new investors. In a third-quarter earnings report, Ford said it recorded a $2.7 billion non-cash, pre-tax impairment on its investment in Argo, which resulted in an $827 million net loss.

Why does it matter?

As of late, we are seeing a lot of shakeup in the AV startup ecosystem. As more and more entrepreneurs and operators chase after the “full self-driving” holy grail, the goalpost seems to recede further and further into the distance. As difficulties manifest, companies that put time and effort into autonomous driving have reconsidered their investments.

The numbers tell us that Argo has been a serious money sink. Furthermore, the company anticipated being able to bring autonomous vehicle technology broadly to market by 2021. Ford’s decision reflects a shift towards developing advanced driver assistance systems as opposed to full-fledged autonomous vehicles, leaving open the possibility that Ford might buy an AV system down the line rather than developing it in-house. In particular, Ford has said it would like to focus on L2 and L3 driving, which may have more near-term benefits.

Editor Comments

Daniel: Ford’s decision certainly reflects a trend among automakers: the difficulty of developing full self-driving technology, and the time and money it requires, are proving greater and greater. The question, it seems, is how many automakers and startups will follow Ford in developing something more limited and considering buying AV technology down the line. With less competitive pressure and fewer actors in the ecosystem, will the advancement of AV technology be slower? Or will the shift towards tackling more manageable problems cut through the forest and prove a better path? Furthermore, I remain skeptical about the benefits of L2/3 autonomous driving unless certain human factors considerations are included. First, many people treat partially automated vehicles as self-driving. Second, studies have shown that humans do not work well with partially automated systems.

Research Highlight: What Makes Convolutional Models Great on Long Sequence Modeling?

Summary

Researchers from Microsoft Research, UIUC, and Princeton have developed a simple, efficient, and effective global convolution for long sequence modeling. The authors identify two key design principles for convolutions in long sequence models: fewer parameters than elements of the sequence, and weights whose magnitude decays for elements that are farther away. Their proposed Structured Global Convolution (SGConv) satisfies these principles, and performs well in a variety of empirical settings.

Overview

Sequence models based on convolutions and Transformers have found tremendous success across different fields of machine learning, though they face issues when processing long sequences. Indeed, convolutional models are often based on local convolutions with a small window size, which have difficulty capturing long-range interactions. Also, standard Transformers have runtime and memory requirements that scale quadratically with the sequence length, so they cannot process very long sequences. State space models (SSMs) such as S4 have recently emerged as efficient and effective models for processing long sequences. However, such models are more involved, and require certain computational tricks and initialization schemes to work.

The authors of the current work aimed to develop models that are as effective as SSMs but contain only the necessary components, without further complexity. With an analysis of existing models and some empirical ablations, the authors identify two key design principles for a long sequence model: efficient parameterization and decaying structure.

Efficient parameterization: the convolutional kernel should have a small number of parameters, one that scales sublinearly in the sequence length.

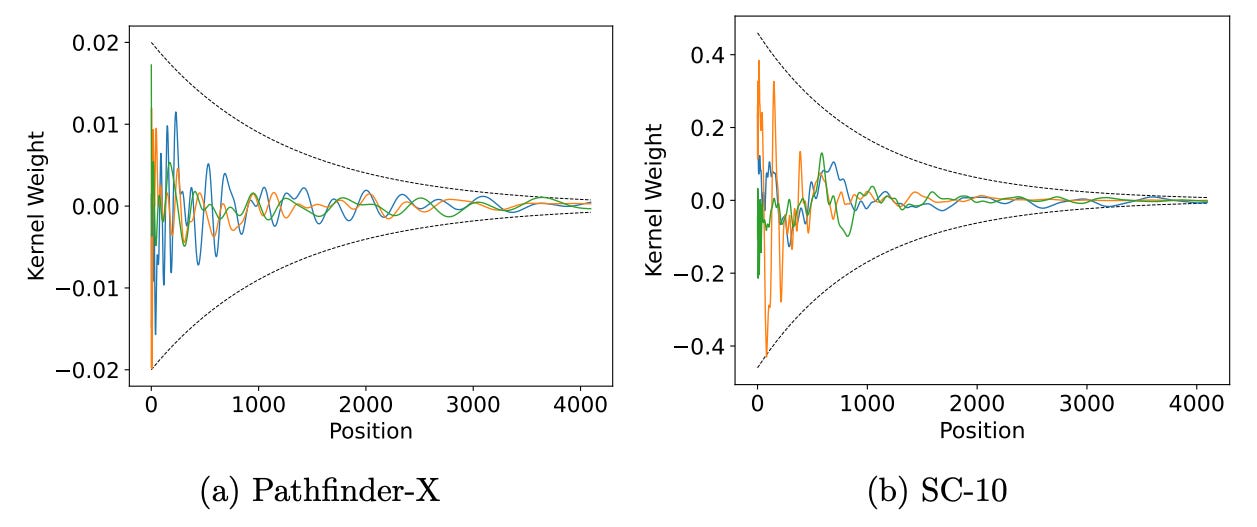

Decaying structure: the magnitude of values in the convolutional kernel should decay away from the base point. This provides the inductive bias that nearby elements in the sequences are weighted more than faraway elements.

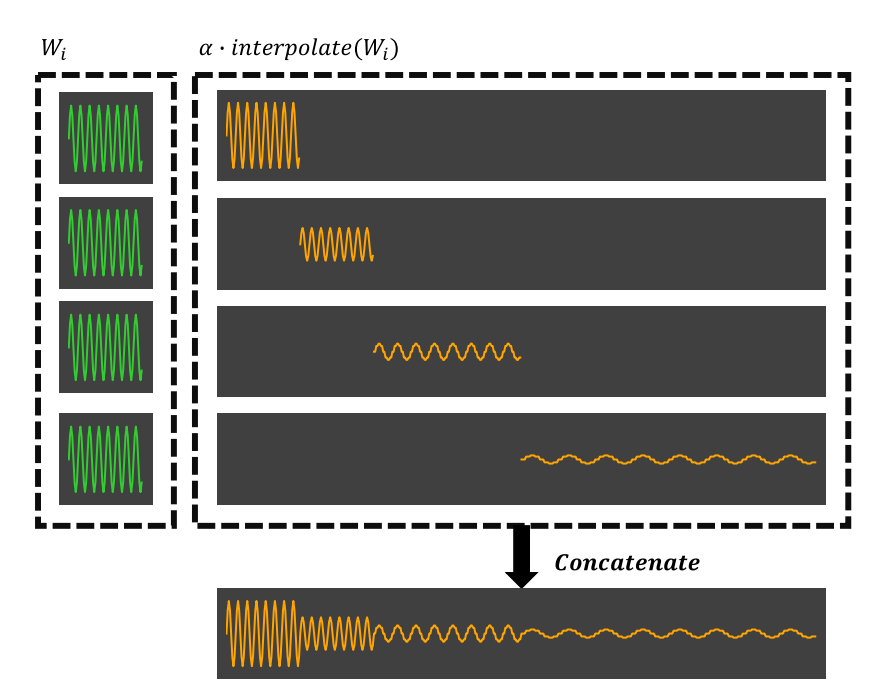

The authors propose a parameterization of a depth-wise convolution, dubbed Structured Global Convolution (SGConv), that satisfies these two design principles. The design of the convolution is simple, and nearly fits in a single equation. For a sequence of length L, SGConv only requires a number of d-dimensional base weight vectors w that is logarithmic in L. It upsamples each d-dimensional base weight vector to a longer length to use as a convolutional filter, and scales these upsampled filters so that longer ones are scaled down in magnitude.
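To make the description above concrete, here is a minimal NumPy sketch of how such a kernel could be assembled. It is an illustration under stated assumptions, not the authors' implementation: the function names, the segment lengths (each segment twice as long as the previous one, after the first two), the decay factor `alpha = 0.5`, linear interpolation for upsampling, and the final norm-based rescaling are all choices made here for the example. A single channel is shown for brevity, so each base weight vector is a 1-D array of length d.

```python
import numpy as np

def num_base_vectors(seq_len, d):
    # Hypothetical helper: number of base vectors needed so that segment
    # lengths d, d, 2d, 4d, ... add up to seq_len (seq_len / d assumed
    # to be a power of two). This grows logarithmically in seq_len.
    return (seq_len // d).bit_length()

def sgconv_style_kernel(base_weights, alpha=0.5):
    # Build one long 1-D kernel from short base weight vectors.
    d = len(base_weights[0])
    segments = []
    for i, w in enumerate(base_weights):
        w = np.asarray(w, dtype=float)
        # Assumed segment length: d for i = 0 and i = 1, then doubling.
        length = d * 2 ** max(i - 1, 0)
        # Upsample the base vector to the segment length (linear interpolation).
        src = np.linspace(0.0, 1.0, num=d)
        dst = np.linspace(0.0, 1.0, num=length)
        upsampled = np.interp(dst, src, w)
        # Scale later (longer, farther away) segments down in magnitude.
        segments.append(alpha ** i * upsampled)
    kernel = np.concatenate(segments)
    # Assumed normalization so the overall kernel scale stays fixed.
    return kernel / np.linalg.norm(kernel)

rng = np.random.default_rng(0)
seq_len, d = 1024, 16
n = num_base_vectors(seq_len, d)
base = [rng.standard_normal(d) for _ in range(n)]
kernel = sgconv_style_kernel(base)
print(kernel.shape, "kernel entries from", n * d, "parameters")
```

In this toy setting, a kernel spanning all 1024 positions is generated from 7 base vectors of 16 values each, or 112 parameters, which illustrates the efficient parameterization principle. The `alpha ** i` factor supplies the decaying structure.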

In experiments, SGConv generally outperforms other models in the Long Range Arena sequence modelling tasks, Speech Command tasks, and when used as a drop-in replacement for certain convolutional or attentional layers in some language and vision tasks. Moreover, it is more runtime efficient than S4 and attention layers, even without optimized CUDA kernels. It has several other benefits over existing models too; SGConv is simpler to implement than S4, and requires much less memory than self-attention.

Why does it matter?

As SGConv is an efficient and simple module, it can potentially find use in many models across various domains. For instance, the authors find that simply replacing some attention or convolution layers in existing models with SGConv approximately retains performance, and additional tuning or adjustments could improve results further. We also asked the first author, Yuhong Li, for a brief interview:

Q&A:

Q: What does this mean for the field of sequence modelling / long sequence modelling?

Yuhong Li: First, we appreciate Albert Gu and others for the fantastic work S4 and the follow-up papers to make S4 efficient… However, when I read the paper and the code, I struggled because of the mathematical background knowledge required for state space models and acceleration of the model. And many researchers with limited understanding of the prior works have the same confusion. Thus, it’s less intuitive to be used for the general audience. Our work is based on empirical observations and finds two intuitive principles contributing to global convolutional models… Overall, we provide a non-state-space-model angle for constructing the global convolutional kernel with the two principles we mentioned above. The SGConv code we provide is plug-and-play. And most of the readers familiar with PyTorch can understand the code and try it for their applications within hours.

Q: What further work needs to be done, and/or why did you start this line of work?

Yuhong Li: We start the line of work trying to find alternative, efficient structures for long-sequence language modeling. And the finding of SGConv is also surprising to us since we didn’t expect such simple principles can work on wide-ranging tasks. And we believe that the state-space model or SGConv has the potential for other tasks that require long-range modeling.

Editor Comments

Derek: It is cool to see such a simply explained model that is motivated so intuitively and that performs so well. The encoded inductive biases are clear, and the efficiency benefits are evident. The authors did a great job at showing the utility of SGConv as a plug-and-play module in several different architectures and domains.

New from the Gradient

Matt Sheehan: China’s AI Strategy and Governance

Luis Voloch: AI and Biology

Other Things That Caught Our Eyes

News

How Google's former CEO Eric Schmidt helped write AI laws in Washington without publicly disclosing investments in AI start-ups “About four years ago, former Google CEO Eric Schmidt was appointed to the National Security Commission on Artificial Intelligence by the chairman of the House Armed Services Committee.”

AI is disrupting long-held assumptions about universal grammar “Unlike the carefully scripted dialogue found in most books and movies, the language of everyday interaction tends to be messy and incomplete, full of false starts, interruptions, and people talking over each other.”

AI Digitally Restored a Lost Trio of Gustav Klimt Paintings. Not Everyone Is Happy About It. “Emil Wallner of the Google Arts and Culture team (above) joined Dr. Franz Smola, a leading Klimt expert at the Belvedere Museum, to use AI in an effort to re-create the paintings' colors. The end result: recolorized paintings that Dr. Smola says are in line with Klimt's style and vision.”

OpenAI will give roughly 10 AI startups $1M each and early access to its systems “OpenAI, the San Francisco-based lab behind AI systems like GPT-3 and DALL-E 2, today launched a new program to provide early-stage AI startups with capital and access to OpenAI tech and resources. Called Converge, the cohort will be financed by the OpenAI Startup Fund, OpenAI says.”

Papers

Adversarial Policies Beat Professional-Level Go AIs We attack the state-of-the-art Go-playing AI system, KataGo, by training an adversarial policy that plays against a frozen KataGo victim. Our attack achieves a >99% win-rate against KataGo without search, and a >50% win-rate when KataGo uses enough search to be near-superhuman. To the best of our knowledge, this is the first successful end-to-end attack against a Go AI playing at the level of a top human professional. Notably, the adversary does not win by learning to play Go better than KataGo -- in fact, the adversary is easily beaten by human amateurs. Instead, the adversary wins by tricking KataGo into ending the game prematurely at a point that is favorable to the adversary. Our results demonstrate that even professional-level AI systems may harbor surprising failure modes.

ERNIE-ViLG 2.0: Improving Text-to-Image Diffusion Model with Knowledge-Enhanced Mixture-of-Denoising-Experts In this paper, we propose ERNIE-ViLG 2.0, a large-scale Chinese text-to-image diffusion model, which progressively upgrades the quality of generated images by: (1) incorporating fine-grained textual and visual knowledge of key elements in the scene, and (2) utilizing different denoising experts at different denoising stages. With the proposed mechanisms, ERNIE-ViLG 2.0 not only achieves the state-of-the-art on MS-COCO with zero-shot FID score of 6.75, but also significantly outperforms recent models in terms of image fidelity and image-text alignment, with side-by-side human evaluation on the bilingual prompt set ViLG-300.

A simple, efficient and scalable contrastive masked autoencoder for learning visual representations We introduce CAN, a simple, efficient and scalable method for self-supervised learning of visual representations. Our framework is a minimal and conceptually clean synthesis of (C) contrastive learning, (A) masked autoencoders, and (N) the noise prediction approach used in diffusion models. The learning mechanisms are complementary to one another: contrastive learning shapes the embedding space across a batch of image samples; masked autoencoders focus on reconstruction of the low-frequency spatial correlations in a single image sample; and noise prediction encourages the reconstruction of the high-frequency components of an image. The combined approach results in a robust, scalable and simple-to-implement algorithm. The training process is symmetric, with 50% of patches in both views being masked at random, yielding a considerable efficiency improvement over prior contrastive learning methods. … CAN outperforms MAE and SimCLR when pre-training on ImageNet, but is especially useful for pre-training on larger uncurated datasets such as JFT-300M: for linear probe on ImageNet, CAN achieves 75.4% compared to 73.4% for SimCLR and 64.1% for MAE…

Closing Thoughts

Have something to say about this edition’s topics? Shoot us an email at editor@thegradient.pub and we will consider sharing the most interesting thoughts from readers in the next newsletter! For feedback, you can also reach Daniel directly at dbashir@hmc.edu or on Twitter. If you enjoyed this newsletter, consider donating to The Gradient via a Substack subscription, which helps keep this grad-student / volunteer-run project afloat. Thanks for reading the latest Update from the Gradient!