Gradient Update #30: AI Art and Reliable Pretrained Language Models

In which we discuss how generative AI is impacting the art landscape and Plex, a method from Google that extends pretrained language models for reliability.

Welcome to the 30th update from the Gradient! If you were referred by a friend, subscribe and follow us on Twitter!

News Highlight: A new wave of art with AI

Summary

In the past few years, artists have increasingly been using AI as a tool to generate art. While some artists train generative models on carefully curated datasets, models such as DALL-E have democratized access to generating art by allowing users to prompt AI systems with text in order to generate images. As this new art form enters our cultural landscape, it also raises questions about its originality, ownership and ‘creativity’.

Background

Algorithms have long been used to generate art, starting in the 1960s when a plotter was programmed to print geometric patterns. Since then, computer-generated art has been digitized and has witnessed many transformations, leading to today’s era of AI and generative modeling. While previously artists had to specify shapes or patterns to be drawn, digital artists today can create art with more abstract instructions.

In 2018, Mario Klingemann created the series Memories of Passersby by training a GAN on a curated set of paintings from the 17th to 19th centuries and sampling from it to create paintings that draw on the patterns and features of the images it was trained on. The role of the artist here is twofold: first, to apply their aesthetic sensibilities to curate an appropriate training dataset, and second, to choose which images from the generator are worth presenting as art.

With the development of image generation models such as DALL-E, users need not spend time curating training datasets. Instead, the model can be fed a text prompt and produce images within minutes. Here, the artist’s task is primarily to provide creative prompts, which has in turn led to an entire sub-domain of prompt engineering devoted to discovering patterns between text inputs and the images they generate. Users have realized that specific keywords and phrases help the model better understand their intentions and generate images with the desired effects. In fact, a recent “prompt book” by Guy Parsons aims to do exactly this by sharing a vast array of keywords and sentence structures to use with DALL-E 2.

Why does it matter?

While text-based image models have certainly made it easier to create art with AI, they have also raised many important questions. For instance, when a user creates an image with DALL-E, did the user create the art? Should ownership instead be attributed to the artists whose work was included in DALL-E’s training dataset, or to the engineers who developed DALL-E? Furthermore, how do we establish guidelines for plagiarism in this context? Does DALL-E produce novel images “inspired” by others in its training dataset, or does it merely compose and replicate patterns it has learned? As artists continue to use these tools to create art, and companies begin to monetize access, it’s imperative to answer these questions to ensure that this progress and increased accessibility are met with fair cultural norms.

Editor Comments

Daniel: I think the recent cases we’ve seen about patents and copyright demonstrate that our legal frameworks aren’t prepared to articulate and deal with the questions that arise with AI-generated art. The situation is indeed complicated: we’re looking at complex systems that have been developed by engineers and researchers using data generated from the work of artists and others. Those systems are then being used by still different people who are also contributing to the final product. This makes “credit assignment” really difficult. I think we can come to norms and agreements that simplify the situation (because I don’t think legislation that reflects the full nuance of what is happening here is feasible or implementable), but those norms need to reflect the current realities of our situation and not outdated assumptions about how art can and will be made.

Paper Highlight: Plex: Towards Reliability Using Pretrained Large Model Extensions

Summary

This week’s highlight works towards developing more reliable neural networks and measuring the reliability of various models. The collaboration between many authors at Google and Oxford proposes 10 task types across 40 datasets for evaluating model reliability. They develop “pretrained large model extensions” (Plex) for vision transformers (ViTs) and the T5 text encoder-decoder model, which improve these models’ performance on reliability measures.

Overview

The authors enumerate various desiderata for reliable AI models, which fall into three categories:

Uncertainty: we want the AI model to augment its predictions with some measure of confidence, which may include suggesting that a human make a difficult decision that the model is uncertain about.

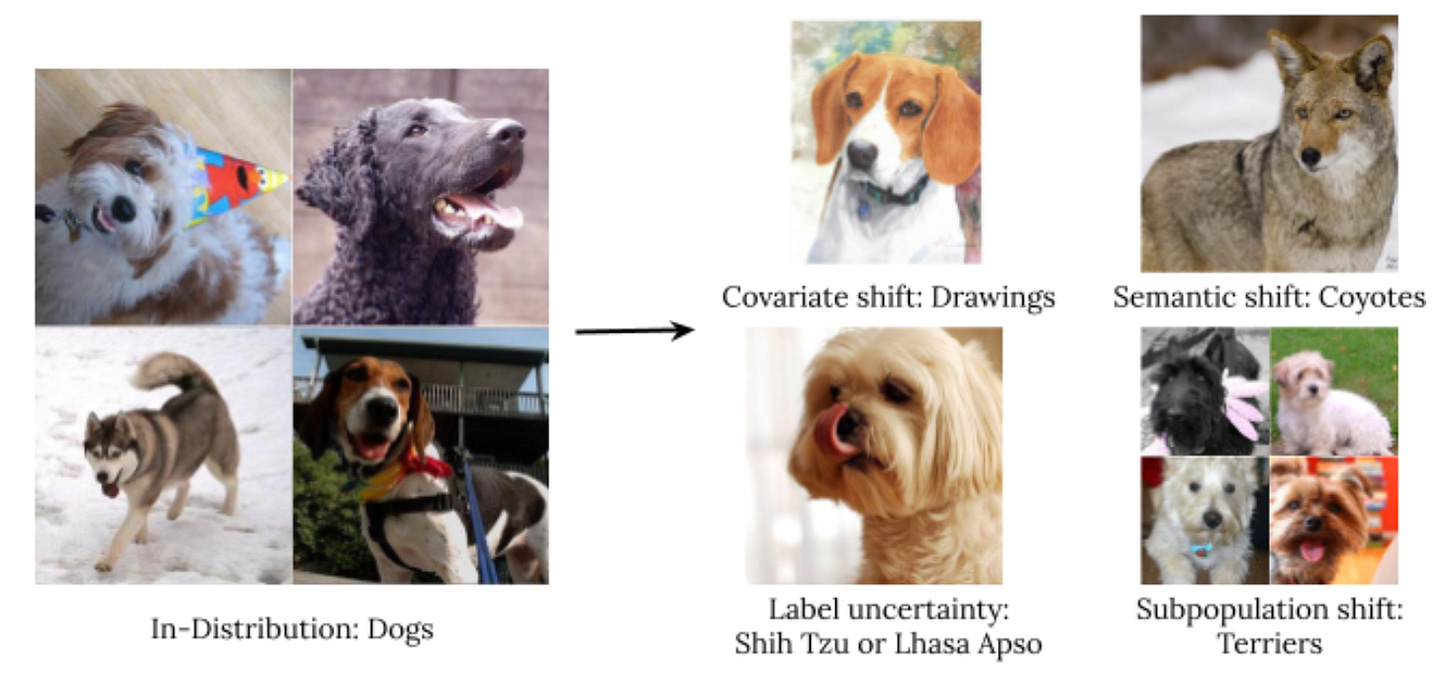

Robust Generalization: we want the model to perform well when it receives inputs from a distribution similar to its training distribution, while also doing well under various types of distribution shift (illustrated below).

Adaptation: we want the AI model to quickly learn from new data using few labeled examples; this includes active learning, in which the model aids in obtaining new data.

Types of distribution shift considered in the project
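To make the uncertainty desideratum concrete, here is a minimal sketch of a classifier that attaches a confidence to its prediction and defers to a human when that confidence is low. This is only an illustration and not code from the Plex paper: the function names, the `min_confidence` threshold of 0.8, and the choice of maximum softmax probability as the confidence score are all assumptions made for the example.

```python
import math


def softmax(logits):
    """Convert raw scores to probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predictive_entropy(probs):
    """Entropy of the predicted distribution; higher means more uncertain."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def predict_or_defer(logits, min_confidence=0.8):
    """Return a label with its confidence, or defer when confidence is too low.

    `min_confidence` is a hypothetical threshold chosen for illustration.
    """
    probs = softmax(logits)
    confidence = max(probs)
    deferred = confidence < min_confidence
    return {
        "label": None if deferred else probs.index(confidence),
        "deferred": deferred,
        "confidence": confidence,
        "entropy": predictive_entropy(probs),
    }


if __name__ == "__main__":
    print(predict_or_defer([5.0, 0.0, 0.0]))  # one class clearly dominates
    print(predict_or_defer([1.0, 0.9, 1.1]))  # near-uniform scores
```

In this sketch, a deferred prediction is what would be handed to a human reviewer. Whether the confidence score can be trusted for that purpose is exactly what reliability metrics are meant to check.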

Plex uses a standard Transformer as its base model: ViT for vision and T5 for text. Because ensembles of models are useful for measuring uncertainty and robustness, the authors use an efficient ensembling method known as BatchEnsemble. Naive ensembling trains several independent copies of the same model architecture; BatchEnsemble instead shares most weights across ensemble members, which takes less time and memory. The authors also replace the last layer of each Plex model with a Gaussian process, which captures some notion of uncertainty when inputs are very different from the training data. The resulting Plex model is first pretrained on a large dataset, then finetuned on a target dataset, and finally evaluated on various metrics.
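As a rough illustration of why BatchEnsemble is cheaper than naive ensembling, the sketch below follows the rank-1 idea from the BatchEnsemble paper: every member shares one weight matrix W, and member k only owns two small vectors r_k and s_k, so its effective weight is W multiplied elementwise by the outer product of r_k and s_k. This is not Plex's actual implementation; the function names and the toy numbers are assumptions made for the example, and it uses plain Python lists rather than a deep learning framework.

```python
def matvec_t(W, x):
    """Compute W^T x for W stored as a list of m rows with n columns each."""
    m, n = len(W), len(W[0])
    return [sum(W[i][j] * x[i] for i in range(m)) for j in range(n)]


def batchensemble_forward(W, r, s, x):
    """Member forward pass y = (W^T (x * r)) * s using only the shared W."""
    scaled_input = [xi * ri for xi, ri in zip(x, r)]
    shared_output = matvec_t(W, scaled_input)
    return [h * sj for h, sj in zip(shared_output, s)]


def explicit_member_forward(W, r, s, x):
    """Same result, but materializes the member weight W * outer(r, s)."""
    W_k = [[W[i][j] * r[i] * s[j] for j in range(len(s))] for i in range(len(r))]
    return matvec_t(W_k, x)


def parameter_counts(m, n, members):
    """Weights for one m-by-n layer: naive ensemble vs. shared W plus rank-1 factors."""
    naive = members * m * n
    batch_ensemble = m * n + members * (m + n)
    return naive, batch_ensemble


if __name__ == "__main__":
    W = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # shared weights, shape 3 x 2
    r, s = [1.0, 0.5, 2.0], [2.0, -1.0]       # one member's hypothetical factors
    x = [1.0, 2.0, 3.0]
    print(batchensemble_forward(W, r, s, x))
    print(explicit_member_forward(W, r, s, x))
    print(parameter_counts(768, 768, members=4))
```

The two forward functions agree, but the first never builds a per-member weight matrix. For a 768-by-768 layer with four members, the rank-1 factors add only a few thousand parameters on top of the shared matrix, whereas naive ensembling stores four full copies.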

The experiments in this work reveal several interesting insights into reliability. First, increasing the model size or the pretraining dataset size improves performance on the reliability metrics. On the other hand, the size of the downstream dataset does not have an obvious correlation with reliability, though there is evidence of lower reliability for data distributions that appear to be very different from the pretraining data.

Why does it matter?

Much recent work on large pretrained models has focused on predictive performance on certain downstream tasks. To develop the safe, efficient, and widely applicable AI models of the future, we also need to understand and improve the reliability of AI. Further, we must ensure that reliable AI is used where possible, as deploying unreliable AI could have unforeseen consequences under edge cases and other distribution shifts. Because Plex shows that simple modifications to widely used Transformer models can improve reliability, it is more likely that reliable models will indeed be used.

Editor Comments

Derek: It is great that simple and efficient changes can improve reliability in the ways shown in this work. This, along with the clear exposition of reliability desiderata, makes me want to further investigate these properties of neural networks.

Daniel: I’m always excited to see work that goes “beyond the scalar” in analyzing and optimizing for properties of neural networks that aren’t just performance on a particular task. We need to be able to do this more easily going forward in order to develop the types of systems people will trust and use in everyday life.

New from the Gradient

Sebastian Raschka: AI Education and Research

Lt. General Jack Shanahan: AI in the DoD, Project Maven, and Bridging the Tech-DoD Gap

Other Things That Caught Our Eyes

News

A 75-Year-Old Harvard Grad Is Propelling China's AI Ambitions “At a time when the US and China are divided on everything from economics to human rights, artificial intelligence is still a point of particular friction.”

Man Sues City of Chicago, Claiming Its AI Wrongly Imprisoned Him “Upon exoneration after nearly a year in jail, 65-year-old Chicago resident Michael Williams has filed a lawsuit against the city on grounds that a controversial AI program called ShotSpotter led to his essentially evidence-less arrest, The Associated Press reports.”

Documents Reveal Advanced AI Tools Google Is Selling to Israel “Training materials reviewed by The Intercept confirm that Google is offering advanced artificial intelligence and machine-learning capabilities to the Israeli government through its controversial ‘Project Nimbus’ contract.”

Papers

Learning Dynamics and Generalization in Reinforcement Learning Solving a reinforcement learning (RL) problem poses two competing challenges: fitting a potentially discontinuous value function, and generalizing well to new observations. In this paper, we analyze the learning dynamics of temporal difference algorithms to gain novel insight into the tension between these two objectives… temporal difference learning encourages agents to fit non-smooth components of the value function early in training, and at the same time induces the second-order effect of discouraging generalization. We corroborate these findings in deep RL agents trained on a range of environments… Finally, we investigate how post-training policy distillation may avoid this pitfall, and show that this approach improves generalization to novel environments in the ProcGen suite and improves robustness to input perturbations.

MegaPortraits: One-shot Megapixel Neural Head Avatars In this work, we advance the neural head avatar technology to the megapixel resolution while focusing on the particularly challenging task of cross-driving synthesis… We propose a set of new neural architectures and training methods that can leverage both medium-resolution video data and high-resolution image data to achieve the desired levels of rendered image quality and generalization to novel views and motion. We demonstrate that suggested architectures and methods produce convincing high-resolution neural avatars, outperforming the competitors in the cross-driving scenario…

Closing Thoughts

Have something to say about this edition’s topics? Shoot us an email at gradientpub@gmail.com and we will consider sharing the most interesting thoughts from readers in the next newsletter! If you enjoyed this piece, consider donating to The Gradient via a Substack subscription, which helps keep this grad-student / volunteer-run project afloat. Thanks for reading the latest Update from the Gradient!