Gradient Update #26: Facial Recognition in the Real World and Large Language Model Advances

In which we discuss challenges and opportunities for facial recognition companies like Clearview AI, and recent large language model advances like Imagen and zero-shot reasoning.

Welcome to the 26th update from the Gradient! If you were referred by a friend, subscribe and follow us on Twitter!

News Highlight: Facial Recognition in the Real World - Setbacks and Opportunities for Clearview AI

Summary

Clearview AI, an infamous facial recognition technology company, has recently made headlines again as regulators in different countries have limited the scope of its business. A recent lawsuit from the ACLU resulted in a settlement that banned Clearview AI from selling its product to most private businesses, while the UK fined the company $10 million for breaching UK privacy laws. However, the company also shared that it is in conversation with a partner to bring its facial recognition technology to schools in the US. Together, these developments mark a tumultuous landscape full of challenges and opportunities for the controversial tech company.

Background

Clearview AI, led by Australian entrepreneur Hoan Ton-That, boasts the largest dataset of facial images in the world, containing more than 10 billion images scraped from public sources on the open internet. The dataset powers facial recognition technology that has, until now, mostly been used by public law enforcement agencies. Clearview’s original methodology uses a “one-to-many matching” approach in which its algorithm matches a queried image against the billions of images in its database and then returns the closest matches. A version of this technology is currently being used in Ukraine to identify both Ukrainian and Russian corpses. Clearview AI returns the social media account for each soldier and the Ukrainian government informs the family of the deceased.

Many lawmakers and privacy advocates have raised concerns about Clearview. Their arguments center on consent in curating the dataset: although Clearview scrapes all images from publicly available sources, most people will never know that their photos are part of the company’s database. While that may sound legal but unethical, the company’s practices have been deemed illegal in Australia and Canada, and four countries have asked the organization to cease operations due to violations of their data privacy laws. In the US, the company’s settlement with the ACLU prevents it from doing business with most private companies, but it can continue to provide the service to local and federal law enforcement agencies (excepting those in Illinois). The company must also provide an “opt-out” option for Illinois residents and spend at least $50,000 on advertising this option.

The backlash has prompted Clearview AI to shift its methodology to a “one-to-one” matching process, which is being promoted as a “database-free” approach. The new program, called “Clearview Consent”, is aimed at commercializing facial recognition technology and is meant to be used in “consent-based workflows”. This could mean something similar to Apple’s Face ID, which users set up themselves and can then use to access their phone, make payments, and verify their identity on banking apps. A major advantage of this approach for Clearview AI is that it can continue selling its database-backed model to government agencies, while the new one-to-one face matching methodology can be sold to companies in the US without violating the terms of the ACLU settlement. The company also has the potential to excel in this area: Clearview’s algorithm performed near flawlessly in a vendor test at the National Institute of Standards and Technology, and was ranked #1 in the US and top ten globally. Privacy advocates have also welcomed this shift, as it eases concerns about the dataset being used commercially.
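For readers who want to see the difference between the “one-to-many” and “one-to-one” settings concretely, here is a minimal sketch. It is not Clearview’s algorithm: the tiny 3-dimensional vectors stand in for face embeddings, cosine similarity is an assumed comparison metric, and the names `identify`, `verify`, `gallery`, and the `0.9` threshold are all hypothetical choices made for illustration.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def identify(query, gallery, top_k=2):
    """One-to-many: score the query against every gallery entry, return the closest."""
    scored = [(label, cosine_similarity(query, emb)) for label, emb in gallery.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]

def verify(probe, enrolled, threshold=0.9):
    """One-to-one: compare the probe with a single enrolled template; no database search."""
    return cosine_similarity(probe, enrolled) >= threshold

# Toy embeddings (assumption: a real system would produce these with a face model).
gallery = {
    "person_a": [0.9, 0.1, 0.0],
    "person_b": [0.0, 1.0, 0.0],
    "person_c": [0.1, 0.0, 0.9],
}
query = [1.0, 0.2, 0.0]

matches = identify(query, gallery)             # searches the whole gallery
is_same = verify(query, gallery["person_a"])   # checks one claimed identity only
```

The privacy-relevant difference is visible in the function signatures: `identify` needs access to the whole gallery, while `verify` only ever sees the one template the user enrolled.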

Why does it matter?

The primary concern many data privacy organizations have with Clearview’s algorithm is that of “public anonymity”. The freedom of an individual to take a walk, go to the grocery store, or ride public transportation without being constantly identified and tracked would be put at risk if Clearview’s model were deployed commercially. While the billions of images the company uses to develop its model are all scraped from public sources, many individuals have raised ethical concerns around the context in which the images they post online are being used.

Something to note here is that the idea of a large web-crawled dataset of images is not novel, and if the dataset itself creates ethical concerns, then many of us in the AI community must pay heed too. Computer vision researchers may be familiar with the Flickr-Faces-HQ (FFHQ) dataset, which consists entirely of 70,000 images with permissive licenses scraped from Flickr. While the FFHQ dataset is intended only for benchmarking generative models and not for facial recognition, it did lay the groundwork for larger web-crawled datasets to be developed by others. Did individuals posting on Flickr know that their images could be a part of a large research database? It is legal in the US, but is it ethical? Perhaps it is harmless for research itself, but as companies begin to deploy new methods into production faster, new applications of the same technology tend to emerge. Now more than ever, it is perhaps necessary for AI researchers in industry and academia to consider the implications of their research outside of its intended use, and develop methodologies that allow for data to be curated ethically.

Editor Comments

Daniel: Clearview AI and facial recognition vendors in general have always been a grey area for me. Data collection methods like Clearview’s frequently feel “unethical,” but I sometimes find it frustrating that we jump to making these claims without working through logical arguments first. For instance, systems like Clearview’s could have uses in the public interest—yes, they are flawed and biased, but as facial recognition systems become more capable these claims are worth taking seriously (and, of course, this does get into debates over how facial recognition systems could create real-life panopticons and so forth). We also willingly give our data to platforms to provide better services for us (again, this is now fairly contentious), and there is probably a question over how beneficial those services are. I still think facial recognition systems need to be discussed and understood far more deeply before we attempt to pass final judgement.

Paper Highlight: Large Language Model Advances: Imagen and Zero-Shot Reasoning

Summary

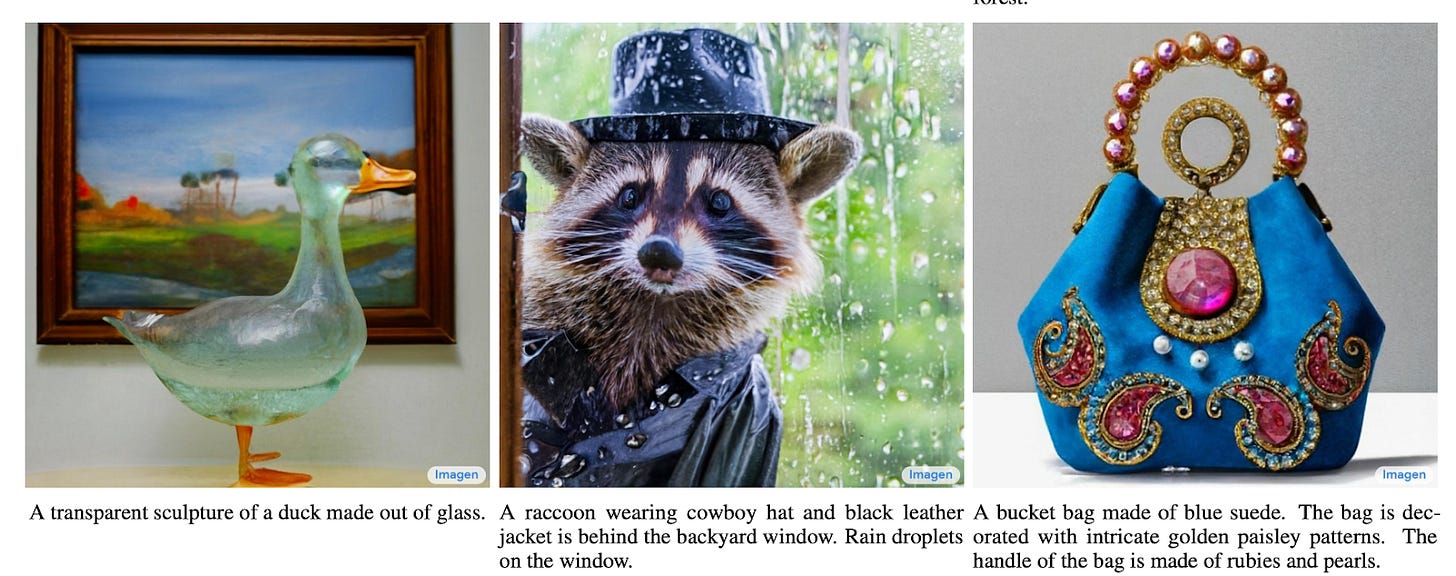

Many recent works have built off of versatile pretrained large language models (LLMs). Recently, some works have proposed relatively simple modifications to LLMs that allow them to accomplish amazing feats. Google Brain’s Imagen model uses a diffusion model on top of a frozen generic LLM to achieve state-of-the-art text-conditioned image generation. A work by the University of Tokyo and Google Brain shows that simply adding “Let’s think step by step” to the prompt allows an LLM to achieve decent zero-shot reasoning performance.

Overview

Imagen is a text-to-image diffusion model that leverages text embeddings from a generic LLM (T5 XXL). The LLM is frozen, meaning that it is not updated during training. The rest of the model is a diffusion model that is conditioned on the LLM text embeddings and generates images. In contrast, DALL-E 2 encodes text using CLIP, which was trained in a multimodal fashion to embed text and images in a shared latent space. Imagen outperforms DALL-E 2 on MS-COCO and on several human evaluation metrics, showing the power of an LLM trained only on text, with no images. Moreover, experiments show that increasing the size of the LLM significantly improves performance and has more impact than increasing the size of the diffusion model.
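To make “frozen” concrete, here is a toy sketch of training a small component on top of a fixed encoder. It is not Imagen and contains no diffusion model: the 2x3 matrix `FROZEN_ENCODER` is a hypothetical stand-in for the pretrained text encoder, the linear “head” stands in for the trainable part, and every name and number below is an assumption made for illustration.

```python
import copy

# Hypothetical stand-in for a pretrained text encoder (plays the role of the frozen LLM).
FROZEN_ENCODER = [[0.5, -0.2, 0.1],
                  [0.3, 0.8, -0.5]]

def encode(features):
    """Map a 3-dim input to a 2-dim embedding using the fixed encoder weights."""
    return [sum(w * x for w, x in zip(row, features)) for row in FROZEN_ENCODER]

def predict(head_weights, embedding):
    """Trainable part: a linear head on top of the frozen embedding."""
    return sum(w * e for w, e in zip(head_weights, embedding))

def mean_squared_error(head_weights, data):
    return sum((predict(head_weights, encode(x)) - y) ** 2 for x, y in data) / len(data)

def train_head(data, head_weights, lr=0.5, epochs=200):
    """Plain SGD that updates only the head; the encoder is never touched."""
    for _ in range(epochs):
        for features, target in data:
            embedding = encode(features)
            error = predict(head_weights, embedding) - target
            head_weights = [w - lr * error * e for w, e in zip(head_weights, embedding)]
    return head_weights

# Synthetic targets generated from a known head, so the task is learnable.
inputs = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 0]]
true_head = [2.0, -1.0]
data = [(x, predict(true_head, encode(x))) for x in inputs]

encoder_snapshot = copy.deepcopy(FROZEN_ENCODER)
initial_loss = mean_squared_error([0.0, 0.0], data)
trained_head = train_head(data, [0.0, 0.0])
final_loss = mean_squared_error(trained_head, data)
```

After training, the loss has dropped while `FROZEN_ENCODER` is identical to `encoder_snapshot`: all of the learning happened in the component built on top of the embeddings.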

In another work, researchers affiliated with the University of Tokyo and Google Brain demonstrate that a simple change can significantly improve the zero-shot reasoning capabilities of LLMs. Past works found that LLMs often achieve strong performance in intuitive, single-step tasks (system 1), yet they often struggle with multi-step reasoning tasks (system 2). This work shows that simply adding “Let’s think step by step” to the end of the prompt encourages the LLM to reason in multiple steps, and significantly improves zero-shot accuracy. The authors also tried other templates (shown in the table below), and many of them also improve accuracy by large margins, though accuracy varies significantly across templates.
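The prompting change is small enough to sketch in a few lines. The snippet below only builds prompt strings and does not call any model; the `Q:`/`A:` layout, the function names, and the sample question are assumptions made for illustration rather than details taken from this summary.

```python
def build_plain_prompt(question):
    """Baseline zero-shot prompt: just the question and an answer slot."""
    return f"Q: {question.strip()}\nA:"

def build_step_by_step_prompt(question, trigger="Let's think step by step."):
    """Same prompt, with a reasoning trigger appended at the end."""
    return f"Q: {question.strip()}\nA: {trigger}"

# Hypothetical multi-step question.
question = "I have 3 boxes with 4 apples each and I eat 2 apples. How many apples are left?"

plain_prompt = build_plain_prompt(question)
cot_prompt = build_step_by_step_prompt(question)

# Other templates can be tried by swapping the trigger sentence.
alt_prompt = build_step_by_step_prompt(question, trigger="Let's solve this problem step by step.")
```

Either string would then be sent to an LLM as-is; the only difference between the baseline and the improved setup is the trailing sentence.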

Why does it matter?

The largest language models can be very difficult and costly to train (see the logbook detailing the training process of Meta AI’s Open Pretrained Transformer). Thus, extracting as much as possible out of current LLMs can save a great deal of time and energy. It can also help us prioritize what the research community should work on: the zero-shot reasoning paper suggests that it may be beneficial to work on general prompting that works across multiple tasks, as opposed to only on narrow task-specific prompting. Since these two works achieve impressive results using only relatively simple changes on top of LLMs, other simple changes may further improve the capabilities of models that incorporate LLMs.

Editor Comments

Derek: I always like to see simple architectures and modifications that nonetheless perform well. Both of these works only require querying an LLM, without accessing or fine-tuning the underlying weights. Since many more researchers can query LLMs, whereas few researchers have the access or hardware for dealing with the parameters of trained LLMs, these two works can be used and built upon by many researchers in the future.

Daniel: I’m also interested in the simplicity that underlies these seemingly diverse improvements in capability—it seems like the path forward is often “make the language model bigger.” It’s particularly interesting how the LLM in Imagen doesn’t use a contrastive objective like CLIP in order to incorporate information about images into its text embeddings the way DALL-E 2 does.

New from the Gradient

Ben Green: “Tech for Social Good” Needs to Do More

Max Braun: Teaching Robots to Help People in their Everyday Lives

Other Things That Caught Our Eyes

News

Who’s liable for AI-generated lies? “As advanced AIs such as OpenAI’s GPT-3 are being cheered for impressive breakthroughs in natural language processing and generation — and all sorts of (productive) applications for the tech are envisaged from slicker copywriting to more capable customer service chatbots — the risks of such powerful text-generating tools inadvertently automating abuse and spreading smears can’t be ignored.”

Pony.ai loses permit to test autonomous vehicles with driver in California “The California Department of Motor Vehicles revoked Pony.ai’s permit to test its autonomous vehicle technology with a driver on Tuesday for failing to monitor the driving records of the safety drivers on its testing permit.”

Robots Pick Up More Work at Busy Factories “Robots are turning up on more factory floors and assembly lines as companies struggle to hire enough workers to fill rising orders.”

Why Isn’t New Technology Making Us More Productive? “For years, it has been an article of faith in corporate America that cloud computing and artificial intelligence will fuel a surge in wealth-generating productivity. That belief has inspired a flood of venture funding and company spending.”

Papers

ReLU Fields: The Little Non-linearity That Could In many recent works, multi-layer perceptrons (MLPs) have been shown to be suitable for modeling complex spatially-varying functions including images and 3D scenes… this expressive power of the MLPs, however, comes at the cost of long training and inference times. On the other hand, bilinear/trilinear interpolation on regular grid based representations can give fast training and inference times, but cannot match the quality of MLPs without requiring significant additional memory… We introduce a surprisingly simple change that [retains the high fidelity result of MLPs while enabling fast reconstruction and rendering times] -- simply allowing a fixed non-linearity (ReLU) on interpolated grid values. When combined with coarse-to-fine optimization, we show that such an approach becomes competitive with the state-of-the-art.

Translating Images into Maps We approach instantaneous mapping, converting images to a top-down view of the world, as a translation problem. We show how a novel form of transformer network can be used to map from images and video directly to an overhead map or bird's-eye-view (BEV) of the world, in a single end-to-end network… Posing the problem as translation allows the network to use the context of the image when interpreting the role of each pixel. This constrained formulation, based upon a strong physical grounding of the problem, leads to a restricted transformer network that is convolutional in the horizontal direction only. The structure allows us to make efficient use of data when training, and obtains state-of-the-art results for instantaneous mapping of three large-scale datasets…

Closing Thoughts

Have something to say about this edition’s topics? Shoot us an email at gradientpub@gmail.com and we will consider sharing the most interesting thoughts from readers in the next newsletter! If you enjoyed this piece, consider donating to The Gradient via a Substack subscription, which helps keep this grad-student / volunteer-run project afloat. Thanks for reading the latest Update from the Gradient!