Gradient Update #20: New Chinese Regulations, DeepNet

In which we discuss China's new regulations on recommendation algorithms and a 1000-layer transformer.

Welcome to the 20th update from the Gradient! If you were referred by a friend, subscribe and follow us on Twitter!

News Highlight: China’s Regulatory Laws on Recommendation Algorithms Go Into Effect

Summary

Earlier this year, China passed laws that severely restrict the ability of tech companies to use recommendation algorithms in their products. Many Chinese companies like e-commerce giant AliBaba, video games production house Tencent, and the global social media phenomenon TikTok, all use recommendation algorithms in their products. The laws, which went into effect on March 1st this year, are one of the first in the world to impose such sweeping regulations on tech companies and will undoubtedly set the global stage in the years to come.

Background

In the past few years, many countries have introduced legislation to rein in big tech companies that have capitalized on customer data to build their empires. In most of the western world, only plans or draft bills exist: the US has introduced an array of bills but not enacted any yet, the UK revealed plans to create a ‘pro-competition’ regulator in 2021, and the EU has continued discussions on the Digital Markets Act (DMA) to regulate big-tech companies, to be enacted next year. In the meantime, China has emerged as one of the first countries to fully enact a sweeping tech regulation bill, which lays out strict restrictions on how companies can use personal recommendation algorithms. Courtesy of CNBC, here are some key highlights from the bill:

Companies must not use algorithmic recommendations to do anything that violates Chinese laws, such as endangering national security.

Algorithmic recommendation services that provide news information need to obtain a license and cannot push out fake news. This provision was a new addition to last year’s draft rules.

Companies need to inform users about the “basic principles, purpose and main operation mechanism” of the algorithmic recommendation service.

Users must be able to opt out of having recommendation services via algorithms.

Users must be able to select or delete tags that are used to power recommendation algorithms and suggest things to them.

Companies must facilitate the “safe use” of algorithmic recommendation services for the elderly, protecting them against things like fraud and scams. This was also a new addition to the previous draft.

Why does it matter?

While the law has received global attention for being one of the first of its kind, it also merits discussion because of multiple other factors. While many of the acts' policies seem well-intentioned, their vague language and enforcement mechanisms can quickly turn things awry. For instance, the text of the bill mentions that companies must “promote positive energy” through their recommendations and not risk national security. However, what constitutes a violation of these guidelines is unclear. Does dissent against a government policy go against “positive energy”? Which social media websites risk being fined over allowing such posts on their platforms? Who determines if a news article is fake or not? Is it a government agency or a third party? Another stream of questions arises from how these laws will be enforced, which experts say will likely involve looking at proprietary source code. How will a company’s Intellectual Property rights be upheld in this situation? And are government agencies qualified enough to scour through millions of lines of code to determine if it meets the legal requirements?

Many questions remain to be asked, but what unfolds in this next chapter of tech regulation will certainly be a first for the modern world.

Editor Comments

Daniel: It’s fascinating to see how differently China has treated social media sites and AI businesses in general. It can be a bit jarring to contrast the ways in which Beijing deals with them, but considering China’s goals for AI systems puts this in an interesting light. I’ll be curious to watch how these laws evolve and whether other countries follow China’s lead on this front.

Andrey: These laws are sure to have a massive impact on many popular social media sites, shopping platforms, and countless other websites. There is a ton of evidence that social media and recommendation engines in particular have led to negative impacts for mental health, misinformation, radicalization, and other problems. Therefore, it is an interesting question whether such a powerful set of laws would be beneficial for countries in the west. It is not likely that this would even be possible, but perhaps something similar could be attempted.

Paper Highlight: DeepNet: Scaling Transformers to 1,000 Layers

Summary

The authors develop a novel normalization function and new weight initializations for Transformers, which allows successful training of Transformers with an order of magnitude more layers than previous Transformers. These proposed modifications (termed DeepNet) are easy to implement with a few changes to the code of existing Transformers. The new normalization function and initialization is supported by a theoretical analysis of gradient updates in Transformer encoders and decoders. Experiments show the effectiveness of DeepNet for improving training stability, which allows analysis of scaling laws with respect to Transformer depth and significantly improves large-scale multilingual translation performance across experiments with over 100 languages.

Overview

The Transformer architecture has revolutionized various fields of deep learning in the last several years, due in part to its scalability to large parameter counts, state-of-the-art empirical performance, and applicability to diverse tasks and application domains. However, Transformers are known to be difficult to train, so various tricks and architecture variants have been proposed to improve training stability. For instance, the original Transformer used layer normalization after the attention and feed forward network submodules (Post-LN), while later works often put LayerNorm before these submodules (Pre-LN). While often improving stability of training and ease of hyperparameter tuning, Pre-LN is still outperformed by Post-LN on various tasks.

Source: On Layer Normalization in the Transformer Architecture, Xiong et al. 2019

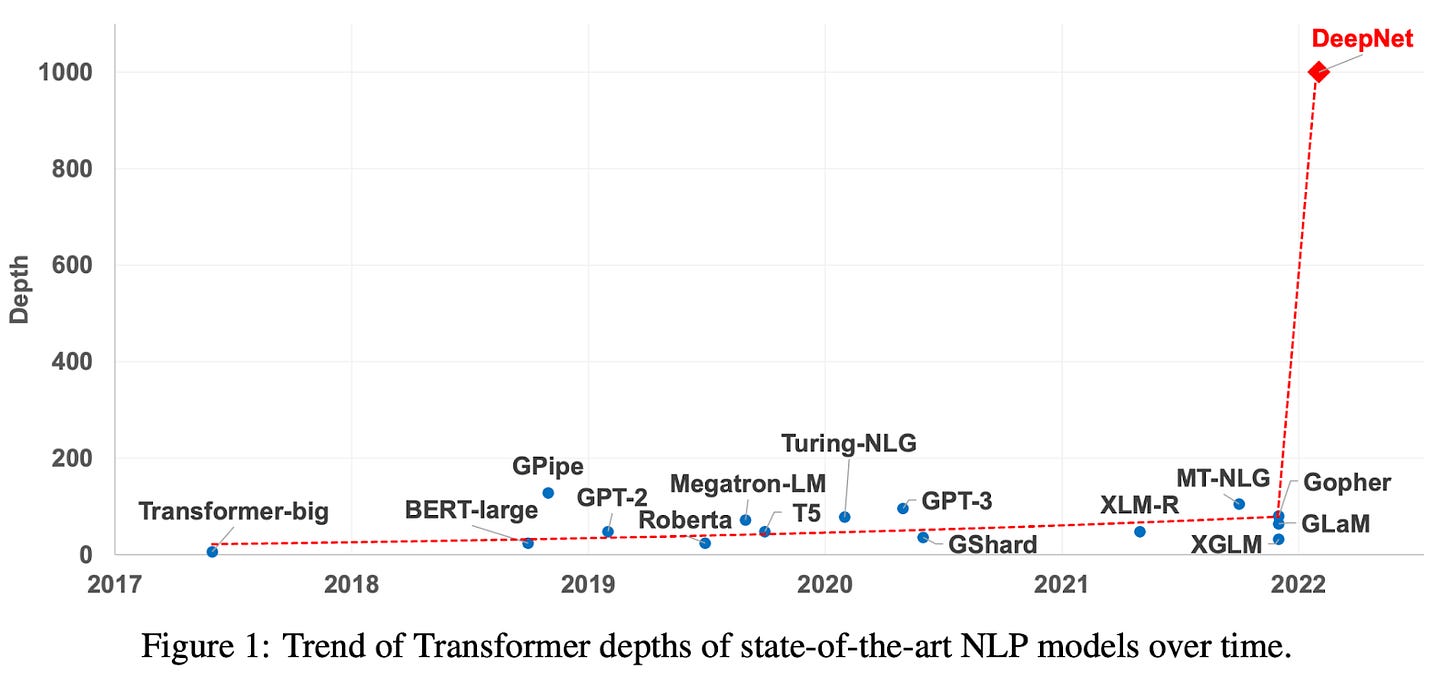

Other works have made changes through altered weight initialization or other architectural modifications. Still, Transformers have not yet been scaled up to 1000 layers, which the current work aims to do while allowing the training-stability benefits of Pre-LN along with the strong performance of Post-LN. The authors propose a few changes to a Post-LN architecture that can be captured in a small bit of code.

In particular, the authors introduce new alpha and beta parameters, which are both scalars. The alpha parameter scales the output of the submodule f, i.e. the attention module or the feedforward network of each Transformer block. The beta parameter controls the scale of the parameter initializations for the feedforward network weights, value projection, and output projection. Moreover, the authors give choices of the alpha and beta that can be computed solely from the number of layers of the Transformer.

The authors theoretically justify their choices of alpha and beta by deriving the expected updates to the Transformer as it learns by stochastic gradient descent. By setting alpha and beta correctly, the authors show that the model updates can be expected to be appropriately bounded, so there is no explosion of weights. Since the choice of alpha and beta only depend on the number of layers in the Transformer encoder and decoder, it can be computed ahead of time, before any training.

Experiments show that DeepNet can indeed be trained with 1000 layers, which is significantly higher than any previous Transformer. While other Transformer architectures drop in performance or even diverge when adding more layers, DeepNet maintains or increases performance as the number of layers is increased. In particular, deeper versions of DeepNet with 1000 layers perform much better than shallower versions on large-scale multilingual machine translation tasks.

Why does it matter?

As neural networks have been found to improve predictably in certain settings with more data, parameters, and compute time, we may expect major gains in performance from tweaking hyperparameters like depth in our networks. This work has given a way to significantly increase depth in Transformers to a level that has not been achieved before. Thus, this gives a new avenue to improve the performance of Transformers in the many domains that it has found success in — simply try increasing the depth further.

Editor Comments

Derek: As someone who can not keep up with the many Transformer modifications being proposed in the literature, this work caught my eye. For one, they have a seemingly reasonable theoretical analysis, which helps justify their proposed modifications to me, as I have not followed recent empirical results in NLP benchmarks. Also, they allow training Transformers of four-digit depth, which I expect may be a useful dimension to explore in the future.

Andrey: This is clearly cool, but the obvious question is whether greater depth is even a worthwhile goal. The abstract does state “Remarkably, on a multilingual benchmark with 7,482 translation directions, our 200-layer model with 3.2B parameters significantly outperforms the 48-layer state-of-the-art model with 12B parameters by 5 BLEU points, which indicates a

promising scaling direction”, and this increase in performance despite having a fourth of the parameters is indeed a big deal. Still, many tricks in deep learning have been shown to not be essential and replaceable with simpler tricks that work just as well, so we’ll have to see if this gets widely adopted.

New from the Gradient

Bootstrapping Labels via ___ Supervision & Human-In-The-Loop

Other Things That Caught Our Eyes

News

Dartmouth Researchers Propose A Deep Learning Model For Emotion-Based Modeling of Mental Disorders Using Reddit Conversations “According to the World Health Organization (WHO), mental diseases impact one out of every four people at some point in their lives, according to the World Health Organization (WHO).”

Can A.I. Help Casinos Cut Down on Problem Gambling? “A few years ago, Alan Feldman wandered onto the exhibition floor at ICE London, a major event in the gaming industry.”

U.S. eliminates human controls requirement for fully automated vehicles “U.S. regulators on Thursday issued final rules eliminating the need for automated vehicle manufacturers to equip fully autonomous vehicles with manual driving controls to meet crash standards.”

AI-produced images can’t fix diversity issues in dermatology databases “Image databases of skin conditions are notoriously biased towards lighter skin. Rather than wait for the slow process of collecting more images of conditions like cancer or inflammation on darker skin, one group wants to fill in the gaps using artificial intelligence.”

Papers

µTransfer: A technique for hyperparameter tuning of enormous neural networks Hyperparameter (HP) tuning in deep learning is an expensive process, prohibitively so for neural networks (NNs) with billions of parameters. We show that, in the recently discovered Maximal Update Parametrization (μP), many optimal HPs remain stable even as model size changes. This leads to a new HP tuning paradigm we call *μTransfer*: parametrize the target model in μP, tune the HP indirectly on a smaller model, and *zero-shot transfer* them to the full-sized model, i.e., without directly tuning the latter at all. We verify μTransfer on Transformer and ResNet. For example, 1) by transferring pretraining HPs from a model of 13M parameters, we outperform published numbers of BERT-large (350M parameters), with a total tuning cost equivalent to pretraining BERT-large once; 2) by transferring from 40M parameters, we outperform published numbers of the 6.7B GPT-3 model, with tuning cost only 7% of total pretraining cost. A Pytorch implementation of our technique can be found at github.com/microsoft/mup and installable via pip install mup.

Vision Models Are More Robust And Fair When Pretrained On Uncurated Images Without Supervision Discriminative self-supervised learning allows training models on any random group of internet images, and possibly recover salient information that helps differentiate between the images. Applied to ImageNet, this leads to object centric features that perform on par with supervised features on most object-centric downstream tasks. In this work, we question if using this ability, we can learn any salient and more representative information present in diverse unbounded set of images from across the globe. To do so, we train models on billions of random images without any data pre-processing or prior assumptions about what we want the model to learn. We scale our model size to dense 10 billion parameters to avoid underfitting on a large data size. We extensively study and validate our model performance on over 50 benchmarks including fairness, robustness to distribution shift, geographical diversity, fine grained recognition, image copy detection and many image classification datasets. The resulting model, not only captures well semantic information, it also captures information about artistic style and learns salient information such as geolocations and multilingual word embeddings based on visual content only. More importantly, we discover that such model is more robust, more fair, less harmful and less biased than supervised models or models trained on object centric datasets such as ImageNet.

Constrained Instance and Class Reweighting for Robust Learning under Label Noise Deep neural networks have shown impressive performance in supervised learning, enabled by their ability to fit well to the provided training data. However, their performance is largely dependent on the quality of the training data and often degrades in the presence of noise. We propose a principled approach for tackling label noise with the aim of assigning importance weights to individual instances and class labels. Our method works by formulating a class of constrained optimization problems that yield simple closed form updates for these importance weights. The proposed optimization problems are solved per mini-batch which obviates the need of storing and updating the weights over the full dataset. Our optimization framework also provides a theoretical perspective on existing label smoothing heuristics for addressing label noise (such as label bootstrapping). We evaluate our method on several benchmark datasets and observe considerable performance gains in the presence of label noise.

FastFold: Reducing AlphaFold Training Time from 11 Days to 67 Hours Protein structure prediction is an important method for understanding gene translation and protein function in the domain of structural biology. AlphaFold introduced the Transformer model to the field of protein structure prediction with atomic accuracy. However, training and inference of the AlphaFold model are time-consuming and expensive because of the special performance characteristics and huge memory consumption. In this paper, we propose FastFold, a highly efficient implementation of the protein structure prediction model for training and inference. FastFold includes a series of GPU optimizations based on a thorough analysis of AlphaFold's performance. Meanwhile, with Dynamic Axial Parallelism and Duality Async Operation, FastFold achieves high model parallelism scaling efficiency, surpassing existing popular model parallelism techniques. Experimental results show that FastFold reduces overall training time from 11 days to 67 hours and achieves 7.5-9.5X speedup for long-sequence inference. Furthermore, We scaled FastFold to 512 GPUs and achieved an aggregate of 6.02 PetaFLOPs with 90.1% parallel efficiency. The implementation can be found at this https URL

Learning Robust Real-Time Cultural Transmission without Human Data Cultural transmission is the domain-general social skill that allows agents to acquire and use information from each other in real-time with high fidelity and recall. In humans, it is the inheritance process that powers cumulative cultural evolution, expanding our skills, tools and knowledge across generations. We provide a method for generating zero-shot, high recall cultural transmission in artificially intelligent agents. Our agents succeed at real-time cultural transmission from humans in novel contexts without using any pre-collected human data. We identify a surprisingly simple set of ingredients sufficient for generating cultural transmission and develop an evaluation methodology for rigorously assessing it. This paves the way for cultural evolution as an algorithm for developing artificial general intelligence.

How Do Vision Transformers Work? The success of multi-head self-attentions (MSAs) for computer vision is now indisputable. However, little is known about how MSAs work. We present fundamental explanations to help better understand the nature of MSAs. In particular, we demonstrate the following properties of MSAs and Vision Transformers (ViTs): 1 MSAs improve not only accuracy but also generalization by flattening the loss landscapes. Such improvement is primarily attributable to their data specificity, not long-range dependency. On the other hand, ViTs suffer from non-convex losses. Large datasets and loss landscape smoothing methods alleviate this problem; 2 MSAs and Convs exhibit opposite behaviors. For example, MSAs are low-pass filters, but Convs are high-pass filters. Therefore, MSAs and Convs are complementary; 3 Multi-stage neural networks behave like a series connection of small individual models. In addition, MSAs at the end of a stage play a key role in prediction. Based on these insights, we propose AlterNet, a model in which Conv blocks at the end of a stage are replaced with MSA blocks. AlterNet outperforms CNNs not only in large data regimes but also in small data regimes.

Closing Thoughts

Have something to say about this edition’s topics? Shoot us an email at gradientpub@gmail.com and we will consider sharing the most interesting thoughts from readers to share in the next newsletter! If you enjoyed this piece, consider donating to The Gradient via a Substack subscription, which helps keep this grad-student / volunteer-run project afloat. Thanks for reading the latest Update from the Gradient!