Gradient Update #12: Trouble at Google AI and Correcting Outputs of Language Models

In which we discuss Google AI’s researchers having trouble publishing after high-profile exits, and Fast Model Editing at Scale

Welcome to the 12th update from the Gradient! If you were referred by a friend, subscribe and follow us on Twitter!

News Highlight: Google AI’s Researchers have trouble publishing after high-profile exits

Summary

According to Google researchers, the company’s lawyers are making it difficult to publish their work. Concerned about stoking further public controversy, the lawyers seem to be avoiding publishing even uncontentious studies. This has resulted in a slowdown: Google’s output has dropped from nearly 1000 papers in 2020 to 618 in 2021 to date. Researchers have noted that papers on topics like bias have been subjected to intense scrutiny by lawyers who have little understanding of the technologies they are commenting on. However, when Business Insider approached Google, a spokesperson said the company’s research review process involves a number of interdisciplinary researchers and that the company has in fact been publishing at a faster rate than last year, but that the company website was not reflecting current numbers.

Background

Google has been embroiled in controversy after the high-profile exits of Timnit Gebru and Margaret Mitchell, two outspoken leaders of the company’s Ethical AI team. Gebru was terminated because senior employees were concerned about her publishing a paper on the dangers of large language models. Mitchell was later fired for, according to Google, sending internal company documents to an external email. The company has since affirmed its commitment to inclusivity and the team, but skepticism remains. Employees have expressed discontent with the company’s reorganization of the Ethical AI team, and a number of other employees have cited Gebru’s and Mitchell’s exits when leaving the company.

Why does it matter?

Google has one of the largest presences and some of the most prolific research output in the AI space, but its reputation seems to have been harmed significantly by the exits of Gebru and Mitchell. The ire directed at Google might serve as a cautionary tale for other companies that wish to stake a claim in the ethical AI space: do not tout principles that your actions contradict.

Paper Highlight: Fast Model Editing at Scale

Background

Over the past few years, advances in architectures, hardware, and pre-training techniques have led large language models (transformers) to dominate metrics leaderboards and discourse in AI ethics. Two common barriers to training and updating these models are their size and their training data. These models often have tens (or hundreds) of billions of parameters and require clusters of GPUs and other specialized hardware, creating a huge financial burden for any researcher who wants to replicate or further update them.

The second barrier comes from the training data, which is often raw or filtered snapshots of the internet (for better and worse). These massive unlabeled corpora make it next to impossible to change a piece of knowledge in the training set, to stay up to date with new developments, or to screen out the lies, fake news, racist tirades, and sexually abusive material that make up certain corners of the internet and inevitably creep into these training sets.

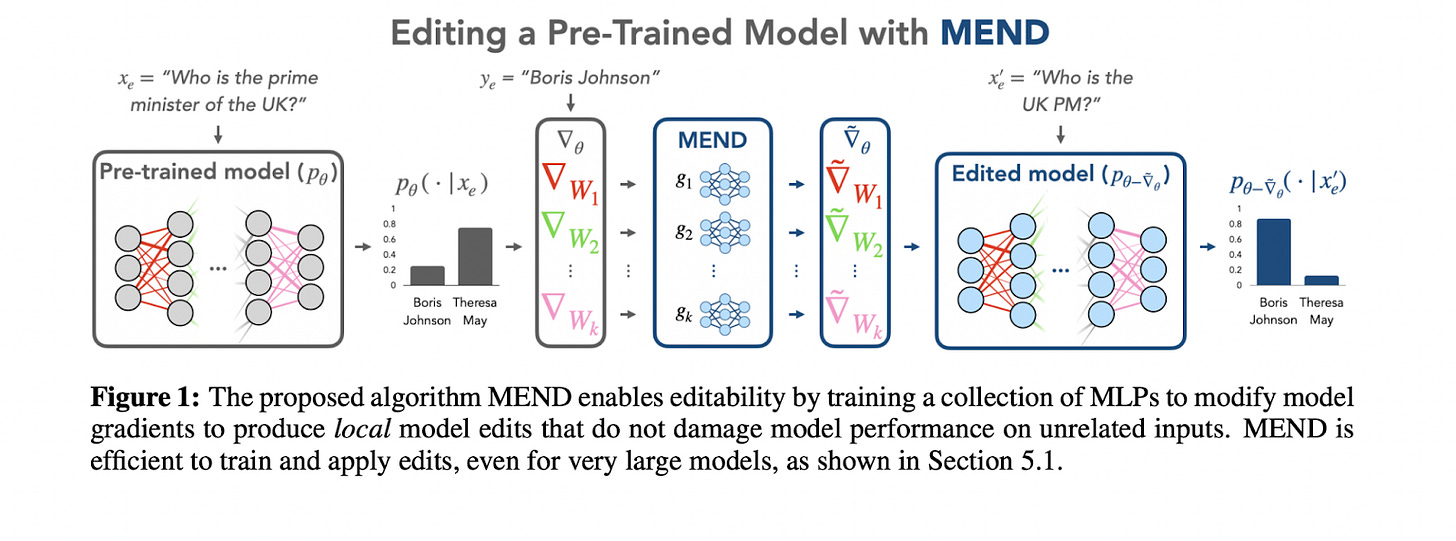

In order to correct those mistakes, as well as the numerous others that are bound to crop up in any machine learning problem space, researchers at Stanford present “Model Editor Networks with Gradient Decomposition (MEND), a collection of small auxiliary editing networks that use a single desired input-output pair to make fast, local edits to a pre-trained model”.

Overview

The main contribution of the paper is a method to modify outputs for certain inputs into the model, while not impacting accuracy on other inputs. For example, if the model incorrectly outputs ‘Theresa May’ for the input ‘Who is the prime minister of the UK’, it may be desirable to update it to instead correctly output ‘Boris Johnson’. Correcting such an output via simple fine-tuning (further training specifically on this input and output pair) will work, but is also likely to impact performance on other inputs.
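
To make the side effect of naive fine-tuning concrete, here is a toy sketch. It is not from the paper: the two-weight linear "model", the inputs, and the learning rate are all hypothetical values we picked for illustration. It shows that a gradient step which fixes the output for one input also moves the output for any other input that overlaps with it.

```python
# Toy illustration (hypothetical model and numbers, not the paper's setup):
# a single fine-tuning step on one input-output pair changes other outputs too.

def predict(w, x):
    """Linear model: dot product of weights and input."""
    return sum(wi * xi for wi, xi in zip(w, x))

def fine_tune_step(w, x, target, lr):
    """One gradient step on the squared error 0.5 * (w.x - target)^2."""
    err = predict(w, x) - target
    # The gradient with respect to w is err * x, so the update points along x.
    return [wi - lr * err * xi for wi, xi in zip(w, x)]

w = [1.0, 2.0]
x_edit, y_edit = [1.0, 1.0], 5.0   # the pair we want to correct
x_other = [0.0, 1.0]               # an unrelated input that overlaps with x_edit
x_orth = [1.0, -1.0]               # an input orthogonal to x_edit

before_other = predict(w, x_other)
before_orth = predict(w, x_orth)

# lr = 1 / ||x_edit||^2 fixes the edit example in a single step for this toy model.
w_new = fine_tune_step(w, x_edit, y_edit, lr=0.5)

print(predict(w_new, x_edit))                 # 5.0: the edit succeeded
print(before_other, predict(w_new, x_other))  # 2.0 3.0: unrelated output drifted
print(before_orth, predict(w_new, x_orth))    # -1.0 -1.0: orthogonal input unaffected
```

In a real language model almost no two inputs are perfectly orthogonal in this sense, which is one way to think about why plain fine-tuning on a single pair tends to disturb other predictions.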

The key idea of this paper is to first compute the fine-tuning gradient updates for a given input-output pair, and then use these gradient updates as an input to a set of auxiliary networks that outputs revised weight updates, with each network corresponding to a distinct layer in the model. These revised weight updates are meant to update the model to correctly output the ‘edit’ output for the ‘edit’ input, while also not changing the outputs for other inputs. These auxiliary networks are themselves trained to output such gradient updates via a dataset of edit examples and two loss components corresponding to these two objectives. Since the gradient updates are high dimensional, the paper also introduces a special parametrization that allows the auxiliary models to remain parameter efficient.
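
One way to see why a parameter-efficient parametrization is possible is the following sketch. It rests on an assumption of ours about what the parametrization exploits, since the summary above does not spell it out: for a fully connected layer and a single example, the weight gradient is the outer product of two vectors (the layer's input and the error signal at its output). An editing network can then consume those two vectors instead of the full gradient matrix. The layer sizes, values, and helper names below are hypothetical, and the code only checks the rank-1 structure; it does not implement the trained editor networks.

```python
# Sketch under stated assumptions: the per-example weight gradient of a linear
# layer is rank-1, so it can be described by m + n numbers instead of m * n.

def matvec(W, u):
    return [sum(wij * uj for wij, uj in zip(row, u)) for row in W]

def loss(W, u, target):
    """Squared error of a single linear layer z = W u (hypothetical toy loss)."""
    z = matvec(W, u)
    return 0.5 * sum((zi - ti) ** 2 for zi, ti in zip(z, target))

def outer(a, b):
    return [[ai * bj for bj in b] for ai in a]

def numerical_grad(W, u, target, eps=1e-6):
    """Central finite differences, used only to check the outer-product formula."""
    grad = []
    for i in range(len(W)):
        row = []
        for j in range(len(W[0])):
            W_plus = [r[:] for r in W]
            W_minus = [r[:] for r in W]
            W_plus[i][j] += eps
            W_minus[i][j] -= eps
            row.append((loss(W_plus, u, target) - loss(W_minus, u, target)) / (2 * eps))
        grad.append(row)
    return grad

W = [[0.5, -1.0, 2.0], [1.5, 0.0, -0.5]]   # 2 x 3 weight matrix
u = [1.0, 2.0, -1.0]                        # layer input
target = [0.0, 1.0]

z = matvec(W, u)
delta = [zi - ti for zi, ti in zip(z, target)]  # gradient of the loss w.r.t. z

grad_rank1 = outer(delta, u)                # analytic gradient: delta u^T
grad_fd = numerical_grad(W, u, target)
max_diff = max(abs(a - b)
               for ra, rb in zip(grad_rank1, grad_fd)
               for a, b in zip(ra, rb))
print(max_diff < 1e-5)                      # True: the two gradients agree

# Size of what an editor would need to read for a hypothetical 4096 x 4096 layer:
d = 4096
print(d * d, d + d)                         # 16777216 8192
```

Reading the two factors rather than the full matrix is what keeps the input to an auxiliary network small in this sketch; how the paper's networks transform those factors is beyond what this example shows.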

Why does it matter?

MEND shows great promise for correcting local model decisions without incurring the prohibitive cost of retraining, all while retaining the overall performance benefits of large language models. The first and biggest beneficiaries of MEND will no doubt be the large technology companies currently deploying language models at scale. Those companies, with access to large moderation forces, should be very interested in using human-in-the-loop feedback to refine and update their models.

New from the Gradient

Peter Henderson on RL Benchmarking, Climate Impacts of AI, and AI for Law

An interview with Stanford JD-PhD candidate Peter Henderson, whose research is on creating robust decision-making systems and new ML methods for applications that are beneficial to society.

Subscribe to The Gradient Podcast: Apple Podcasts | Spotify | Pocket Casts | RSS

Strong AI Requires Autonomous Building of Composable Models

We need strong artificial intelligence (AI) so it can help us understand the nature of the universe to satiate our curiosity, devise cures for diseases to ease our suffering, and expand to other star systems to ensure our survival. To do all this, AI must be able to learn representations of the environment in the form of models that enable it to recognize entities, infer missing information, and predict events. And because the universe is of almost infinite complexity, AI must be able to compose these models dynamically to generate combinatorial representations to match that complexity.

Other Things That Caught Our Eyes

News

Yale Researchers Use Machine Learning To Identify Brain Networks Predictive Of Aggression In Children - "Children’s mental disorders are defined as significant changes in how children learn, behave, or handle their emotions. Many times these changes create discomfort and make it difficult for them to get through the day."

NASA Wants Your Help Improving Perseverance Rover’s AI - "If you’re interested in helping out, NASA is calling on any interested humans to contribute to the machine learning algorithms that help Perseverance get around. All you need to do is look at some images and label geological features."

Photoshop’s AI Is Now So Fast That Hovering Over Objects Instantly Creates Perfect Masks - "Masking was once a painstaking photo-editing process. Now it's instantaneous."

Nearly a third of new code on GitHub is written with AI help - "The open-source software developer GitHub says as much as 30% of newly written code on its network is being done with the help of the company's AI programming tool Copilot."

Chinese military may have an edge over US on artificial intelligence research, report warns - "Report by Georgetown University says PLA spending may be higher than America’s and adds that many of its suppliers could gain access to US technology."

Papers

Mastering Atari Games with Limited Data “Reinforcement learning has achieved great success in many applications. However, sample efficiency remains a key challenge, with prominent methods requiring millions (or even billions) of environment steps to train … We propose a sample efficient model-based visual RL algorithm built on MuZero, which we name EfficientZero. Our method achieves 190.4% mean human performance and 116.0% median performance on the Atari 100k benchmark with only two hours of real-time game experience and outperforms the state SAC in some tasks on the DMControl 100k benchmark.”

Hierarchical Transformers Are More Efficient Language Models “ ... We postulate that having an explicit hierarchical architecture is the key to Transformers that efficiently handle long sequences. To verify this claim, we first study different ways to downsample and upsample activations in Transformers so as to make them hierarchical. We use the best performing upsampling and downsampling layers to create Hourglass - a hierarchical Transformer language model. Hourglass improves upon the Transformer baseline given the same amount of computation and can yield the same results as Transformers more efficiently. ...”

Understanding How Encoder-Decoder Architectures Attend “Encoder-decoder networks with attention have proven to be a powerful way to solve many sequence-to-sequence tasks. In these networks, attention aligns encoder and decoder states and is often used for visualizing network behavior. However, the mechanisms used by networks to generate appropriate attention matrices are still mysterious … In this work, we investigate how encoder-decoder networks solve different sequence-to-sequence tasks. We introduce a way of decomposing hidden states over a sequence into temporal (independent of input) and input-driven (independent of sequence position) components. This reveals how attention matrices are formed: depending on the task requirements, networks rely more heavily on either the temporal or input-driven components ...”

Robot Learning from Randomized Simulations: A Review “The rise of deep learning has caused a paradigm shift in robotics research, favoring methods that require large amounts of data. It is prohibitively expensive to generate such data sets on a physical platform. Therefore, state-of-the-art approaches learn in simulation where data generation is fast as well as inexpensive and subsequently transfer the knowledge to the real robot (sim-to-real) ... We provide a comprehensive review of sim-to-real research for robotics, focusing on a technique named 'domain randomization' which is a method for learning from randomized simulations.”

Closing Thoughts

Have something to say about this edition’s topics? Shoot us an email at gradientpub@gmail.com and we will consider sharing the most interesting thoughts from readers in the next newsletter! If you enjoyed this piece, consider donating to The Gradient via a Substack subscription, which helps keep this grad-student / volunteer-run project afloat. Thanks for reading the latest Update from the Gradient!