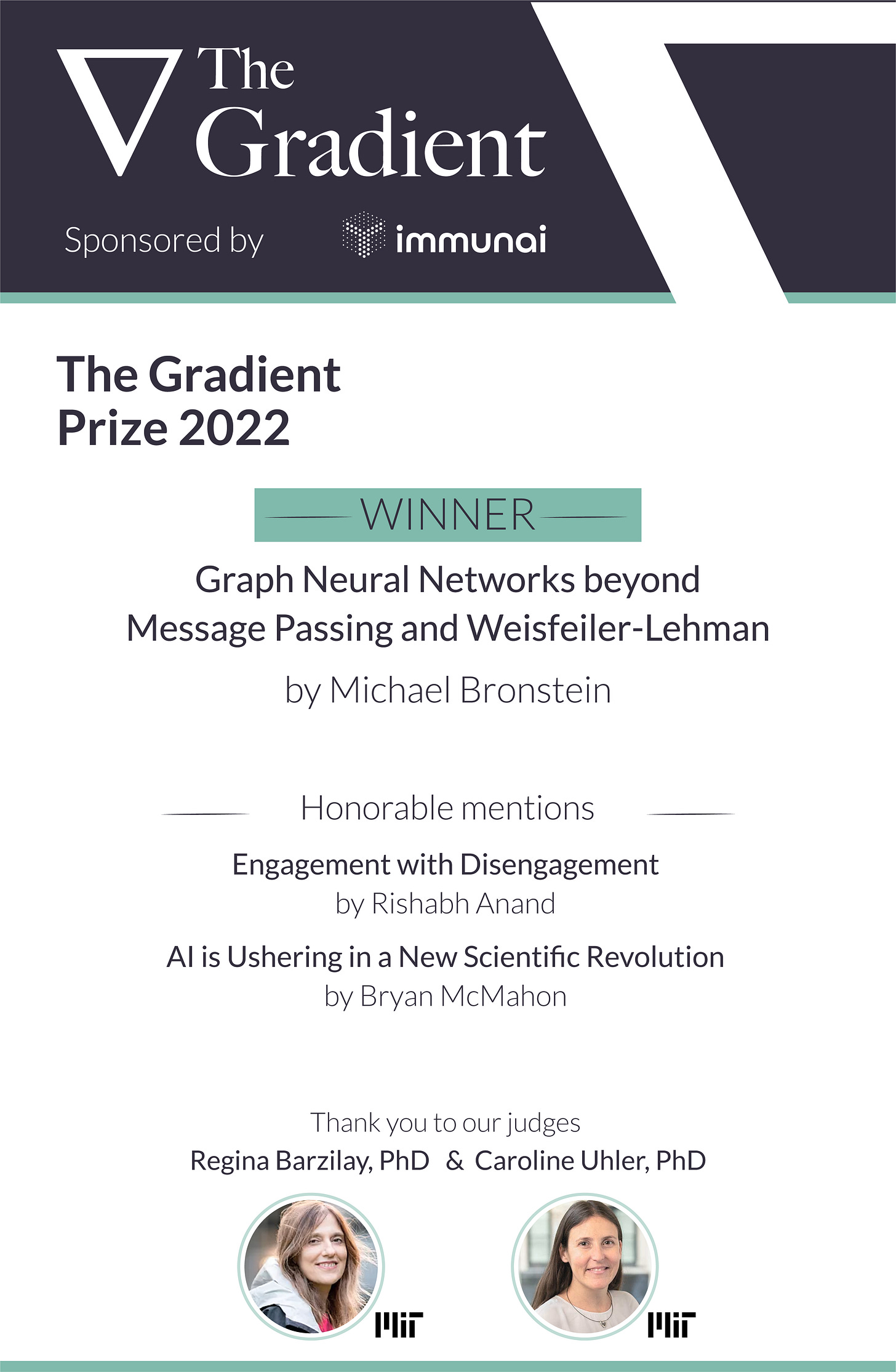

Announcing the Winners of the 2nd Gradient Prize!

$3000 in prizes, courtesy of Immunai

Earlier this year, we announced the second Gradient Prize to recognize the most outstanding contributions from the past several months. Gradient editors picked five finalists, which we sent to our two guest judges: Regina Barzilay and Caroline Uhler. Without further ado, here are the prize results!

Winner

Beyond Message Passing: a Physics-Inspired Paradigm for Graph Neural Networks by Michael Bronstein of Oxford and DeepMind

Our judges on why they chose this piece as the winner:

The article is a comprehensive review of the latest developments in GraphML, including the advantages and disadvantages of various GNN methods. We particularly like how the paper, supported by mathematically rich motivations and accompanying visuals, argues that the future of GraphML lies in physics-inspired models. Lastly, the paper touches on the engineering barriers involved in training these models, which is an important and often overlooked point.

Runners-up

Engaging with Disengagement by Rishabh Anand of the National University of Singapore

Our judges’ comments:

We are all eagerly awaiting the arrival of autonomous vehicles. The article Engaging with Disengagement elaborates on many of the policy issues that make it difficult to evaluate these systems on real roads. We like how the article provides several case studies and investigates the AV policy environment across multiple countries around the world. Bringing AI regulation and policy to light is essential for the widespread adoption of these systems.

AI is Ushering in a New Scientific Revolution by Bryan McMahon of Duke University and NEDO

Our judges’ comments:

AI is Ushering in a New Scientific Revolution nicely illustrates the success that AI has achieved in a wide variety of scientific fields. The article is entertaining, accessible, and effectively informs the public about important AI achievements of recent years.

Finalists

How AI is Changing Chemical Discovery by Victor Cano Gil

While engineering, finance, and commerce have profited immensely from novel algorithms, they are not the only fields to do so. Large-scale computation has been an integral part of the toolkit in the physical sciences for many decades, and some of the recent advances in AI have started to change how scientific discoveries are made.

One Voice Detector to Rule Them All by Alexander Veysov and Dimitrii Voronin

In our work, we are often surprised that most people know about Automatic Speech Recognition (ASR) but know very little about Voice Activity Detection (VAD). This is baffling, because VAD is among the most important and fundamental algorithms in any production or data preparation pipeline related to speech, though it remains mostly “hidden” when it works properly.

Conclusion

Want to help us give out more monetary rewards for our authors? Consider becoming a supporter on Substack! Here’s a special offer just for this occasion:

As always, we’re thrilled with the quality of contributions to The Gradient; if you haven’t had a chance to catch up with these recent pieces, we highly recommend you take a look!

And if you can’t afford to help us monetarily, consider sharing The Gradient with friends!